The Agent Data Layer 2026: How to Turn Messy Business Data Into Reliable AI Actions

Why agents fail even when the model is “good”

Most agent failures aren’t model failures. They’re data failures.

Agents break because the business data they rely on is:

- inconsistent across tools

- duplicated

- missing fields

- full of outdated notes

- trapped in PDFs and emails

- mixed with private info that shouldn’t be used

- formatted differently in every system

So the agent does what it can: it guesses. In production, that guessing is what gets labeled “hallucination.”

If you want agents that act reliably, you need a clean layer between the agent and your chaos.

That layer is the Agent Data Layer.

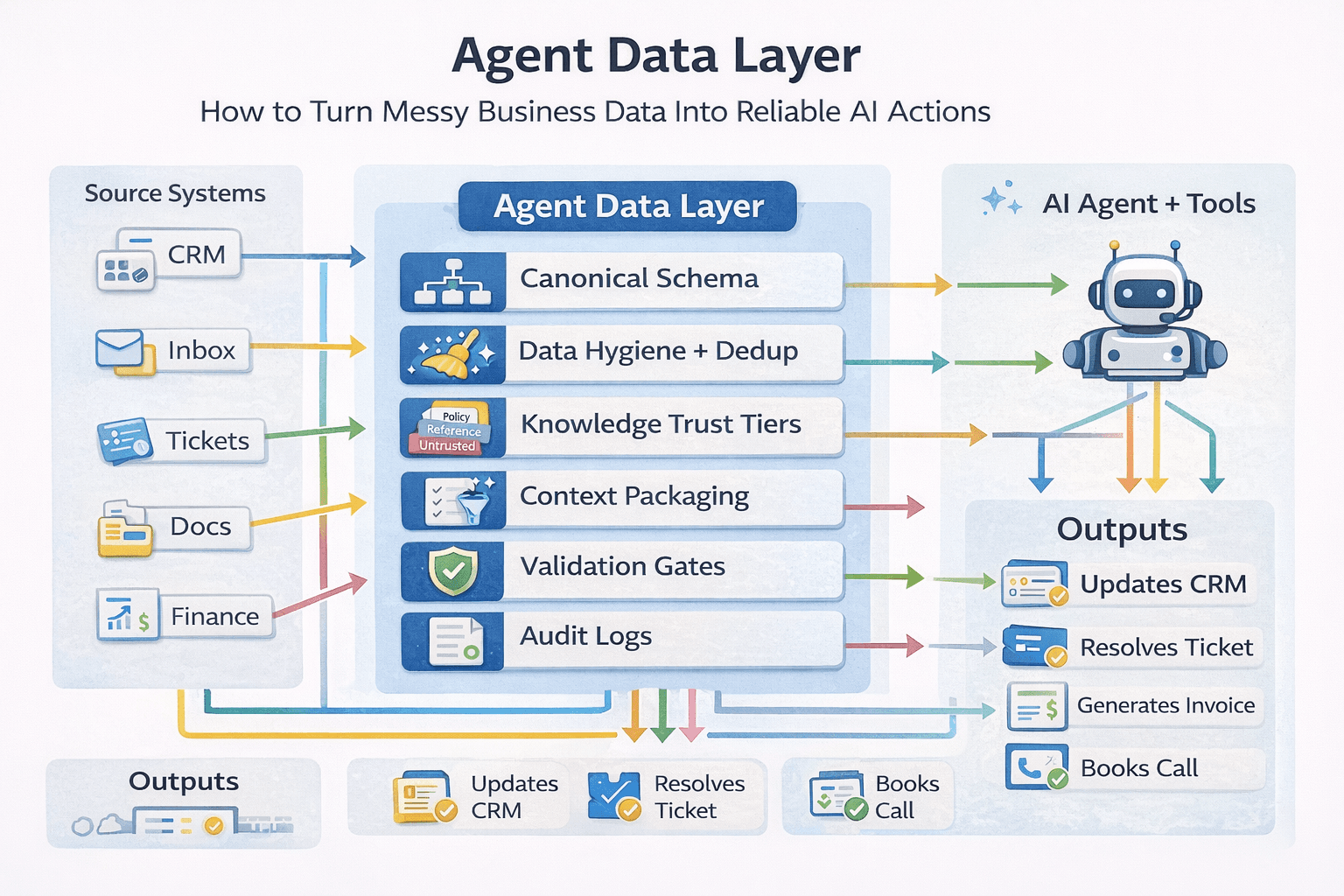

What the Agent Data Layer actually is

The Agent Data Layer is a structured, governed interface that converts raw business systems into agent-ready context and actions.

It does three things:

- standardizes data across systems

- packages context in formats the agent can use (without bloat)

- enforces rules around privacy, trust, and action permissions

It’s not a new database. It’s a design pattern that stops agents from making stuff up.

The three types of data agents need

Operational data

What’s happening right now.

Examples: CRM records, open tickets, invoices, calendars, pipeline stages.

Knowledge data

What should be true.

Examples: SOPs, policies, product docs, pricing rules, internal playbooks.

Memory data

What’s useful later.

Examples: preferences, recurring client requirements, past decisions, recent summaries.

Mix these together and you get a confused agent. Separate them and your agent becomes dependable.

The 6 components of a production Agent Data Layer

1) Canonical entities

Define your core objects once:

- Contact

- Company

- Deal

- Ticket

- Invoice

- Project

- Task

Then map every tool to the same schema. If “company_name” is three different fields in three systems, the agent has no way to know they describe the same company, and it will act inconsistently from one run to the next.
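To make the mapping idea concrete, here is a minimal sketch. The tool names (`crm`, `billing`, `helpdesk`) and their field names are assumptions for illustration, not real vendor schemas.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Company:
    """Canonical Company entity that every tool is mapped onto."""
    name: str
    domain: str


# Hypothetical per-tool field names; real systems will differ.
FIELD_MAPS = {
    "crm": {"name": "company_name", "domain": "website"},
    "billing": {"name": "account", "domain": "domain"},
    "helpdesk": {"name": "org", "domain": "org_domain"},
}


def to_canonical(source: str, record: dict) -> Company:
    """Translate one tool's raw record into the canonical Company."""
    mapping = FIELD_MAPS[source]
    return Company(
        name=record[mapping["name"]].strip(),
        domain=record[mapping["domain"]].strip().lower(),
    )


crm_view = to_canonical("crm", {"company_name": " Acme ", "website": "ACME.com"})
billing_view = to_canonical("billing", {"account": "Acme", "domain": "acme.com"})
print(crm_view == billing_view)
```

Once both records resolve to the same canonical object, the agent never has to reason about which field name belongs to which tool.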

2) Data hygiene and deduplication rules

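Humans tolerate duplicates because they can tell from context which record is the real one. Agents can’t, so a duplicate often means the agent reads or updates the wrong record.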

You need rules like:

- one contact per email

- one company per domain

- merge logic for duplicates

- “latest wins” for stale fields

- confidence scores for uncertain merges
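A rough sketch of two of these rules, "one contact per email" and "latest wins", might look like the following. The record shape (`email`, `updated_at`, and the other fields) is assumed for illustration, and confidence scoring for uncertain merges is left out.

```python
from datetime import datetime


def merge_contacts(records: list[dict]) -> list[dict]:
    """Collapse records that share an email; newer non-empty values win."""
    merged: dict[str, dict] = {}
    # Process oldest first so later records overwrite earlier ones.
    for rec in sorted(records, key=lambda r: r["updated_at"]):
        key = rec["email"].strip().lower()
        target = merged.setdefault(key, {})
        for field_name, value in rec.items():
            # An empty value never erases an older, populated one.
            if value not in (None, ""):
                target[field_name] = value
        target["email"] = key  # store the normalized email
    return list(merged.values())


contacts = merge_contacts([
    {"email": "Ann@Example.com", "phone": "111", "title": "CTO",
     "updated_at": datetime(2025, 1, 1)},
    {"email": "ann@example.com", "phone": "222", "title": "",
     "updated_at": datetime(2025, 6, 1)},
])
```

Here the newer phone number replaces the older one, while the blank title in the newer record does not wipe out "CTO".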

3) Trust tiers for knowledge

Not all docs are equal. Label them:

- “policy truth” (approved SOPs)

- “reference” (notes, wiki pages)

- “untrusted” (random uploads, scraped pages)

Agents should treat “policy truth” as rules and treat “untrusted” as suggestions.
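One way to express that distinction in code is to tag each document with a tier and split retrieval results before they reach the prompt. This is a sketch under assumed names (`Trust`, `split_by_trust`, and the `text`/`trust` keys), not a prescribed API.

```python
from enum import IntEnum


class Trust(IntEnum):
    UNTRUSTED = 0  # random uploads, scraped pages
    REFERENCE = 1  # notes, wiki pages
    POLICY = 2     # approved SOPs


def split_by_trust(docs: list[dict]) -> dict[str, list[str]]:
    """Policy docs become rules; everything else becomes ranked hints."""
    rules = [d["text"] for d in docs if d["trust"] == Trust.POLICY]
    lower = [d for d in docs if d["trust"] != Trust.POLICY]
    # Higher-trust hints come first so they get priority in the prompt.
    lower.sort(key=lambda d: d["trust"], reverse=True)
    return {"rules": rules, "hints": [d["text"] for d in lower]}


context = split_by_trust([
    {"text": "Refunds are allowed within 30 days.", "trust": Trust.POLICY},
    {"text": "Forum post about refunds.", "trust": Trust.UNTRUSTED},
    {"text": "Wiki note on refund edge cases.", "trust": Trust.REFERENCE},
])
```

The prompt can then present `rules` as constraints the agent must follow and `hints` as optional background.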

4) Context packaging

Stop dumping raw data into prompts.

Instead, package context as:

- short structured summaries

- small, relevant retrieved chunks

- validated fields only

- timestamps and source markers

The agent doesn’t need your entire CRM. It needs the 8 fields that matter for the next action.
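As an illustration, a packaging step can be as small as a function that keeps only the requested, non-empty fields and attaches a source and timestamp. The function name, field names, and output shape below are assumptions for the sketch.

```python
import json


def package_context(entity: dict, fields: list[str], source: str, as_of: str) -> str:
    """Keep only requested, non-empty fields and tag them with provenance."""
    selected = {f: entity[f] for f in fields if entity.get(f) not in (None, "")}
    return json.dumps(
        {"source": source, "as_of": as_of, "fields": selected},
        sort_keys=True,
    )


deal = {
    "id": "D-17",
    "stage": "negotiation",
    "amount": 12000,
    "owner": "",                    # empty, so it is dropped
    "internal_notes": "long text",  # not requested, so it is dropped
}
packed = package_context(
    deal, ["id", "stage", "amount", "owner"],
    source="crm", as_of="2026-01-15T09:00:00Z",
)
```

The agent receives a compact JSON string with three fields plus provenance instead of the whole record.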

5) Validation gates before action

Before the agent updates anything, validate:

- schema correctness

- required fields present

- values within allowed ranges

- no forbidden data included

- action is permitted for this workflow

This is what stops “agent did the wrong thing” incidents.
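A validation gate along these lines could be sketched as a function that returns a list of problems, where an empty list means the action may proceed. The required fields, the amount range, and the forbidden field names are all placeholder assumptions.

```python
# Hypothetical rules for a single "update deal" workflow.
REQUIRED_FIELDS = ("deal_id", "amount")
FORBIDDEN_FIELDS = {"ssn", "card_number"}
AMOUNT_RANGE = (0, 1_000_000)


def validate_action(action: dict, allowed_actions: set[str]) -> list[str]:
    """Return every rule the proposed action violates (empty list = OK)."""
    errors = []
    if action.get("type") not in allowed_actions:
        errors.append("action not permitted")
    payload = action.get("payload", {})
    for name in REQUIRED_FIELDS:
        if name not in payload:
            errors.append(f"missing field: {name}")
    amount = payload.get("amount")
    if amount is not None:
        if not isinstance(amount, (int, float)):
            errors.append("amount must be a number")
        elif not AMOUNT_RANGE[0] <= amount <= AMOUNT_RANGE[1]:
            errors.append("amount out of range")
    if FORBIDDEN_FIELDS.intersection(payload):
        errors.append("forbidden data in payload")
    return errors


good = validate_action(
    {"type": "update_deal", "payload": {"deal_id": "D-17", "amount": 500}},
    allowed_actions={"update_deal"},
)
bad = validate_action(
    {"type": "delete_deal", "payload": {"amount": -5, "ssn": "000"}},
    allowed_actions={"update_deal"},
)
```

Returning all violations at once, rather than stopping at the first, makes rejected actions easier to debug and to log.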

6) Auditability

For every agent decision, you should be able to answer:

- what data did it use

- where did it come from

- what transformation happened

- what action was executed

- who approved if required

Auditability is how you scale agents without fear.
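The five questions above map naturally onto one record per agent run. The sketch below assumes an in-memory list as the log and made-up field names; a real system would likely write to durable, append-only storage.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class AuditRecord:
    run_id: str
    inputs: dict           # what data the agent used
    sources: list          # where that data came from
    transformations: list  # what happened to it along the way
    action: dict           # what action was executed
    approved_by: Optional[str] = None  # who approved, if required
    logged_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def append_audit(log: list, record: AuditRecord) -> str:
    """Serialize the record as one JSON line and append it to the log."""
    line = json.dumps(asdict(record), sort_keys=True)
    log.append(line)
    return line


audit_log: list = []
append_audit(audit_log, AuditRecord(
    run_id="run-001",
    inputs={"deal_id": "D-17", "stage": "negotiation"},
    sources=["crm"],
    transformations=["mapped to canonical Deal"],
    action={"type": "update_deal", "stage": "closed_won"},
    approved_by="ops-lead",
))
```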

The practical workflow: how an agent should interact with business data

A stable agent flow looks like:

- Retrieve canonical entity (Contact/Company/Deal)

- Pull only relevant operational fields

- Retrieve knowledge from trusted-tier sources

- Produce a structured action plan

- Validate plan against rules

- Execute via tools

- Write back structured updates

- Log the run for audits

That’s how you get “agent as operator” instead of “agent as improviser.”
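The flow can be sketched as a single function that takes each step as a callable. Every name here (`run_agent_step` and its parameters) is hypothetical, and the execute and write-back steps are folded into one `execute` call for brevity.

```python
from typing import Any, Callable


def run_agent_step(
    entity_id: str,
    fetch_entity: Callable[[str], dict],
    fetch_knowledge: Callable[[dict], list],
    plan: Callable[[dict, list], dict],
    validate: Callable[[dict], list],
    execute: Callable[[dict], Any],
    log: list,
) -> Any:
    """Retrieve, plan, validate, execute, and log one agent action."""
    entity = fetch_entity(entity_id)       # canonical entity, relevant fields only
    knowledge = fetch_knowledge(entity)    # trusted-tier knowledge
    action = plan(entity, knowledge)       # structured action plan
    errors = validate(action)              # validation gate
    if errors:
        # Rejected plans are logged but never executed.
        log.append({"entity_id": entity_id, "status": "rejected", "errors": errors})
        return None
    result = execute(action)               # tool call plus write-back
    log.append({"entity_id": entity_id, "status": "executed",
                "action": action, "result": result})
    return result


run_log: list = []
outcome = run_agent_step(
    "D-17",
    fetch_entity=lambda i: {"id": i, "stage": "negotiation"},
    fetch_knowledge=lambda e: ["Discounts above 20% need approval."],
    plan=lambda e, k: {"type": "update_deal", "id": e["id"]},
    validate=lambda a: [],
    execute=lambda a: "updated",
    log=run_log,
)
```

Passing the steps in as callables keeps the control flow testable without any real CRM, retriever, or model behind it.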

The agency opportunity: sell the data layer, not the model

Everyone can plug a model into a workflow.

Most businesses cannot:

- clean their CRM

- normalize their data

- structure their knowledge

- build validation + audit systems

So your offer becomes:

- implement the Agent Data Layer

- clean and standardize key entities

- build retrieval and knowledge trust tiers

- add validation gates and audit logs

- then deploy agents on top

This is high-value work because it solves the real bottleneck.

Agents don’t fail because AI isn’t smart enough.

They fail because business data is a swamp.

Build an Agent Data Layer and your automations stop guessing. They start executing reliably.

Neuronex Intel

System Admin