Agent Memory in 2026: How to Build AI Agents That Remember Without Becoming Creepy or Wrong

Why agent memory is suddenly the main event

Agents without memory are goldfish with access to your business systems. They can be helpful in the moment, but they don’t build continuity.

Agents with memory can:

- remember preferences and recurring tasks

- carry context across days and weeks

- improve performance over time

- reduce repeated questions and token waste

- feel closer to “AGI-style” continuity

But memory also introduces the two failures that kill trust:

- remembering the wrong thing

- remembering too much

So building memory does not mean “store everything.” It means designing memory like a product: deciding what gets stored, for how long, and when it is allowed to be used.

The two kinds of memory every real agent needs

Semantic memory

Stable facts and preferences that should not change often.

Examples:

- brand tone and style rules

- business hours, policies, pricing rules

- preferred tools and workflows

- product specifications and FAQs

Semantic memory should be curated, validated, and slow-changing.

Episodic memory

Short-lived context from specific interactions and events.

Examples:

- a client said “follow up next week”

- a ticket was escalated due to a billing mismatch

- a sales lead asked about integration details

- last week’s campaign results and learnings

Episodic memory should decay over time, be summarized rather than kept raw, and be retrievable only when relevant.

If you mix the two, the agent treats one-off events as standing facts (or standing facts as disposable) and becomes confidently inconsistent.
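The split can be made concrete with a minimal Python sketch. It assumes semantic memory is a small curated dictionary and episodic memory is a list of timestamped notes whose weight halves every seven days. The `Episode` class, the `episode_weight` function, the half-life, the 0.25 cutoff, and the sample values are all illustrative assumptions, not a prescribed design.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Episode:
    text: str
    created_at: datetime


def episode_weight(episode, now, half_life_days=7.0):
    """Exponential decay: the weight halves every `half_life_days`."""
    age_days = (now - episode.created_at).total_seconds() / 86400
    return 0.5 ** (age_days / half_life_days)


# Semantic memory: curated key/value facts, changed only by explicit edits.
# The values here are made up for the example.
semantic = {"brand_tone": "punchy and direct", "business_hours": "09:00-17:00"}

# Episodic memory: timestamped events that fade as they age.
now = datetime(2026, 1, 15, tzinfo=timezone.utc)
episodes = [
    Episode("client said: follow up next week", now - timedelta(days=7)),
    Episode("ticket escalated: billing mismatch", now - timedelta(days=28)),
]

weights = [episode_weight(e, now) for e in episodes]
# Keep only episodes that are still "fresh enough" (assumed cutoff: 0.25).
fresh = [e.text for e in episodes if episode_weight(e, now) >= 0.25]
```

Here the week-old follow-up note keeps a weight of 0.5 and stays retrievable, while the four-week-old escalation drops to 0.0625 and falls below the cutoff. The semantic dictionary is never touched by the decay logic, which is the point of keeping the two apart.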

The biggest mistake: “memory as a dump”

Most teams build memory like this:

- throw transcripts into a vector DB

- retrieve random chunks later

- hope it “remembers” correctly

This creates three problems:

- memory hallucinations (agent invents continuity that didn’t happen)

- memory pollution (irrelevant chunks dominate retrieval)

- creepy personalization (it recalls details that feel invasive)

The fix is to treat memory as a controlled system, not a storage bucket.

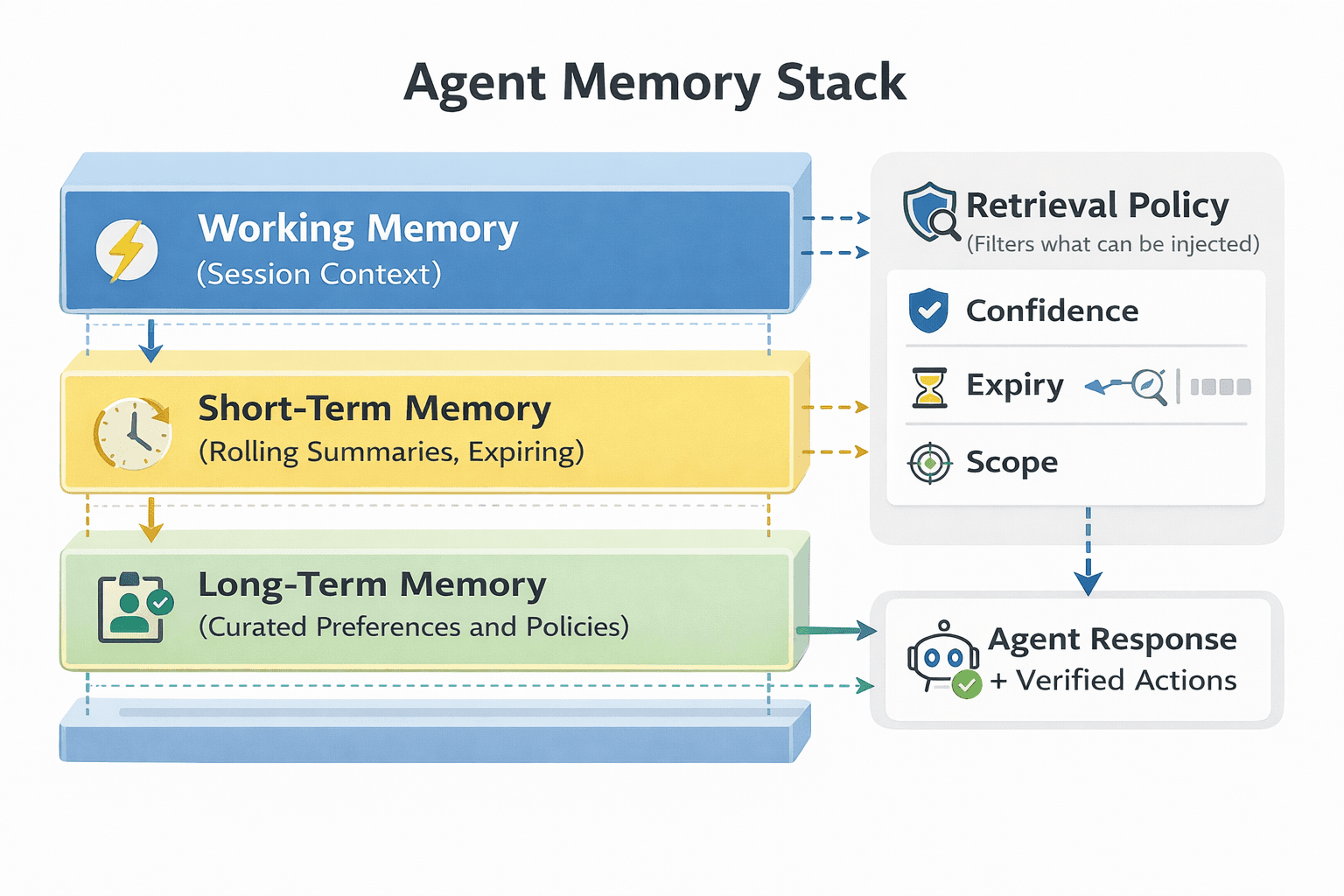

A production-grade memory architecture

A real agent memory stack should include these layers.

Working memory

What the agent uses right now inside the current session.

- short and explicit

- always updated

- never permanently stored by default

Short-term memory

A rolling summary of recent interactions.

- summarized, not raw

- expires automatically

- used for continuity over days, not months
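One way to picture a short-term layer is a bounded, rolling store with a time-to-live. The sketch below is hypothetical: `ShortTermMemory`, the seven-day `ttl`, and the `max_items` cap are assumptions chosen for illustration, summaries are assumed to be produced elsewhere (for example by a summarization step), and entries are assumed to be added in chronological order.

```python
from collections import deque
from datetime import datetime, timedelta, timezone


class ShortTermMemory:
    """Rolling store of interaction summaries that expire after `ttl`."""

    def __init__(self, ttl=timedelta(days=7), max_items=20):
        self.ttl = ttl
        # A bounded deque: once full, the oldest summary is discarded.
        self.items = deque(maxlen=max_items)

    def add(self, summary, now):
        # Assumes calls arrive in chronological order.
        self.items.append((now, summary))

    def recall(self, now):
        # Drop expired summaries from the front, then return the rest.
        while self.items and now - self.items[0][0] > self.ttl:
            self.items.popleft()
        return [summary for _, summary in self.items]


start = datetime(2026, 3, 1, tzinfo=timezone.utc)
stm = ShortTermMemory(ttl=timedelta(days=7))
stm.add("Lead asked about integration details", start)
stm.add("Campaign A beat campaign B on replies", start + timedelta(days=5))

recent = stm.recall(start + timedelta(days=6))   # both summaries still live
later = stm.recall(start + timedelta(days=10))   # the first one has expired
```

Expiry happens lazily on `recall`, so nothing needs a background job; a real deployment might prefer a store with native TTL support instead.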

Long-term memory

Curated, durable info that actually improves outcomes.

- preferences

- stable facts

- playbooks and SOPs

- validated “known truths”

Memory retrieval policy

The rules for when memory is allowed to influence output.

- retrieve only when the task requires it

- log which memories were used (internally, for debugging)

- prefer high-confidence memories over vague ones

- block sensitive categories unless explicitly needed

Without a retrieval policy, memory becomes a liability.
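The rules above can be sketched as a single gate that every retrieval passes through. This is a minimal illustration, not a reference implementation: the `Memory` fields, the `SENSITIVE` category names, and the `retrieve` signature are all assumptions, and relevance is reduced to a simple category match.

```python
from dataclasses import dataclass

CONFIDENCE_RANK = {"high": 2, "medium": 1, "low": 0}
SENSITIVE = {"health", "personal", "financial"}  # hypothetical category names


@dataclass
class Memory:
    text: str
    category: str
    confidence: str  # "high", "medium", or "low"


def retrieve(memories, task_categories, allow_sensitive=False, audit_log=None):
    """Return the memories the policy allows for this task, best confidence first."""
    allowed = [
        m for m in memories
        if m.category in task_categories                      # only what the task needs
        and (allow_sensitive or m.category not in SENSITIVE)  # block sensitive by default
    ]
    allowed.sort(key=lambda m: CONFIDENCE_RANK[m.confidence], reverse=True)
    if audit_log is not None:
        audit_log.extend(m.text for m in allowed)  # record what was used, for debugging
    return allowed


store = [
    Memory("prefers short summaries", "style", "medium"),
    Memory("brand tone is punchy and direct", "style", "high"),
    Memory("mentioned a medical appointment", "health", "high"),
]
log = []
used = retrieve(store, {"style", "health"}, audit_log=log)
nothing = retrieve(store, set())  # a task that needs no memory gets none
```

Even though the task asked for the `health` category, that memory is withheld because `allow_sensitive` was not set, and the high-confidence style rule is returned ahead of the medium-confidence one.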

How to make memory accurate instead of delusional

Accuracy comes from constraints and verification.

Use write rules

Only store memory when:

- it is stable

- it will matter later

- it is validated

- it improves outcomes

- it is not sensitive or creepy

A simple rule: if it doesn’t change future decisions, don’t store it.
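As a rough sketch, the write rules can be expressed as a checklist that a candidate memory must pass before it is stored. The `Candidate` fields and the `should_store` function are hypothetical; how each flag gets set (by a classifier, a human reviewer, or a heuristic) is left open here.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    text: str
    stable: bool
    matters_later: bool
    validated: bool
    improves_outcomes: bool
    sensitive: bool


def should_store(c):
    """Return (decision, reason). Every write rule must pass."""
    checks = [
        (c.stable, "not stable"),
        (c.matters_later, "will not matter later"),
        (c.validated, "not validated"),
        (c.improves_outcomes, "does not improve outcomes"),
        (not c.sensitive, "sensitive"),
    ]
    for passed, reason in checks:
        if not passed:
            return False, reason  # report the first rule that failed
    return True, "ok"


accepted = should_store(Candidate(
    "SOP requires approval before sending invoices",
    stable=True, matters_later=True, validated=True,
    improves_outcomes=True, sensitive=False,
))
rejected = should_store(Candidate(
    "user mentioned weekend plans",
    stable=False, matters_later=False, validated=False,
    improves_outcomes=False, sensitive=True,
))
```

Returning a reason alongside the decision makes rejected writes easy to audit later.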

Store structured memory, not blobs

Store memory as objects:

- type: preference, policy, fact, workflow

- scope: personal, team, org

- confidence: high/medium/low

- timestamp and expiry

- source: where it came from (chat, doc, admin input)

This prevents “random text chunk” memory chaos.
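A memory object with those fields might look like the following dataclass. The class name `MemoryRecord`, the `content` field, and the `is_expired` helper are assumptions added for illustration; the `Literal` annotations document the allowed values but are not enforced at runtime without a type checker or extra validation.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Literal, Optional


@dataclass(frozen=True)
class MemoryRecord:
    content: str
    type: Literal["preference", "policy", "fact", "workflow"]
    scope: Literal["personal", "team", "org"]
    confidence: Literal["high", "medium", "low"]
    source: Literal["chat", "doc", "admin input"]
    created_at: datetime
    expires_at: Optional[datetime] = None  # None means no scheduled expiry

    def is_expired(self, now: datetime) -> bool:
        return self.expires_at is not None and now >= self.expires_at


created = datetime(2026, 1, 10, tzinfo=timezone.utc)
record = MemoryRecord(
    content="Prefers short summaries",
    type="preference",
    scope="personal",
    confidence="high",
    source="chat",
    created_at=created,
    expires_at=created + timedelta(days=180),  # assumed retention window
)
```

Because every record carries its own scope, confidence, source, and expiry, retrieval and cleanup can filter on fields instead of guessing from raw text.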

Add verification before acting

When memory affects actions (sending emails, changing records), the agent should:

- confirm key details

- cross-check the source system

- prefer live tool reads over stale memory

Memory should guide. Tools should verify.
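A minimal sketch of "memory guides, tools verify": before acting on a remembered value, the agent reads the same field from the source system and lets the live value win. The function `verified_value`, its status strings, and the dictionary standing in for a CRM are hypothetical; a real integration would call the actual system's API and handle its specific errors.

```python
def verified_value(field, remembered, read_live):
    """Prefer a live read over memory.

    Returns (value, status), where status is "confirmed", "stale_memory",
    or "unverified" (the source system could not be reached).
    """
    try:
        live = read_live(field)
    except ConnectionError:
        # No live check was possible: the caller should confirm with the user
        # before taking any irreversible action.
        return remembered, "unverified"
    if live == remembered:
        return live, "confirmed"
    return live, "stale_memory"  # the source system wins over memory


# A plain dict stands in for the source system in this example.
crm = {"billing_email": "accounts@example.com"}

value, status = verified_value(
    "billing_email", "old-billing@example.com", crm.__getitem__
)
```

In this run the remembered address is out of date, so the function returns the live address with the status `stale_memory`, which is also a useful signal to update or expire the memory itself.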

How to avoid creepy memory while still personalizing

The line is simple: memory should feel like competence, not surveillance.

Good memory feels like:

- “you prefer short summaries”

- “your brand tone is punchy and direct”

- “you use HubSpot and Slack”

- “your SOP requires approval before sending invoices”

Creepy memory feels like:

- personal life details that don’t improve work

- old irrelevant events resurfacing

- sensitive details remembered forever

Best practice: keep memory tied to workflow, business preferences, and explicit user benefit.

What agent memory enables for agencies

This is where you can sell retainers.

Memory-powered agents can deliver:

- persistent lead follow-up behavior

- client-specific tone and messaging consistency

- faster onboarding for new team members

- fewer repeated explanations and lower token costs

- compounding performance over time

You don’t sell “memory.” You sell:

- “this agent gets better every week”

- “it learns your process”

- “it stops asking dumb repeat questions”

That’s what clients actually want.

Agent memory is the difference between a chatbot and an operator.

But memory done wrong creates hallucinations, privacy problems, and creepy behavior. Memory done right makes the agent feel competent, consistent, and genuinely useful.

Build memory like a system: structured, scoped, validated, retrievable only when needed, and designed to decay where appropriate.

Neuronex Intel

System Admin