Agent Observability 2026: How to Monitor, Debug, and Improve AI Agents in Production

Why AI agents fail differently than normal software

Traditional software fails in predictable ways: a function throws, a service times out, a database returns an error. AI agents fail like overconfident creative interns.

Agent failures usually look like:

- the agent “decides” the wrong plan

- it calls the right tool with the wrong schema

- it loops because it thinks it’s being helpful

- it produces a polished answer that’s subtly wrong

- it works perfectly in your demo and dies on messy real-world inputs

If you don’t have observability, you can’t tell whether the problem is:

- the prompt

- the model choice

- retrieval quality

- tool reliability

- rate limiting

- or plain user ambiguity

No logs means no fixes. No fixes means the agent becomes a monthly embarrassment.

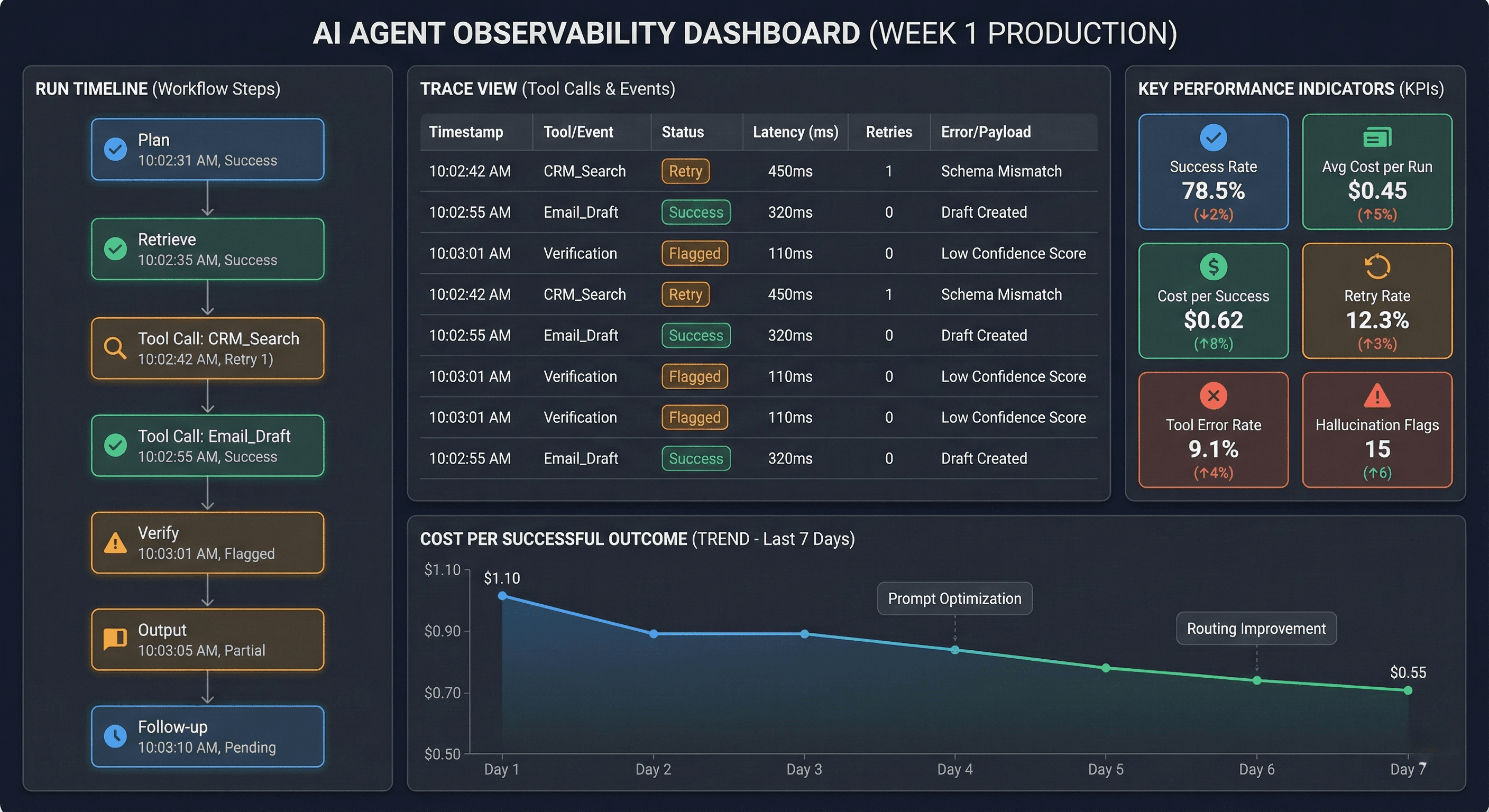

What “agent observability” actually means

Agent observability is the ability to answer these questions for any run:

- What did the agent decide to do and why?

- What context did it use and where did it come from?

- Which tools did it call, with what inputs, and what happened?

- How many retries did it take?

- How much did it cost?

- Did the outcome match what the business wanted?

This is not optional. It’s the difference between “agent automation” and “random expensive behavior.”

The observability stack for AI agents

To run agents in production, you need visibility across five layers.

Trace timeline

A run should have a timeline like:

Plan → Retrieve → Tool Call → Verify → Output → Follow-up

Each step needs a timestamp, a duration, and a success state.
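One way to capture that timeline is a small context manager that records every step, even when the step raises. This is a minimal sketch, not a tracing library: `Trace`, `Step`, and the step names are hypothetical, and a real system would likely export these records to whatever tracing backend you already run.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Step:
    name: str
    started_at: float               # wall-clock timestamp, seconds since epoch
    duration_s: float = 0.0
    ok: bool = True
    error: Optional[str] = None


@dataclass
class Trace:
    run_id: str
    steps: List[Step] = field(default_factory=list)

    @contextmanager
    def step(self, name: str):
        """Record one timeline step, even if the body raises."""
        record = Step(name=name, started_at=time.time())
        t0 = time.perf_counter()
        try:
            yield record
        except Exception as exc:
            record.ok = False
            record.error = repr(exc)
            raise
        finally:
            record.duration_s = time.perf_counter() - t0
            self.steps.append(record)


trace = Trace(run_id="run-001")
with trace.step("plan"):
    pass                            # planning would happen here
with trace.step("retrieve"):
    pass                            # retrieval would happen here
try:
    with trace.step("tool_call"):
        raise TimeoutError("CRM lookup timed out")
except TimeoutError:
    pass                            # the failure is already on the timeline

for s in trace.steps:
    print(f"{s.name:<10} ok={s.ok} duration={s.duration_s:.4f}s error={s.error}")
```

The failed step stays on the timeline with its error attached, which is the part you need when reconstructing a bad run.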

Tool call logs

For every tool call, capture:

- tool name

- arguments

- response payload

- status codes and errors

- retries and backoff

- latency

Tool calls are often the biggest failure source in real agent systems. If you can’t see them, you can’t fix them.
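The fields above can be captured with a thin wrapper around every tool invocation. This is a sketch under assumptions: `call_tool` and `flaky_lookup` are hypothetical names, tools are plain Python callables, and failures surface as exceptions rather than HTTP status codes. If your tools are HTTP APIs, you would also record the status code in each entry.

```python
import time
from typing import Any, Callable, Dict, List


def call_tool(name: str, fn: Callable[..., Any], args: Dict[str, Any],
              log: List[dict], max_retries: int = 2, backoff_s: float = 0.0) -> Any:
    """Call fn(**args), appending one log entry per attempt."""
    for attempt in range(max_retries + 1):
        entry = {"tool": name, "args": args, "attempt": attempt}
        t0 = time.perf_counter()
        try:
            result = fn(**args)
        except Exception as exc:
            entry.update(status="error", error=repr(exc),
                         latency_s=time.perf_counter() - t0)
            log.append(entry)
            if attempt == max_retries:
                raise                            # retries exhausted: surface the error
            delay = backoff_s * (2 ** attempt)   # exponential backoff
            entry["backoff_s"] = delay
            time.sleep(delay)
        else:
            entry.update(status="ok", response=result,
                         latency_s=time.perf_counter() - t0)
            log.append(entry)
            return result


# Hypothetical tool that fails once, then succeeds.
attempts = {"n": 0}

def flaky_lookup(email: str) -> dict:
    attempts["n"] += 1
    if attempts["n"] == 1:
        raise ConnectionError("upstream unavailable")
    return {"email": email, "plan": "pro"}


tool_log: List[dict] = []
result = call_tool("crm_lookup", flaky_lookup, {"email": "a@example.com"}, tool_log)
for entry in tool_log:
    print(entry["tool"], entry["attempt"], entry["status"])
```

Logging one entry per attempt, not one per call, is a deliberate choice here: it keeps retries visible instead of hiding them behind a final success.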

Context and retrieval logs

When the agent retrieves documents or memory, log:

- what store it searched

- what chunks it retrieved

- scores or ranking info

- how many tokens were injected

- whether citations or grounding were used

This is how you catch “garbage retrieval” that makes the agent hallucinate confidently.
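A retrieval log entry along those lines might look like the sketch below. Everything here is illustrative: `log_retrieval`, the chunk dictionary shape, the 0.5 score threshold, and the whitespace token counter are assumptions. Swap in your model's real tokenizer and whatever score scale your retriever produces.

```python
from typing import Callable, Dict, List


def whitespace_tokens(text: str) -> int:
    """Crude token estimate; replace with your model's real tokenizer."""
    return len(text.split())


def log_retrieval(store: str, query: str, chunks: List[Dict],
                  count_tokens: Callable[[str], int] = whitespace_tokens,
                  min_score: float = 0.5) -> Dict:
    """Summarize one retrieval: what was searched, what came back, how big it was."""
    per_chunk = [
        {"id": c["id"], "score": c["score"], "tokens": count_tokens(c["text"])}
        for c in chunks
    ]
    return {
        "store": store,
        "query": query,
        "chunks": per_chunk,
        "tokens_injected": sum(c["tokens"] for c in per_chunk),
        # Chunks below the threshold are candidates for "garbage retrieval".
        "low_score_ids": [c["id"] for c in per_chunk if c["score"] < min_score],
    }


record = log_retrieval(
    store="help_center",
    query="What is the refund window?",
    chunks=[
        {"id": "doc-12#3", "score": 0.91, "text": "Refunds are available within thirty days."},
        {"id": "doc-88#1", "score": 0.22, "text": "Our office dog is named Biscuit."},
    ],
)
print(record["tokens_injected"], record["low_score_ids"])
```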

Cost and budget metrics

Track per run:

- input tokens

- output tokens

- tool call count

- total runtime

- total cost

- cache hits vs misses

Then enforce budgets, because agents will happily bankrupt you with infinite curiosity.
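As a rough sketch, the per-run numbers can live in one small record with the cost derived from token counts. The prices below are placeholders, not any provider's real rates, and `RunUsage` and `estimate_cost_usd` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class RunUsage:
    input_tokens: int
    output_tokens: int
    tool_calls: int
    runtime_s: float
    cache_hits: int = 0
    cache_misses: int = 0

    @property
    def cache_hit_rate(self) -> float:
        lookups = self.cache_hits + self.cache_misses
        return self.cache_hits / lookups if lookups else 0.0


def estimate_cost_usd(usage: RunUsage, input_price_per_1k: float,
                      output_price_per_1k: float) -> float:
    """Token cost only; add per-call tool fees if your tools bill separately."""
    return (usage.input_tokens / 1000 * input_price_per_1k
            + usage.output_tokens / 1000 * output_price_per_1k)


usage = RunUsage(input_tokens=12_000, output_tokens=1_500, tool_calls=4,
                 runtime_s=18.2, cache_hits=3, cache_misses=1)
# Placeholder prices in USD per 1,000 tokens.
cost = estimate_cost_usd(usage, input_price_per_1k=0.003, output_price_per_1k=0.015)
print(f"cost=${cost:.4f} cache_hit_rate={usage.cache_hit_rate:.0%}")
```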

Outcome metrics

This is the part people skip, then wonder why nothing improves.

Define success per workflow:

- sales: booked meetings, qualified replies, pipeline created

- ops: tickets resolved, escalations reduced, time-to-close improved

- data: correct fields extracted, accuracy thresholds met

- engineering: tests passed, PR merged, issue closed

If you don’t measure outcomes, you’re measuring vibes.

What to log every single time an agent runs

If you only implement one thing, implement this.

Log fields you want on every run:

- run_id, agent_id, workflow_id

- user or account id (if multi-tenant)

- start time, end time, runtime

- model used, reasoning mode used, routing decision

- prompt version and tool schema version

- retrieved sources count and tokens injected

- full tool call list with statuses

- retry count and reasons

- final output type (message, action, ticket update, email sent)

- outcome status (success, partial, failed)

- cost estimate

- failure category if not successful

This becomes your “black box recorder.”
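One possible shape for that recorder is a single dataclass serialized as one JSON line per run. The field names mirror the list above; the class name, defaults, and example values are assumptions, and where the line is written (file, queue, log pipeline) is left open.

```python
import json
import uuid
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class RunRecord:
    agent_id: str
    workflow_id: str
    model: str
    prompt_version: str
    tool_schema_version: str
    started_at: float                      # seconds since epoch
    ended_at: float
    run_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    account_id: Optional[str] = None       # only needed if multi-tenant
    reasoning_mode: Optional[str] = None
    routing_decision: Optional[str] = None
    retrieved_sources: int = 0
    tokens_injected: int = 0
    tool_calls: List[dict] = field(default_factory=list)
    retry_count: int = 0
    retry_reasons: List[str] = field(default_factory=list)
    output_type: str = "message"           # message, action, ticket update, email sent
    outcome: str = "success"               # success, partial, failed
    cost_estimate_usd: float = 0.0
    failure_category: Optional[str] = None

    def to_json_line(self) -> str:
        payload = asdict(self)
        payload["runtime_s"] = self.ended_at - self.started_at
        return json.dumps(payload, sort_keys=True)


record = RunRecord(
    agent_id="support-agent", workflow_id="ticket-triage", model="example-model",
    prompt_version="v14", tool_schema_version="v3",
    started_at=1000.0, ended_at=1012.5,
    tool_calls=[{"tool": "crm_lookup", "status": "error"}],
    retry_count=2, retry_reasons=["schema validation failed"],
    outcome="failed", failure_category="tool_schema_mismatch",
)
line = record.to_json_line()
print(line)
```

One line per run keeps the log easy to grep and easy to load into whatever analytics tool you use later.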

The 5 failure modes you will see in week one

Agents don’t fail randomly. They fail in patterns.

Tool schema mismatch

The agent calls the right tool with the wrong shape.

Fix: strict tool schemas, structured outputs, capped retries.
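A minimal sketch of that fix, assuming a tool schema can be expressed as field name to Python type: validate the proposed arguments, feed the errors back, and give up after a fixed number of attempts. `validate_args`, `get_valid_args`, and `fake_model` are hypothetical; in practice a schema library would replace the hand-rolled check, and `propose` would be a model call.

```python
from typing import Callable, Dict, List, Tuple


def validate_args(args: Dict, schema: Dict[str, type]) -> List[str]:
    """Return a list of problems; an empty list means the arguments are valid."""
    errors = []
    for name, expected in schema.items():
        if name not in args:
            errors.append(f"missing field: {name}")
        elif not isinstance(args[name], expected):
            errors.append(f"{name}: expected {expected.__name__}, "
                          f"got {type(args[name]).__name__}")
    for name in args:
        if name not in schema:
            errors.append(f"unexpected field: {name}")
    return errors


def get_valid_args(propose: Callable[[List[str]], Dict], schema: Dict[str, type],
                   max_attempts: int = 3) -> Tuple[Dict, int]:
    """Ask `propose` for arguments, passing back the previous errors each time."""
    errors: List[str] = []
    for attempt in range(1, max_attempts + 1):
        args = propose(errors)
        errors = validate_args(args, schema)
        if not errors:
            return args, attempt
    raise ValueError(f"no valid arguments after {max_attempts} attempts: {errors}")


# Stand-in for a model: wrong type first, corrected once it sees the errors.
def fake_model(previous_errors: List[str]) -> Dict:
    if previous_errors:
        return {"ticket_id": 42, "status": "closed"}
    return {"ticket_id": "42", "status": "closed"}


schema = {"ticket_id": int, "status": str}
args, attempts_used = get_valid_args(fake_model, schema)
print(args, attempts_used)
```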

Retrieval pollution

The agent pulls irrelevant context and then “reasons” from it.

Fix: better chunking, reranking, fewer chunks, retrieve-first discipline.

Infinite helpfulness loop

The agent keeps trying because it thinks more steps = better.

Fix: step budgets, stop conditions, escalation rules.
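Here is one way those three fixes could fit together: a hard step cap, a stop condition that trips when the same action repeats, and an escalation result instead of another attempt. The function name, the action format (a hashable tuple), and the limits are all assumptions for illustration.

```python
from collections import Counter
from typing import Callable, Dict, List, Optional, Tuple

Action = Tuple[str, str]   # (tool name, serialized arguments)


def run_with_stop_conditions(next_action: Callable[[List[Action]], Optional[Action]],
                             max_steps: int = 8, max_repeats: int = 2) -> Dict:
    """Drive the agent loop until it finishes, repeats itself, or runs out of steps."""
    seen: Counter = Counter()
    history: List[Action] = []
    for _ in range(max_steps):
        action = next_action(history)
        if action is None:                       # the agent says it is done
            return {"status": "done", "steps": len(history)}
        seen[action] += 1
        if seen[action] > max_repeats:           # same call again: stop, don't retry
            return {"status": "escalated", "steps": len(history),
                    "reason": f"repeated action {action!r}"}
        history.append(action)                   # the action would execute here
    return {"status": "escalated", "steps": len(history),
            "reason": "step budget exhausted"}


# A stuck agent that keeps issuing the same search.
stuck = run_with_stop_conditions(lambda history: ("search", "pricing page"))

# A well-behaved agent that takes three distinct steps and stops.
plan = [("search", "pricing"), ("fetch", "doc-12"), ("reply", "draft")]
healthy = run_with_stop_conditions(
    lambda history: plan[len(history)] if len(history) < len(plan) else None
)
print(stuck)
print(healthy)
```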

Overconfident wrong answers

It returns something polished that’s subtly incorrect.

Fix: verification steps, citations, cross-check tools, constrained outputs.

Prompt regressions

You tweak the prompt and suddenly everything breaks.

Fix: versioned prompts, evaluation harness, rollback.
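Versioning and rollback can start very small. The sketch below keeps prompts in memory and derives the version id from a content hash, so the same text always gets the same id. `PromptRegistry` is a hypothetical name, and a real setup would more likely store prompts in version control or a database.

```python
import hashlib
from typing import List, Tuple


class PromptRegistry:
    """Append-only prompt history with content-derived version ids."""

    def __init__(self) -> None:
        self._versions: List[Tuple[str, str]] = []   # (version_id, prompt text)

    def publish(self, text: str) -> str:
        version_id = hashlib.sha256(text.encode("utf-8")).hexdigest()[:12]
        self._versions.append((version_id, text))
        return version_id

    def current(self) -> Tuple[str, str]:
        return self._versions[-1]

    def rollback(self) -> str:
        """Drop the newest version and return the id that is live again."""
        if len(self._versions) < 2:
            raise RuntimeError("nothing to roll back to")
        self._versions.pop()
        return self._versions[-1][0]


registry = PromptRegistry()
v1 = registry.publish("You are a support agent. Cite sources for every claim.")
v2 = registry.publish("You are a support agent. Be brief.")
restored = registry.rollback()       # the tweak broke things, so go back
print(v1, v2, restored)
```

Logging the version id on every run is what makes a regression traceable to a specific prompt change.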

Budgets: how to stop agents from melting your wallet

Agents need hard limits. Not suggestions.

Set budgets like:

- max tool calls per run

- max retries per tool

- max total runtime

- max total tokens

- max retrieval chunks injected

- max cost per successful task

Then define what happens when budgets are hit:

- stop and ask the user

- escalate to human review

- return partial output with a clear next step

If your agent has no budget, it’s not autonomous. It’s uncontrolled.
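A hard limit means the check runs before the work does, and hitting it produces a defined result. The sketch below illustrates that under assumptions: the class names, the default caps, and the flat per-call charges are invented for the example, and it covers only three of the budgets listed above.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Budget:
    max_tool_calls: int = 10
    max_total_tokens: int = 50_000
    max_cost_usd: float = 0.50


class BudgetExceeded(Exception):
    def __init__(self, limit: str, used: float, cap: float):
        super().__init__(f"{limit} exceeded: {used} > {cap}")
        self.limit = limit


class BudgetTracker:
    def __init__(self, budget: Budget):
        self.budget = budget
        self.tool_calls = 0
        self.total_tokens = 0
        self.cost_usd = 0.0

    def charge(self, tool_calls: int = 0, tokens: int = 0, cost_usd: float = 0.0) -> None:
        """Reserve spend before doing the work; raise if any cap is crossed."""
        self.tool_calls += tool_calls
        self.total_tokens += tokens
        self.cost_usd += cost_usd
        checks = [
            ("max_tool_calls", self.tool_calls, self.budget.max_tool_calls),
            ("max_total_tokens", self.total_tokens, self.budget.max_total_tokens),
            ("max_cost_usd", self.cost_usd, self.budget.max_cost_usd),
        ]
        for limit, used, cap in checks:
            if used > cap:
                raise BudgetExceeded(limit, used, cap)


def run_agent(tracker: BudgetTracker, planned_calls: List[str]) -> Dict:
    completed: List[str] = []
    try:
        for call in planned_calls:
            tracker.charge(tool_calls=1, tokens=800, cost_usd=0.01)  # check first
            completed.append(call)                                   # then act
    except BudgetExceeded as exc:
        # Budget hit: return partial output with a clear next step.
        return {"status": "partial", "completed": completed,
                "next_step": f"escalate to human review ({exc.limit})"}
    return {"status": "success", "completed": completed, "next_step": None}


result = run_agent(BudgetTracker(Budget(max_tool_calls=2)), ["lookup", "draft", "send"])
print(result)
```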

The evaluation harness: prompt regression testing for agents

If you are serious, treat your agent like software.

Build a test set of real cases:

- normal cases

- edge cases

- ambiguous cases

- failure cases

- adversarial cases

Then run them automatically when you change:

- prompts

- tool schemas

- models

- retrieval settings

- routing logic

Track:

- success rate

- cost per success

- time per run

- tool failure rate

- output format validity

- outcome metrics

This is how you stop “one prompt tweak” from wrecking your entire system.
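A bare-bones harness for this could look like the sketch below. It assumes the agent is a callable that returns an output and a cost, and that each test case carries its own check function. `run_evals`, `toy_agent`, and the cases are hypothetical stand-ins for your real agent and real transcripts.

```python
import time
from typing import Callable, Dict, List, Tuple


def run_evals(agent: Callable[[str], Tuple[str, float]], cases: List[Dict]) -> Dict:
    """Run every case, treating a crash as a failed case, and summarize."""
    if not cases:
        raise ValueError("need at least one test case")
    results = []
    for case in cases:
        t0 = time.perf_counter()
        try:
            output, cost_usd = agent(case["input"])
            passed = bool(case["check"](output))
        except Exception:
            cost_usd, passed = 0.0, False
        results.append({"name": case["name"], "passed": passed, "cost_usd": cost_usd,
                        "runtime_s": time.perf_counter() - t0})
    successes = sum(r["passed"] for r in results)
    total_cost = sum(r["cost_usd"] for r in results)
    return {
        "success_rate": successes / len(results),
        "cost_per_success": total_cost / successes if successes else None,
        "avg_runtime_s": sum(r["runtime_s"] for r in results) / len(results),
        "failed": [r["name"] for r in results if not r["passed"]],
    }


# Toy agent: normalizes text, crashes on empty input, charges a flat fee.
def toy_agent(text: str) -> Tuple[str, float]:
    if not text.strip():
        raise ValueError("empty input")
    return text.strip().lower(), 0.01


cases = [
    {"name": "normal", "input": "Refund", "check": lambda out: out == "refund"},
    {"name": "edge_whitespace", "input": "  Refund  ", "check": lambda out: out == "refund"},
    {"name": "failure_empty", "input": "   ", "check": lambda out: out == ""},
]
report = run_evals(toy_agent, cases)
print(report["success_rate"], report["failed"])
```

In CI, a reasonable gate is to compare `report` against the last known-good run and block the change if the success rate drops or the cost per success climbs past a tolerance you choose.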

The agency offer that prints money

Here’s the actual productized service nobody is selling properly:

Agent Monitoring + Optimization Retainer

You do:

- install observability

- set budgets and stop conditions

- categorize failures

- improve retrieval and routing

- run weekly evals and regressions

- deliver monthly performance reports

Clients pay for outcomes and stability, not for “we built an agent once and left.”

This is the difference between a one-off build and a recurring revenue system.

AI agents are not a feature. They are living systems.

If you don’t monitor them, they drift. If they drift, they fail.

If they fail, humans take back the work.

And then your “automation” becomes a fancy cost center.

Agent observability is the layer that turns agents into something you can actually trust in production.

Neuronex Intel

System Admin