AI Agent Data Leakage 2026: How Sensitive Info Escapes Through Prompts, Tools, Logs, and “Helpful” Outputs

Why agent data leakage is the real risk in 2026

Most teams think the risk is the model being “wrong.” That’s annoying, but manageable.

The real risk is the model being helpful while accidentally leaking:

- customer PII

- internal pricing and contracts

- API keys and credentials

- employee data

- private documents

- financial records

Once you connect an agent to business systems, your attack surface grows with every tool, data source, and output channel the agent can reach. Data leakage doesn’t require an attacker. It often comes from normal operations combined with sloppy design.

The four main ways agents leak sensitive data

Prompt leakage

Sensitive data gets shoved into the prompt as “context” and then:

- copied into the final output

- included in summaries

- repeated in follow-up responses

- exposed through “explain your reasoning” requests

If your agent prompt includes secrets, you’re basically storing secrets in a place designed to generate text.

Tool output leakage

Tools return raw payloads containing sensitive fields, and the agent:

- pastes them into chat

- references them in citations

- uses them in emails

- stores them in memory

- logs them verbatim

Tool responses are one of the most common leak vectors because they carry real production data rather than instructions.

Logging leakage

Teams log everything for debugging. Great. Then the logs become the breach.

Common “oops”:

- logging full prompts and tool payloads

- storing raw documents in traces

- shipping logs into third-party observability platforms

- leaving logs accessible to too many internal users

Logs are rarely protected as strictly as the systems whose data they contain.

Output leakage

The agent generates text. That text is now a distribution channel.

Sensitive data can leak through:

- customer support replies

- internal Slack messages

- auto-generated reports

- emails

- exported documents

If you don’t enforce output redaction, the agent will eventually publish something it shouldn’t.

What counts as sensitive data for agents

If you’re building for clients, assume these categories are sensitive by default:

- PII: names, emails, phone numbers, addresses

- financial: invoices, bank details, payment history

- credentials: API keys, tokens, passwords

- contracts: pricing, terms, negotiations

- HR: salaries, performance notes, private messages

- health and legal: anything regulated or high-risk

Your agent system needs to treat sensitive data like radioactive material: handle it, use it when needed, but don’t casually sprinkle it everywhere.

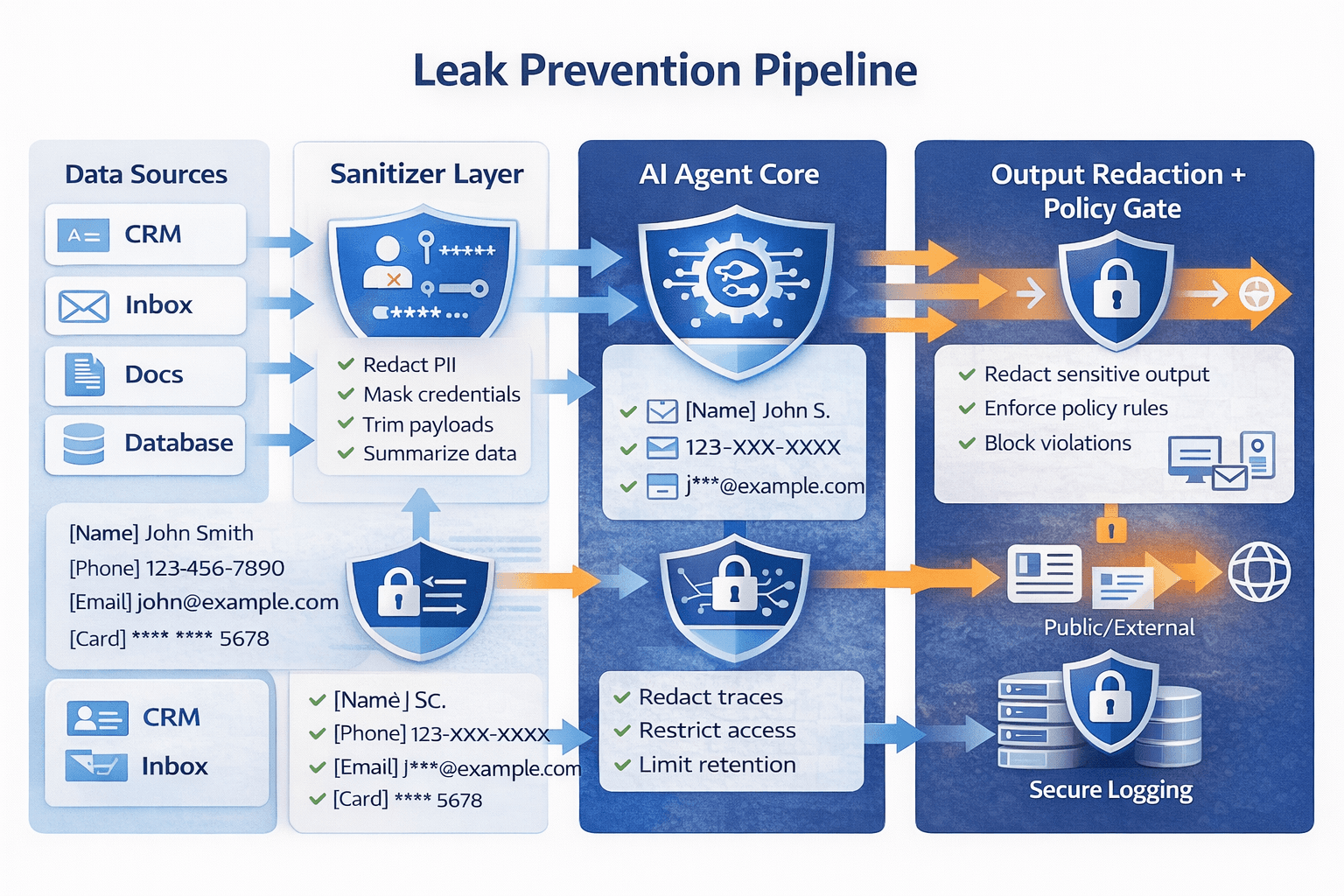

The prevention stack: how to stop leaks before they happen

Principle 1: Don’t put secrets in prompts

Prompts should contain instructions and minimal context, not full records.

Instead of feeding the agent raw CRM rows, pass:

- only the fields needed for the current step

- redacted identifiers unless absolutely necessary

- references to data, not the data itself
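
A minimal sketch of that idea, assuming a hypothetical `STEP_FIELDS` mapping from workflow step to the fields that step needs. The step name, field names, and the `crm:` reference format are all made up for illustration; they are not part of any real CRM API.

```python
from typing import Any

# Hypothetical mapping: which CRM fields each workflow step is allowed to see.
STEP_FIELDS: dict[str, set[str]] = {
    "draft_renewal_reminder": {"first_name", "plan", "renewal_date"},
}

def build_context(step: str, record: dict[str, Any]) -> dict[str, Any]:
    """Return only the fields the step needs, plus an opaque record reference."""
    allowed = STEP_FIELDS.get(step, set())  # unknown steps get no fields at all
    context = {k: v for k, v in record.items() if k in allowed}
    # A reference a tool can resolve later, instead of the full record.
    context["record_ref"] = f"crm:{record['id']}"
    return context

crm_row = {
    "id": "c_1042", "first_name": "Dana", "email": "dana@example.com",
    "phone": "+1-555-0100", "plan": "Pro", "renewal_date": "2026-03-01",
}
print(build_context("draft_renewal_reminder", crm_row))
```

The email and phone number never enter the prompt, so the model cannot repeat them later.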

Principle 2: Sanitize tool outputs before the model sees them

Put a “sanitizer layer” between tools and the agent.

Sanitizer jobs:

- redact PII fields the current step doesn’t need

- remove credentials and tokens

- truncate long payloads

- strip irrelevant sections

- convert payloads into minimal structured summaries

Your model should see “just enough to act,” not everything.
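
One way a sanitizer layer could look, as a rough sketch. The key names in `SECRET_KEYS` and `PII_KEYS` and the 200-character limit are assumptions; a real deployment would derive them from each tool’s actual schema.

```python
from typing import Any

# Assumed field names; adjust to the schema your tools actually return.
SECRET_KEYS = {"api_key", "token", "password", "authorization"}
PII_KEYS = {"email", "phone", "address"}
MAX_TEXT_LEN = 200  # arbitrary truncation limit for this sketch

def sanitize(payload: Any, needed: frozenset = frozenset()) -> Any:
    """Recursively drop secrets, redact unneeded PII, and truncate long strings."""
    if isinstance(payload, dict):
        clean = {}
        for key, value in payload.items():
            lowered = key.lower()
            if lowered in SECRET_KEYS:
                continue  # credentials never reach the model
            if lowered in PII_KEYS and lowered not in needed:
                clean[key] = "[REDACTED]"
            else:
                clean[key] = sanitize(value, needed)
        return clean
    if isinstance(payload, list):
        return [sanitize(item, needed) for item in payload]
    if isinstance(payload, str) and len(payload) > MAX_TEXT_LEN:
        return payload[:MAX_TEXT_LEN] + "...[truncated]"
    return payload

raw = {
    "name": "Dana",
    "email": "dana@example.com",
    "token": "abc123",
    "orders": [{"phone": "555-0100", "total": 42}],
}
print(sanitize(raw))
```

The agent calls the tool through `sanitize`, never directly. A step that genuinely needs the email passes `needed=frozenset({"email"})`.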

Principle 3: Redaction on output, always

Before any external message is sent, run redaction checks that detect:

- email addresses

- phone numbers

- addresses

- financial identifiers

- tokens and key-like patterns

Then block the message or require approval whenever a check triggers.
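
A sketch of such a check using plain regular expressions. The patterns are deliberately simple and illustrative: they will miss cases and produce false positives, the key prefixes are only examples, and postal addresses are left out because regexes handle them poorly. Treat this as a starting point, not a complete detector.

```python
import re

# Illustrative patterns only. Order matters: more specific detectors run first.
PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "key_like": re.compile(r"\b(?:sk|pk|ghp|AKIA)[A-Za-z0-9_-]{16,}\b"),
    "card_like": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
    "phone": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}

def redact(text: str) -> tuple[str, list[str]]:
    """Replace each match with a labeled placeholder and report which detectors fired."""
    hits = []
    for label, pattern in PATTERNS.items():
        text, count = pattern.subn(f"[{label.upper()} REDACTED]", text)
        if count:
            hits.append(label)
    return text, hits

def check_outbound(text: str) -> tuple[str, str, list[str]]:
    """Send clean messages as-is; route anything that triggered a detector to approval."""
    redacted, hits = redact(text)
    action = "needs_approval" if hits else "send"
    return action, redacted, hits

print(check_outbound("Contact dana@example.com or call +1 555 010 0199"))
```

Returning the list of detectors that fired, not just a yes/no, gives the human approver something concrete to review.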

Principle 4: Separate memory from raw data

If your agent has memory, do not store raw payloads. Store:

- preferences

- approved facts

- summarized workflow learnings

- stable policies

Memory should be curated, scoped, and expiring. Otherwise memory becomes your leak archive.
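
As a rough illustration, a memory store can enforce “curated, scoped, and expiring” in code. The `CuratedMemory` class, the allowed kinds, and the 280-character cap below are all hypothetical choices for this sketch.

```python
import time
from typing import Optional

# Assumed taxonomy of what memory may hold; raw payloads are not on the list.
ALLOWED_KINDS = {"preference", "approved_fact", "workflow_learning", "policy"}
MAX_VALUE_LEN = 280  # arbitrary cap that pushes callers toward summaries

class CuratedMemory:
    def __init__(self) -> None:
        # (scope, key) -> (value, expiry timestamp)
        self._items: dict[tuple[str, str], tuple[str, float]] = {}

    def remember(self, scope: str, kind: str, key: str, value: str,
                 ttl_seconds: float, now: Optional[float] = None) -> None:
        if kind not in ALLOWED_KINDS:
            raise ValueError(f"kind {kind!r} is not allowed in memory")
        if len(value) > MAX_VALUE_LEN:
            raise ValueError("value too long; store a summary, not a raw payload")
        now = time.time() if now is None else now
        self._items[(scope, key)] = (value, now + ttl_seconds)

    def recall(self, scope: str, key: str, now: Optional[float] = None) -> Optional[str]:
        now = time.time() if now is None else now
        entry = self._items.get((scope, key))
        if entry is None:
            return None
        value, expires_at = entry
        if now >= expires_at:
            del self._items[(scope, key)]  # expired entries are removed on read
            return None
        return value

memory = CuratedMemory()
memory.remember("tenant_a", "preference", "tone", "formal", ttl_seconds=3600)
print(memory.recall("tenant_a", "tone"))
```

Keying every entry by scope means one tenant’s memory is never returned for another tenant’s lookup.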

Principle 5: Lock down logs like they’re customer data

Agent logs should be treated as sensitive by default.

Best practice patterns:

- avoid logging raw prompts and raw tool payloads

- hash or redact sensitive fields before storage

- restrict log access with least privilege

- set retention limits

- separate dev logs from production logs

- audit access to logs

Logs are where leaks hide for months.
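
A sketch of the “hash or redact before storage” pattern using Python’s standard `logging` module. The `RedactingFilter` class and the `LOG_HASH_KEY` environment variable are assumptions for this example, and only email addresses are handled here; a real filter would cover more field types.

```python
import hashlib
import hmac
import logging
import os
import re

EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Assumed: the key comes from the environment; the fallback is for local demos only.
HASH_KEY = os.environ.get("LOG_HASH_KEY", "dev-only-key").encode()

def pseudonymize(match: re.Match) -> str:
    """Swap an email for a short keyed hash so log lines can still be correlated."""
    digest = hmac.new(HASH_KEY, match.group(0).encode(), hashlib.sha256).hexdigest()[:10]
    return f"<email:{digest}>"

class RedactingFilter(logging.Filter):
    def filter(self, record: logging.LogRecord) -> bool:
        # Render %-style arguments first so values passed as args are covered too.
        record.msg = EMAIL_RE.sub(pseudonymize, record.getMessage())
        record.args = None
        return True  # keep the record, now redacted

# Attaching the filter to the handler covers every record that handler emits.
handler = logging.StreamHandler()
handler.addFilter(RedactingFilter())
logger = logging.getLogger("agent")
logger.addHandler(handler)
logger.setLevel(logging.INFO)
logger.info("tool returned contact %s", "dana@example.com")
```

A keyed hash is used instead of a bare SHA-256 because unsalted hashes of emails can be reversed by guessing common addresses.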

Guardrails that actually work in production

Least privilege for tools

Agents should not have broad access. Use:

- read-only tools by default

- scoped credentials per workflow

- per-tenant segmentation

- time-limited tokens

- strict allowlists for actions
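
These constraints can be expressed as a small grant object checked before every tool call. This is a sketch under assumptions: `ToolGrant` and `authorize` are hypothetical names, and a real system would tie grants to its own credential issuer.

```python
import time
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class ToolGrant:
    workflow: str
    tenant: str
    expires_at: float  # Unix timestamp; grants are time-limited
    allowed_actions: frozenset = frozenset({"read"})  # read-only by default

def authorize(grant: ToolGrant, tenant: str, action: str,
              now: Optional[float] = None) -> bool:
    """Allow a call only if the grant is unexpired, same-tenant, and allowlisted."""
    now = time.time() if now is None else now
    return (
        now < grant.expires_at
        and tenant == grant.tenant
        and action in grant.allowed_actions
    )

grant = ToolGrant("invoice_lookup", "tenant_a", expires_at=time.time() + 900)
print(authorize(grant, "tenant_a", "read"))    # same tenant, allowlisted action
print(authorize(grant, "tenant_a", "delete"))  # action not on the allowlist
```

Because the default action set is `{"read"}`, a write or delete has to be granted explicitly per workflow.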

Action gating for anything external

Anything going out of your organization should be gated:

- emails

- public content

- external Slack/Teams messages

- reports exported to clients

High-stakes outputs should require either:

- human approval

- or strict redaction + policy validation
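
The gate itself can be a very small function. In this sketch the channel names and the `gate` signature are assumptions; the redaction hits and policy result are expected to come from whatever checks you already run.

```python
from enum import Enum

class Decision(Enum):
    SEND = "send"
    NEEDS_APPROVAL = "needs_approval"

# Assumed channel names for anything that leaves the organization.
EXTERNAL_CHANNELS = {"email", "public_post", "external_chat", "client_report"}

def gate(channel: str, redaction_hits: list[str], policy_ok: bool) -> Decision:
    """Internal traffic passes; external traffic must be clean or go to a human."""
    if channel not in EXTERNAL_CHANNELS:
        return Decision.SEND
    if redaction_hits or not policy_ok:
        return Decision.NEEDS_APPROVAL
    return Decision.SEND

print(gate("email", redaction_hits=["phone"], policy_ok=True))
print(gate("email", redaction_hits=[], policy_ok=True))
```

A stricter variant would send every external message to approval and use the automated checks only to prioritize the review queue.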

Policy-based output constraints

Enforce rules like:

- “Never include full customer identifiers in outbound messages”

- “Never include internal pricing unless explicitly requested by authorized users”

- “Never include tokens, secrets, keys, or access links”

Enforce these rules in code, the way a policy engine would, rather than relying on prompt instructions the model may ignore.
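
A toy version of rules-as-code, to show the shape. The rule names, the `CUST-` identifier format, and the `pricing_authorized` context flag are invented for this sketch, and the string matching is far cruder than a production policy engine would use.

```python
import re
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class Rule:
    name: str
    violates: Callable[[str, dict], bool]  # (outbound text, request context) -> bool

RULES = [
    # Hypothetical identifier format: "CUST-" followed by six or more digits.
    Rule("no_full_customer_id",
         lambda text, ctx: bool(re.search(r"\bCUST-\d{6,}\b", text))),
    Rule("no_internal_pricing",
         lambda text, ctx: "internal price" in text.lower()
         and not ctx.get("pricing_authorized", False)),
    Rule("no_secret_assignments",
         lambda text, ctx: bool(re.search(r"(?i)\b(?:api[_-]?key|secret|token)\s*[:=]", text))),
]

def evaluate(text: str, ctx: dict) -> list[str]:
    """Return the names of every rule the outbound text violates."""
    return [rule.name for rule in RULES if rule.violates(text, ctx)]

print(evaluate("Your account CUST-0012345 is active", {}))
```

The model never sees these rules and cannot be talked out of them; a non-empty result from `evaluate` blocks the message regardless of what the prompt said.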

The agency angle: this is a premium service

Many agencies build agents and walk away. That’s reckless.

A serious agency sells:

- secure agent design

- redaction and sanitization layers

- logging governance

- tenant isolation

- monthly privacy and security reviews

Clients will pay for “safe automation” because the alternative is legal, reputational, and operational pain.

Agent data leakage is not theoretical. It’s the default outcome of building fast without guardrails.

If you want agents that survive in real businesses, you need a prevention stack: sanitize inputs, constrain tools, redact outputs, and secure logs.

That’s how you build automation that scales without becoming a liability.

Neuronex Intel

System Admin