AI Agent Data Quality 2026: How to Stop Agents From Polluting Your CRM, Tickets, and Ops Systems

Why data pollution is the silent killer of agent automation

When agents fail loudly, you notice.

When agents fail quietly, they “work” while slowly ruining your systems:

- duplicate contacts and companies

- wrong deal stages

- incorrect ticket categories

- messy notes that nobody trusts

- missing required fields

- inconsistent formats across records

- phantom “updates” that overwrite good info

This doesn’t look like a model problem. It looks like “the CRM is a mess.”

And once the CRM becomes a mess, every automation gets worse.

If you want agents that scale, you need data quality controls.

The four ways agents pollute business systems

1) Duplicate creation

The agent can’t confidently match an existing record, so it creates a new one.

Then sales teams get three versions of the same person.

2) Field drift

The agent writes values that are “close enough” but not valid:

- inconsistent dates

- wrong currency formats

- free-text in enumerated fields

- country and address formats that break reporting

3) Overwriting good data with bad data

The agent replaces existing values with lower-confidence information because it’s trying to be helpful.

4) Fake certainty

The agent fills unknown fields with guesses.

This is the biggest hidden poison because it looks clean while being wrong.

The “Agent Write Policy” every system needs

The simplest fix is a write policy that defines what agents can and can’t write.

Your rules should look like this:

- Agents can write only to approved fields

- Agents must never overwrite “verified” fields

- Agents must never fill unknowns with guesses

- Agents must attach confidence and source to updates

- Agents must request approval when confidence is below threshold

- Agents must pass validation before any write is executed

This one policy stops most CRM pollution before it ever reaches a record.
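A minimal sketch of how the policy could be enforced in code, assuming each agent update arrives as one proposed write per field. The field names, the 0.8 threshold, and the `ProposedWrite` and `check_write_policy` names are hypothetical placeholders, not part of any CRM API; validation is assumed to run as a separate gate afterwards.

```python
from dataclasses import dataclass

# Hypothetical policy settings; replace with your own field list and threshold.
APPROVED_FIELDS = {"email", "phone", "job_title", "notes"}
APPROVAL_THRESHOLD = 0.8


@dataclass
class ProposedWrite:
    field: str
    value: object
    confidence: float  # 0.0 to 1.0, as reported by the agent
    source: str        # reference to the evidence, e.g. an email or call ID


def check_write_policy(write: ProposedWrite, verified_fields: set) -> str:
    """Return "allow", "needs_approval", or "reject: <reason>"."""
    if write.field not in APPROVED_FIELDS:
        return "reject: field not approved for agent writes"
    if write.field in verified_fields:
        return "reject: field is verified"
    # Empty values are refused outright; guesses are caught indirectly
    # by the source and confidence checks below.
    if write.value is None or write.value == "":
        return "reject: no value to write"
    if not write.source:
        return "reject: missing source"
    if write.confidence < APPROVAL_THRESHOLD:
        return "needs_approval"
    return "allow"  # still has to pass the validation gate before it executes
```

Hard rejections are checked before the approval threshold, so a low-confidence write to a verified field is refused outright instead of landing in someone’s queue to rubber-stamp.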

Use confidence tiers like a grown-up

Every agent-generated update should be labeled:

High confidence

- safe to write automatically

- Example: an email address extracted from a verified signature block.

Medium confidence

- write to “suggested” fields or require lightweight approval

- Example: a job title inferred from a LinkedIn snippet.

Low confidence

- do not write

- ask for confirmation

- Example: guessing company size, guessing location from context, guessing who the decision-maker is.

Agents should not be allowed to “complete” data by imagination.

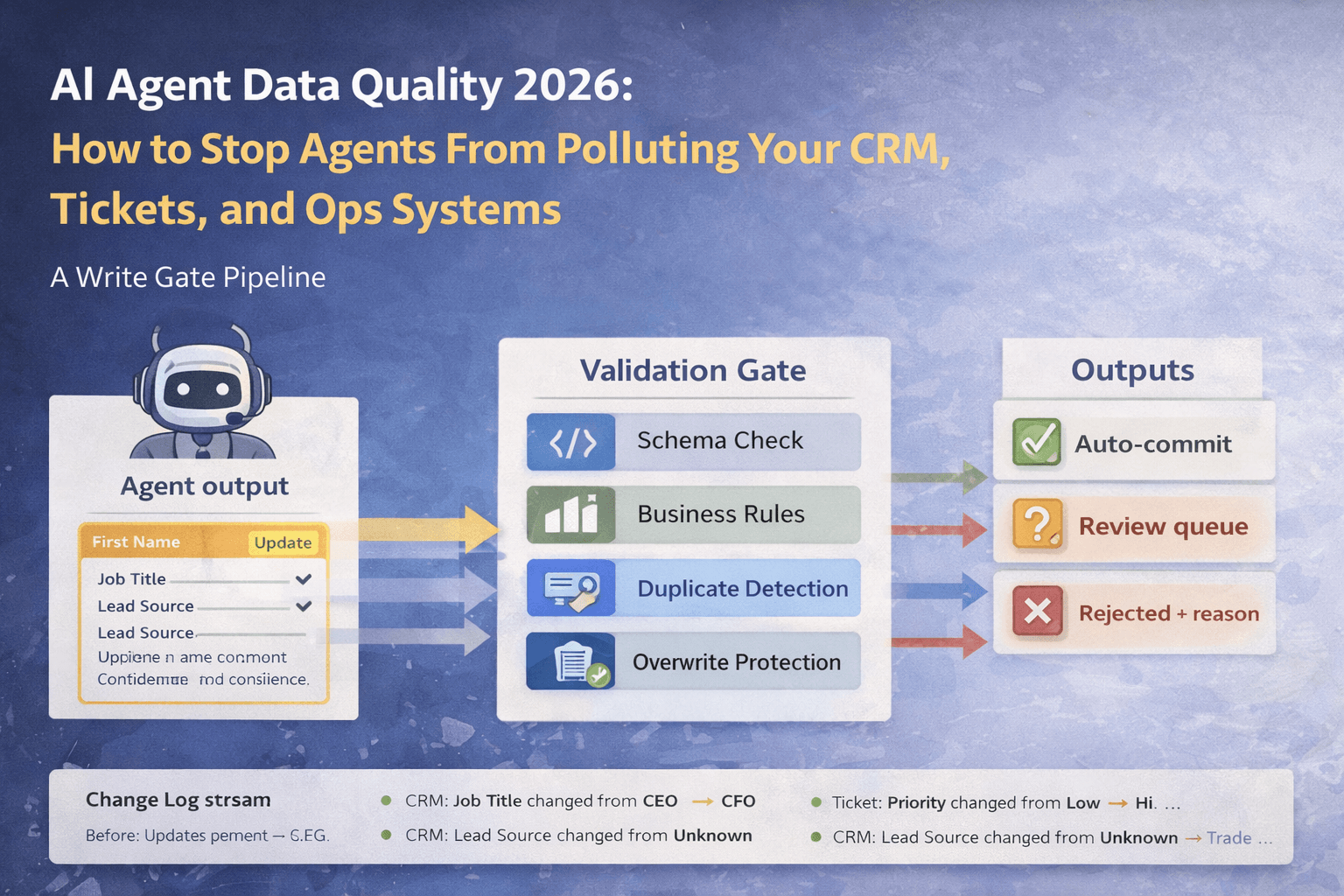
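The tiers above are defined by the kind of evidence, not by a number. If your agent emits a numeric confidence score, one way to map it onto the three tiers is sketched below. The 0.9 and 0.6 cut-offs and the `suggested_` field prefix are assumptions you would tune against your own review data.

```python
from enum import Enum


class Tier(Enum):
    HIGH = "high"      # safe to write automatically
    MEDIUM = "medium"  # write to a suggested field or ask for lightweight approval
    LOW = "low"        # do not write; ask for confirmation


# Hypothetical cut-offs; calibrate them against how often reviewers accept each tier.
HIGH_CUTOFF = 0.9
MEDIUM_CUTOFF = 0.6


def tier_for(confidence: float) -> Tier:
    if confidence >= HIGH_CUTOFF:
        return Tier.HIGH
    if confidence >= MEDIUM_CUTOFF:
        return Tier.MEDIUM
    return Tier.LOW


def route(field: str, value: str, confidence: float) -> dict:
    tier = tier_for(confidence)
    if tier is Tier.HIGH:
        return {"action": "write", "field": field, "value": value}
    if tier is Tier.MEDIUM:
        # Park the value in a parallel "suggested_" field; the real field is untouched.
        return {"action": "suggest", "field": f"suggested_{field}", "value": value}
    # Low confidence: nothing is written, and a human is asked instead.
    return {"action": "ask", "field": field, "question": f"Can you confirm the {field}?"}
```

A score alone is only as good as its calibration, so it helps to cap the score by evidence type as well: a value inferred from context should never reach the high tier, whatever the model reports.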

The validation gate that prevents garbage writes

Before any update hits your systems, validate:

Schema validation

- correct types

- correct formats

- required fields present

- enums only accept allowed values
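A schema check can be a plain function that runs before every write. This sketch assumes a hypothetical contact schema; the field names, the allowed stage values, and the loose email pattern are illustrative only. It returns a list of errors, and an empty list means the update passes.

```python
import re
from datetime import date

# Hypothetical contact schema: field -> (expected type, required, allowed values or None)
SCHEMA = {
    "email": (str, True, None),
    "lifecycle_stage": (str, True, {"lead", "qualified", "customer"}),
    "next_follow_up": (date, False, None),
    "annual_value": (int, False, None),
}
# Loose format patterns for string fields (illustrative, not RFC-complete).
FORMATS = {"email": re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")}


def validate_schema(update: dict) -> list:
    """Return a list of error strings; an empty list means the update passes."""
    errors = []
    for name, (expected_type, required, allowed) in SCHEMA.items():
        if name not in update:
            if required:
                errors.append(f"{name}: required field missing")
            continue
        value = update[name]
        if not isinstance(value, expected_type):
            errors.append(f"{name}: expected {expected_type.__name__}, got {type(value).__name__}")
        elif name in FORMATS and not FORMATS[name].fullmatch(value):
            errors.append(f"{name}: invalid format")
        elif allowed is not None and value not in allowed:
            errors.append(f"{name}: {value!r} is not an allowed value")
    # Reject fields the schema does not know about instead of silently writing them.
    for name in sorted(update.keys() - SCHEMA.keys()):
        errors.append(f"{name}: unknown field")
    return errors
```

Requiring `date` objects rather than strings is one way to stop the inconsistent-date drift described earlier: the agent has to parse a date before it is allowed to write one.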

Business validation

- deal stage can’t jump backward without a recorded reason

- ticket priority must match keywords or SLA rules

- invoice totals must match line-item math

- country must match allowed regions for that client
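Business rules vary by client, so treat the following as a shape rather than a rule set. It sketches three of the checks above, assuming a hypothetical stage order and string prices, and uses `Decimal` so invoice totals are compared exactly instead of through floating-point rounding.

```python
from decimal import Decimal

# Hypothetical pipeline order; stage names must already have passed schema validation.
STAGE_ORDER = ["prospect", "qualified", "proposal", "negotiation", "closed_won"]


def check_stage_change(old_stage: str, new_stage: str, reason: str = "") -> list:
    moved_back = STAGE_ORDER.index(new_stage) < STAGE_ORDER.index(old_stage)
    if moved_back and not reason.strip():
        return [f"deal stage moved backward ({old_stage} -> {new_stage}) without a reason"]
    return []


def check_invoice_total(line_items: list, stated_total: str) -> list:
    """line_items holds (quantity, unit_price) pairs; prices are strings so Decimal stays exact."""
    computed = sum((Decimal(qty) * Decimal(price) for qty, price in line_items), Decimal("0"))
    if computed != Decimal(stated_total):
        return [f"invoice total {stated_total} does not match line items ({computed})"]
    return []


def check_region(country: str, allowed_regions: set) -> list:
    if country not in allowed_regions:
        return [f"country {country!r} is not an allowed region for this client"]
    return []
```

Each check returns a list of errors in the same shape as the schema check, so the gate can simply concatenate them and reject or route the update when the combined list is non-empty.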

Duplicate checks

- email match = same contact

- domain match + company name similarity = probable same company

- phone match = same contact

- fuzzy matching for names only if combined with a second identifier
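The duplicate rules above could be sketched like this, using the standard-library `difflib.SequenceMatcher` for name similarity. The 0.85 and 0.8 thresholds, the digits-only phone normalization, and the choice of company domain as the second identifier are assumptions; a production system would more likely normalize phones to a full international format first.

```python
from difflib import SequenceMatcher

NAME_THRESHOLD = 0.85          # hypothetical; tune on known duplicate pairs
COMPANY_NAME_THRESHOLD = 0.8   # hypothetical


def normalize_phone(phone: str) -> str:
    # Digits only, so "+1 (555) 010-2000" and "1-555-010-2000" compare equal.
    return "".join(ch for ch in phone if ch.isdigit())


def similarity(a: str, b: str) -> float:
    return SequenceMatcher(None, a.strip().lower(), b.strip().lower()).ratio()


def match_contact(candidate: dict, existing: dict) -> str:
    """Return "same", "probable", or "different" for two contact records."""
    email = (candidate.get("email") or "").strip().lower()
    if email and email == (existing.get("email") or "").strip().lower():
        return "same"
    phone = normalize_phone(candidate.get("phone") or "")
    if phone and phone == normalize_phone(existing.get("phone") or ""):
        return "same"
    # A fuzzy name match only counts if a second identifier (company domain) agrees.
    name = candidate.get("name") or ""
    name_close = bool(name) and similarity(name, existing.get("name") or "") >= NAME_THRESHOLD
    domain = (candidate.get("company_domain") or "").lower()
    if name_close and domain and domain == (existing.get("company_domain") or "").lower():
        return "probable"
    return "different"


def match_company(candidate: dict, existing: dict) -> str:
    same_domain = candidate["domain"].lower() == existing["domain"].lower()
    if same_domain and similarity(candidate["name"], existing["name"]) >= COMPANY_NAME_THRESHOLD:
        return "probable"
    return "different"
```

Empty identifiers are skipped explicitly, so two records that both lack a phone number are never treated as a phone match.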

If validation fails, the update gets rejected or routed to approval.

The “suggest then commit” pattern

The best pattern for data writes is:

- Agent proposes updates in structured format

- System validates and scores risk

- Low-risk fields auto-commit

- Medium-risk fields go to a review queue

- High-risk actions require explicit approval

This keeps automation moving while preventing slow-motion system corruption.
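A minimal sketch of the suggest-then-commit loop, assuming each proposal targets one field and that a `validate` callback (for example, the validation gate described above) returns a list of errors. The per-field risk map, the 0.8 confidence floor, and the `Proposal` and `Outcome` types are hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical risk classes per field; anything unlisted is treated as high risk.
FIELD_RISK = {"notes": "low", "job_title": "medium", "deal_stage": "high", "deal_amount": "high"}
AUTO_COMMIT_MIN_CONFIDENCE = 0.8  # hypothetical


@dataclass
class Proposal:
    record_id: str
    field_name: str
    new_value: object
    confidence: float
    source: str


@dataclass
class Outcome:
    committed: list = field(default_factory=list)
    review_queue: list = field(default_factory=list)
    needs_approval: list = field(default_factory=list)
    rejected: list = field(default_factory=list)


def score_risk(p: Proposal) -> str:
    risk = FIELD_RISK.get(p.field_name, "high")
    # A low-confidence proposal never auto-commits, even on a low-risk field.
    if risk == "low" and p.confidence < AUTO_COMMIT_MIN_CONFIDENCE:
        risk = "medium"
    return risk


def process(proposals: list, validate) -> Outcome:
    """validate(proposal) returns a list of errors; an empty list means valid."""
    out = Outcome()
    for p in proposals:
        if validate(p):
            out.rejected.append(p)
            continue
        risk = score_risk(p)
        if risk == "low":
            out.committed.append(p)  # a real system would call the CRM write here
        elif risk == "medium":
            out.review_queue.append(p)
        else:
            out.needs_approval.append(p)
    return out
```

Defaulting unknown fields to high risk means a field nobody has classified can never auto-commit; it has to be reviewed into a lower tier deliberately.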

The non-negotiable: keep an immutable change log

If agents write to systems, you need:

- before value

- after value

- confidence score

- source reference

- agent identity

- timestamp

- approval status if applicable

This is how you:

- roll back mistakes

- explain changes

- debug drift

- prove governance to clients

No audit trail means no trust.
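One way to keep the change log immutable at the application level is to make each entry a frozen dataclass and only ever append. This in-memory sketch is illustrative: `ChangeLogEntry`, `ChangeLog`, and `apply_and_log` are hypothetical names, and durable immutability would have to come from the storage layer (append-only tables or write-once storage), not from Python.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)  # assigning to an attribute after creation raises FrozenInstanceError
class ChangeLogEntry:
    record_id: str
    field: str
    before: object
    after: object
    confidence: float
    source: str
    agent_id: str
    timestamp: datetime
    approval_status: Optional[str] = None  # None when the write was auto-committed


class ChangeLog:
    """Append-only, in-memory log: exposes no method to edit or delete entries."""

    def __init__(self):
        self._entries = []

    def record(self, entry: ChangeLogEntry) -> None:
        self._entries.append(entry)

    def entries(self) -> tuple:
        return tuple(self._entries)  # a copy, so callers cannot mutate the log

    def rollback_plan(self, agent_id: str, since: datetime) -> list:
        """(record_id, field, value) writes that undo one agent's changes, newest first."""
        undo = [e for e in self._entries if e.agent_id == agent_id and e.timestamp >= since]
        return [(e.record_id, e.field, e.before) for e in reversed(undo)]


def apply_and_log(record, record_id, field, value, confidence, source, agent_id, log,
                  approval_status=None):
    """Write one field on a dict-based record, logging before/after values first."""
    entry = ChangeLogEntry(
        record_id=record_id,
        field=field,
        before=record.get(field),
        after=value,
        confidence=confidence,
        source=source,
        agent_id=agent_id,
        timestamp=datetime.now(timezone.utc),
        approval_status=approval_status,
    )
    log.record(entry)  # logged before the write, so no change happens unrecorded
    record[field] = value
    return entry
```

Rolling back replays the `before` values newest first, so if an agent changed a field twice, the field ends at its original value. The rollback writes themselves should also go through `apply_and_log`, so the undo is audited like any other change.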

The agency offer this turns into

This is a premium “ops layer” offer.

You sell:

- field mapping + write policy setup

- validation gate implementation

- dedupe logic and merge rules

- confidence scoring + approval queue

- monthly hygiene reporting and cleanup

Clients love it because you’re solving the real fear: “this will mess up our CRM.”

Agents that can write without controls will eventually poison your systems.

If your CRM becomes unreliable, your automation becomes worthless.

Data quality gates, confidence tiers, dedupe rules, and audit logs turn agents into operators you can trust.

Neuronex Intel

System Admin