AI Agent Failure Recovery 2026: How to Design Agents That Don’t Loop, Panic, or Break Your Systems

Why failure recovery is the difference between a demo and production

In demos, tools work, inputs are clean, and the agent looks like it’s powered by destiny.

In production:

- APIs rate limit

- users send messy inputs

- documents are incomplete

- tools return partial data

- external systems change

- the agent misinterprets intent

- retries multiply until your bill screams

A production agent needs something most “agent builders” ignore: failure recovery design.

The 5 failure modes every agent hits

Tool failure

Timeouts, rate limits, schema mismatches, invalid responses.

Missing data

The user didn’t provide a key field, or the record is incomplete.

Ambiguity

Multiple valid interpretations of the request.

Policy conflict

The agent is asked to do something it shouldn’t do.

Looping

The agent retries because it thinks “one more step” will fix it.

If you don’t explicitly handle these, your agent will invent its own behavior. That behavior will be expensive and embarrassing.

The core rule: retries are not a strategy

The default “just try again” approach has no stop condition, so a failure that never clears turns into an unbounded loop.

A proper agent system needs:

- retry limits

- backoff rules

- failure classification

- fallback paths

- escalation triggers

If the agent fails twice in the same way, a third attempt is not optimism. It’s negligence.
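As a rough illustration of retry limits and backoff rules, here is a minimal Python sketch. The names `TransientError` and `call_with_retry`, and the default delays, are hypothetical and not taken from any particular agent framework. It retries only errors marked as transient, caps the number of attempts, and waits with capped exponential backoff plus random jitter.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (timeouts, rate limits)."""


def call_with_retry(
    fn: Callable[[], T],
    max_attempts: int = 2,  # assumption: two tries, never a third identical one
    base_delay: float = 0.5,
    max_delay: float = 8.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying only TransientError, with capped exponential backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except TransientError:
            if attempt == max_attempts:
                raise  # retry budget spent: the caller falls back or escalates
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, delay))  # jitter spreads out retry bursts
    raise AssertionError("unreachable")
```

Any other exception type propagates on the first failure, so deterministic errors are never retried by this helper.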

A production failure recovery framework

The framework has three steps: classify the failure, apply the matching recovery action, and record what happened.

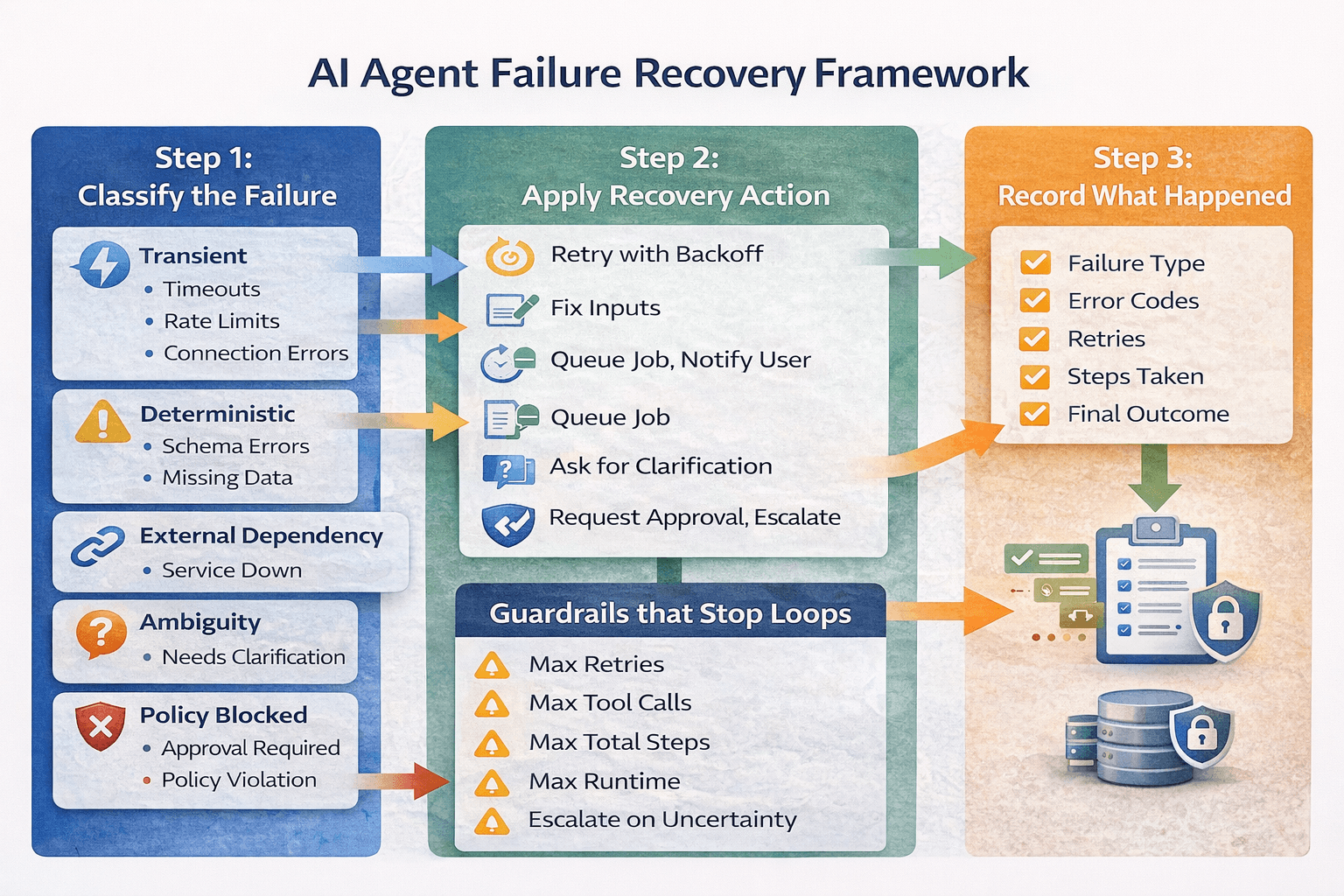

Step 1: Classify the failure

Every failure should map to a category:

- transient (timeouts, rate limits)

- deterministic (schema errors, missing fields)

- external dependency (service down)

- user ambiguity (needs clarification)

- policy blocked (requires approval or refusal)

Classification lets you choose the right recovery response.
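One way to make the categories above concrete is a small classifier. This is a sketch under assumptions: the function name, its parameters, and the mapping from HTTP status codes to categories are illustrative choices, and a real agent would classify on whatever signals its tools expose.

```python
from enum import Enum
from typing import Optional


class FailureType(Enum):
    TRANSIENT = "transient"
    DETERMINISTIC = "deterministic"
    EXTERNAL_DEPENDENCY = "external dependency"
    USER_AMBIGUITY = "user ambiguity"
    POLICY_BLOCKED = "policy blocked"


def classify_failure(
    status_code: Optional[int] = None,
    timed_out: bool = False,
    ambiguous_request: bool = False,
    policy_violation: bool = False,
) -> FailureType:
    """Map raw failure signals to one category (hypothetical mapping)."""
    if policy_violation:
        return FailureType.POLICY_BLOCKED
    if ambiguous_request:
        return FailureType.USER_AMBIGUITY
    # 408 Request Timeout and 429 Too Many Requests usually clear on their own.
    if timed_out or status_code in (408, 429):
        return FailureType.TRANSIENT
    # Assumption: any 5xx response means the upstream service is unhealthy.
    if status_code is not None and status_code >= 500:
        return FailureType.EXTERNAL_DEPENDENCY
    # Everything else (bad schema, missing field) will fail again if retried.
    return FailureType.DETERMINISTIC
```

Defaulting unknown failures to the deterministic category is a deliberate choice here: when in doubt, the agent stops retrying instead of looping.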

Step 2: Apply the correct recovery action

Different failures require different behaviors.

- Transient failures

  - retry with backoff

  - switch endpoints or alternate tools

  - reduce payload size

- Deterministic failures

  - stop retrying

  - fix inputs

  - enforce structured outputs

- External dependency failure

  - queue job for later

  - notify user with status

  - run a fallback workflow

- Ambiguity

  - ask one precise question

  - present a single recommended interpretation

  - require confirmation before execution

- Policy blocked

  - request approval

  - escalate to human

  - refuse execution with a safe alternative
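The mapping from failure category to recovery action can be kept as plain data instead of being left to the model. The sketch below is illustrative: the action names are hypothetical labels, and treating each list as an ordered sequence to work through is an assumption, not something the lists above prescribe.

```python
# Hypothetical playbook: category -> recovery actions, tried in order.
RECOVERY_PLAYBOOK: dict[str, tuple[str, ...]] = {
    "transient": ("retry_with_backoff", "switch_endpoint", "reduce_payload"),
    "deterministic": ("stop_retrying", "fix_inputs", "enforce_structured_output"),
    "external_dependency": ("queue_for_later", "notify_user", "run_fallback_workflow"),
    "user_ambiguity": ("ask_one_question", "recommend_interpretation", "require_confirmation"),
    "policy_blocked": ("request_approval", "escalate_to_human", "refuse_with_alternative"),
}


def next_action(failure_type: str, actions_tried: int) -> str:
    """Return the next recovery action, or escalate once the plan runs out."""
    plan = RECOVERY_PLAYBOOK.get(failure_type)
    if plan is None or actions_tried >= len(plan):
        # Unknown category or exhausted plan: hand off instead of improvising.
        return "escalate_to_human"
    return plan[actions_tried]
```

Because the plan is finite, the agent always reaches a handoff after a bounded number of recovery attempts.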

Step 3: Record what happened

If you don’t log failure reasons, you’ll repeat them forever.

Log:

- failure type

- tool name

- error codes

- payload size

- retry count

- recovery action taken

- final outcome

This is how you reduce failure rate over time.
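A structured record makes those fields queryable later. The following is a minimal sketch; the `FailureRecord` class, its field names, and the added `logged_at` timestamp are assumptions for illustration, and the output is one JSON line per failure.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class FailureRecord:
    failure_type: str
    tool_name: str
    error_code: Optional[str]
    payload_bytes: int
    retry_count: int
    recovery_action: str
    final_outcome: str


def to_log_line(record: FailureRecord) -> str:
    """Serialize one failure as a single JSON line with a UTC timestamp."""
    entry = asdict(record)
    entry["logged_at"] = datetime.now(timezone.utc).isoformat()
    return json.dumps(entry, sort_keys=True)


# Example with made-up values:
line = to_log_line(FailureRecord(
    failure_type="transient",
    tool_name="crm_lookup",  # hypothetical tool name
    error_code="429",
    payload_bytes=2048,
    retry_count=2,
    recovery_action="switch_endpoint",
    final_outcome="recovered",
))
```

With one line per failure, counting failures by type or by tool each week becomes a simple aggregation.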

How to stop agent loops permanently

Loops happen because the agent has no stop condition.

Add these controls:

- max tool calls per run

- max retries per tool

- max total steps per run

- max runtime per run

- “same error twice = escalate” rule

- “confidence below threshold = stop and ask” rule

Also design “terminal states” like:

- needs input

- needs approval

- queued for later

- failed safely

Agents need a place to land. Otherwise they spin.
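Several of these controls fit in one small guard object that the agent loop consults after every step. This is a sketch, not a reference implementation: `RunGuard`, its default limits, and the choice of which terminal state each rule maps to are all assumptions. It covers the step, tool call, and runtime budgets plus the two stop rules, and leaves per-tool retry limits to the retry logic itself.

```python
import time
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class TerminalState(Enum):
    NEEDS_INPUT = "needs input"
    NEEDS_APPROVAL = "needs approval"
    QUEUED_FOR_LATER = "queued for later"
    FAILED_SAFELY = "failed safely"


@dataclass
class RunGuard:
    max_steps: int = 20
    max_tool_calls: int = 10
    max_runtime_s: float = 120.0
    min_confidence: float = 0.6
    steps: int = 0
    tool_calls: int = 0
    last_error: Optional[str] = None
    started_at: float = field(default_factory=time.monotonic)

    def check(self, used_tool: bool = False, error: Optional[str] = None,
              confidence: float = 1.0) -> Optional[TerminalState]:
        """Record one step; return a terminal state if the run must stop."""
        self.steps += 1
        if used_tool:
            self.tool_calls += 1
        if confidence < self.min_confidence:
            return TerminalState.NEEDS_INPUT  # stop and ask
        if error is not None and error == self.last_error:
            # Same error on two consecutive steps: escalate to a human.
            return TerminalState.NEEDS_APPROVAL
        self.last_error = error
        elapsed = time.monotonic() - self.started_at
        if (self.steps > self.max_steps
                or self.tool_calls > self.max_tool_calls
                or elapsed > self.max_runtime_s):
            return TerminalState.FAILED_SAFELY
        return None  # keep going
```

The agent loop calls `check(...)` once per step and exits as soon as it returns anything other than `None`, so every run ends in a named state.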

Fallback strategies that actually work

A fallback is a controlled downgrade that preserves progress.

Examples:

- if retrieval fails, use a smaller set of trusted documents

- if a tool errors, switch to an alternate connector

- if a task is too complex, create a partial draft and escalate

- if an external API is down, queue the action and notify

The goal is not perfection. The goal is safe progress.
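A fallback chain can be expressed as an ordered list of named strategies, tried until one succeeds. The helper below is a hypothetical sketch: `run_with_fallbacks` and the strategy names in the example are invented for illustration, and catching every `Exception` is a simplification that a real system would likely narrow.

```python
from typing import Callable, List, Optional, Sequence, Tuple, TypeVar

T = TypeVar("T")


def run_with_fallbacks(
    strategies: Sequence[Tuple[str, Callable[[], T]]],
) -> Tuple[Optional[str], Optional[T], List[Tuple[str, str]]]:
    """Try each (name, fn) in order; return the first success plus the errors seen."""
    errors: List[Tuple[str, str]] = []
    for name, fn in strategies:
        try:
            return name, fn(), errors
        except Exception as exc:  # simplification: treats any error as a miss
            errors.append((name, repr(exc)))
    return None, None, errors  # nothing worked: the caller should escalate


def full_retrieval() -> List[str]:
    raise TimeoutError("vector store timed out")  # simulated failure


def trusted_docs_only() -> List[str]:
    return ["handbook.pdf", "pricing.md"]  # made-up document names


used, docs, errors = run_with_fallbacks([
    ("full_retrieval", full_retrieval),
    ("trusted_docs_only", trusted_docs_only),
])
```

Returning the name of the strategy that succeeded, along with the errors from the ones that did not, lets the agent tell the user which downgrade was applied.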

Escalation is a feature, not a failure

The best agents escalate early when risk is high or inputs are missing.

Escalate when:

- the agent is uncertain

- the action is irreversible

- the workflow deviates from normal

- tools are failing repeatedly

- policy conflicts appear

A good agent is confident when it should be, and cautious when it must be.
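The escalation triggers can be checked explicitly before any action runs. This sketch assumes a hypothetical `ActionContext` that the surrounding system fills in; the thresholds are placeholders to tune per workflow.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ActionContext:
    confidence: float               # agent's self-reported confidence, 0 to 1
    irreversible: bool              # e.g. sends money, deletes data
    deviates_from_normal: bool      # workflow left its expected path
    consecutive_tool_failures: int
    policy_conflict: bool


def escalation_reasons(
    ctx: ActionContext,
    min_confidence: float = 0.7,    # placeholder threshold
    max_tool_failures: int = 2,     # placeholder threshold
) -> List[str]:
    """Return every reason to escalate; an empty list means proceed."""
    reasons: List[str] = []
    if ctx.confidence < min_confidence:
        reasons.append("low confidence")
    if ctx.irreversible:
        reasons.append("irreversible action")
    if ctx.deviates_from_normal:
        reasons.append("workflow deviation")
    if ctx.consecutive_tool_failures >= max_tool_failures:
        reasons.append("repeated tool failures")
    if ctx.policy_conflict:
        reasons.append("policy conflict")
    return reasons
```

Returning the reasons, not just a yes or no, gives the human reviewer the context for the handoff.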

The agency angle: resilience sells retainers

Failure recovery is how you justify ongoing fees.

Your productized offer becomes:

- build agent workflow

- add resilience controls

- implement fallbacks and escalation paths

- monitor failures weekly

- reduce failure rate monthly

Clients don’t want “AI.” They want automation that doesn’t break at 2am.

Agents that don’t handle failure become loop machines: expensive, unreliable, and impossible to trust.

Design failure recovery from day one and your agent becomes a system: stable, safe, and scalable.

Neuronex Intel

System Admin