AI Agent Governance: The Operating Model That Stops Automation From Turning Into Chaos

Why governance matters more than “better agents”

Most businesses don’t fail with agents because the model is dumb. They fail because nobody owns the system.

Symptoms of no governance:

- agents get deployed by individual teams with no shared standards

- workflows overlap and conflict

- data gets updated inconsistently

- approvals are inconsistent across departments

- incidents happen and nobody knows who’s responsible

- the agent “works” but leadership doesn’t trust it

Governance is what turns agent automation into a real capability instead of scattered experiments.

What AI agent governance actually is

Agent governance is the operating model that defines:

- who can deploy agents

- what agents are allowed to do

- what data they can access

- what actions require approvals

- how changes get shipped safely

- how incidents are handled

- how performance is measured over time

It’s not paperwork. It’s guardrails plus accountability.

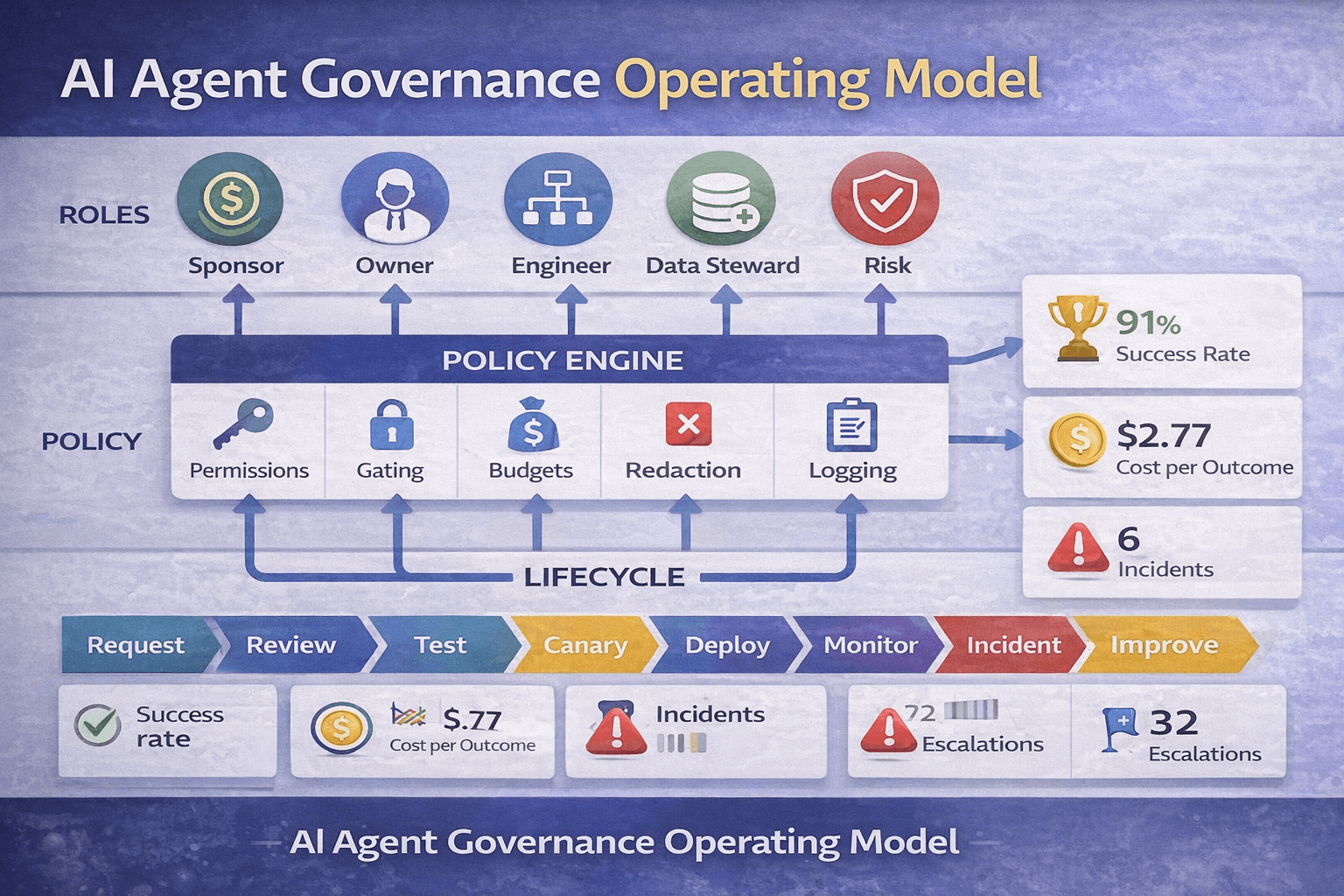

The five roles every agent program needs

If you don’t assign these roles, you’re building a mess.

Executive sponsor

Owns the business outcome and budget. Stops political fights.

Agent owner

Accountable for performance, risk, and ongoing changes.

Workflow engineer

Builds orchestration, tools, validations, and deployment logic.

Data steward

Owns entity definitions, field quality, dedupe logic, and “source of truth” rules.

Risk and compliance reviewer

Defines what needs gating, what’s disallowed, and what requires audit.

One person can wear multiple hats in a small org, but the responsibilities must exist.

The policy engine: the rules that control agent behavior

Governance becomes real when you implement policy as a system, not a document.

A policy engine defines:

- allowed tools per agent role

- read vs write restrictions

- high-risk action gating

- rate limits and budgets

- data redaction requirements

- tenant isolation rules

- logging and retention rules

If the policy is only “in the prompt,” it’s not governance. It’s wishful thinking.
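To make "policy as a system" concrete, here is a minimal sketch of a check that runs outside the prompt, before any tool call executes. Everything in it is an assumption for illustration: the `Policy` fields, the `check_action` function, the tool names, and the default budget of 20 calls are hypothetical, not part of any specific framework. It covers only three of the rules listed above (allowed tools, read vs write, and high-risk gating) plus a simple call budget.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    """Rules for one agent role. All field names are illustrative."""
    allowed_tools: frozenset[str]
    writable_tools: frozenset[str] = frozenset()
    gated_tools: frozenset[str] = frozenset()  # high-risk: need human approval
    max_calls_per_run: int = 20                # hypothetical default budget


@dataclass
class Decision:
    allowed: bool
    needs_approval: bool = False
    reason: str = ""


def check_action(policy: Policy, tool: str, is_write: bool, calls_so_far: int) -> Decision:
    """Evaluate one proposed tool call against the policy before it runs."""
    if tool not in policy.allowed_tools:
        return Decision(False, reason=f"tool '{tool}' not allowed for this role")
    if is_write and tool not in policy.writable_tools:
        return Decision(False, reason=f"tool '{tool}' is read-only for this role")
    if calls_so_far >= policy.max_calls_per_run:
        return Decision(False, reason="call budget exhausted")
    if tool in policy.gated_tools:
        return Decision(True, needs_approval=True, reason="high-risk action, approval required")
    return Decision(True, reason="ok")


# Hypothetical policy for a sales-support agent.
SALES_POLICY = Policy(
    allowed_tools=frozenset({"crm_lookup", "crm_update", "send_email"}),
    writable_tools=frozenset({"crm_update", "send_email"}),
    gated_tools=frozenset({"send_email"}),
)

decision = check_action(SALES_POLICY, "send_email", is_write=True, calls_so_far=2)
print(decision)
```

The design point is that the agent proposes an action and a separate piece of code decides whether it runs. The model can ignore an instruction in a prompt; it cannot ignore a denied call.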

The three-tier governance model that scales

Tier 1: Low-risk automation

- read-only retrieval

- drafting outputs

- internal summaries

Minimal governance overhead.

Tier 2: Controlled execution

- writes to systems with validation

- approvals for risky steps

This is where most businesses should live.

Tier 3: Autonomous execution

- agent runs end-to-end

- strict budgets, strict audit logs, strict monitoring

Only for workflows with low downside and high repetition.

The goal is controlled scale, not “full autonomy” for bragging rights.
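One way to keep tier assignment consistent across teams is to derive it from a few yes/no questions instead of debating it per workflow. The sketch below is an assumption about how that could look: the `Tier` enum, the `classify_workflow` function, and its four inputs are hypothetical names chosen to mirror the tier descriptions above.

```python
from enum import IntEnum


class Tier(IntEnum):
    LOW_RISK = 1    # read-only retrieval, drafts, internal summaries
    CONTROLLED = 2  # validated writes, approvals for risky steps
    AUTONOMOUS = 3  # end-to-end runs under strict budgets, logs, monitoring


def classify_workflow(writes_to_systems: bool, runs_unattended: bool,
                      low_downside: bool, high_repetition: bool) -> Tier:
    """Map a workflow's properties to a governance tier (illustrative rules)."""
    if not writes_to_systems:
        return Tier.LOW_RISK
    # Tier 3 is earned: unattended runs qualify only when the downside is
    # low and the workflow is highly repetitive.
    if runs_unattended and low_downside and high_repetition:
        return Tier.AUTONOMOUS
    # Everything else that writes lands in controlled execution.
    return Tier.CONTROLLED


print(classify_workflow(writes_to_systems=True, runs_unattended=True,
                        low_downside=False, high_repetition=True).name)
```

Under these rules, a workflow that wants to run unattended but carries real downside falls back to Tier 2, which matches the idea that controlled execution is the default.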

The governance workflow for shipping and changing agents

Agents change often. That’s why governance needs a shipping system.

A simple governance pipeline:

- request: new workflow or change proposal

- review: policy checks and risk scoring

- test: run the evaluation suite and check for cost regressions

- rollout: canary release with monitoring

- audit: record versions, approvals, and outcomes

You already have pieces of this in other posts. This is the operating model that ties them together.
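The pipeline stages can be sketched as a sequence of gates that each write to an audit trail. This is a simplified illustration under stated assumptions: the `ChangeRequest` fields, the gate functions, and every threshold (risk score of 7, 95% pass rate, 10% cost increase) are hypothetical placeholders, and the canary gate is a stub where real monitoring would go.

```python
from dataclasses import dataclass, field


@dataclass
class ChangeRequest:
    workflow: str
    version: str
    risk_score: int               # hypothetical 0-10 scale from the review step
    eval_pass_rate: float         # share of evaluation cases passed
    cost_per_run: float
    baseline_cost_per_run: float
    audit_log: list[str] = field(default_factory=list)


def review_gate(cr: ChangeRequest) -> bool:
    return cr.risk_score <= 7     # illustrative threshold


def eval_gate(cr: ChangeRequest) -> bool:
    # Block on failing evaluations or a cost increase above 10% (illustrative).
    return (cr.eval_pass_rate >= 0.95
            and cr.cost_per_run <= 1.10 * cr.baseline_cost_per_run)


def canary_gate(cr: ChangeRequest) -> bool:
    return True                   # stub: a real gate would watch canary metrics


STAGES = [("review", review_gate), ("test", eval_gate), ("rollout", canary_gate)]


def run_pipeline(cr: ChangeRequest) -> bool:
    """Run each gate in order, recording every result for the audit step."""
    cr.audit_log.append(f"request: {cr.workflow} {cr.version}")
    for name, gate in STAGES:
        passed = gate(cr)
        cr.audit_log.append(f"{name}: {'pass' if passed else 'fail'}")
        if not passed:
            return False
    return True


change = ChangeRequest("lead_followup", "v3", risk_score=3,
                       eval_pass_rate=0.80, cost_per_run=0.20,
                       baseline_cost_per_run=0.20)
print(run_pipeline(change), change.audit_log)
```

Note that the audit log is written on failure as well as success. A rejected change is still part of the record.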

Incident handling for agent failures

If your agent sends the wrong thing or updates the wrong record, what happens next?

Governance requires:

- defined severity levels

- automatic rollback capability where possible

- incident owner assigned immediately

- audit log review and root cause analysis

- policy updates to prevent recurrence

- follow-up: evaluation cases added so the same failure is caught in testing before it ships again

This is how you turn failures into compounding improvements instead of recurring nightmares.
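The requirements above can be enforced in code by refusing to open an incident without an owner and refusing to close one until the follow-ups are done. The sketch below is hypothetical throughout: the three severity labels, the `Incident` fields, and the rule that SEV1 and SEV2 request a rollback are assumptions for illustration, not a standard.

```python
from dataclasses import dataclass
from enum import Enum


class Severity(Enum):
    SEV1 = "customer-facing or irreversible"
    SEV2 = "wrong internal write, reversible"
    SEV3 = "cosmetic or caught before execution"


@dataclass
class Incident:
    workflow: str
    severity: Severity
    owner: str
    rollback_requested: bool = False
    root_cause: str = ""
    policy_updated: bool = False
    eval_case_added: bool = False

    def open_items(self) -> list[str]:
        """List the follow-ups still blocking closure."""
        items = []
        if not self.root_cause:
            items.append("root cause analysis")
        if not self.policy_updated:
            items.append("policy update")
        if not self.eval_case_added:
            items.append("evaluation case")
        return items

    def can_close(self) -> bool:
        return not self.open_items()


def open_incident(workflow: str, severity: Severity, owner: str) -> Incident:
    """Create an incident; an owner is mandatory from the first moment."""
    if not owner.strip():
        raise ValueError("an incident owner must be assigned immediately")
    incident = Incident(workflow, severity, owner)
    # Assumption: the two higher severities request a rollback by default.
    incident.rollback_requested = severity in (Severity.SEV1, Severity.SEV2)
    return incident


incident = open_incident("invoice_sync", Severity.SEV2, owner="dana")
print(incident.open_items())
```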

The scoreboard: what governance measures

Governance without metrics is theatre.

Track:

- outcomes (booked calls, tickets closed, tasks completed)

- success rate per workflow

- escalations and approvals

- rollbacks and incidents

- cost per successful outcome

- time-to-completion

- data quality impact (duplicates created, invalid writes blocked)

If metrics aren’t improving, your automation is not a system. It’s a hobby.
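A few of these metrics fall straight out of per-run records. The following is a minimal sketch, assuming a hypothetical `RunRecord` shape with one row per agent run; the field names and the `scoreboard` function are illustrative. It computes success rate, escalation rate, cost per successful outcome, and average run time.

```python
from dataclasses import dataclass


@dataclass
class RunRecord:
    workflow: str
    succeeded: bool
    escalated: bool
    cost_usd: float
    seconds: float


def scoreboard(runs: list[RunRecord]) -> dict[str, float]:
    """Aggregate run records into a few governance metrics."""
    if not runs:
        raise ValueError("no runs to score")
    total = len(runs)
    successes = sum(1 for r in runs if r.succeeded)
    total_cost = sum(r.cost_usd for r in runs)
    return {
        "success_rate": successes / total,
        "escalation_rate": sum(1 for r in runs if r.escalated) / total,
        # Failed runs still cost money, so all spend goes in the numerator.
        "cost_per_successful_outcome": (
            total_cost / successes if successes else float("inf")
        ),
        # Averaged over all runs, including failed ones.
        "avg_seconds_per_run": sum(r.seconds for r in runs) / total,
    }


runs = [
    RunRecord("book_call", True, False, 0.50, 10.0),
    RunRecord("book_call", True, False, 0.50, 20.0),
    RunRecord("book_call", True, True, 0.25, 30.0),
    RunRecord("book_call", False, False, 0.25, 40.0),
]
print(scoreboard(runs))
```

Dividing total spend by successful outcomes, not by total runs, is deliberate: a workflow that fails often looks cheap per run but expensive per result.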

The agency angle: governance is your retainer product

Clients don’t just need an agent. They need a program.

You sell:

- governance setup (roles, policy engine, approval design, audits)

- workflow standards (schemas, validations, deployment process)

- monitoring and incident response

- monthly optimization and expansion roadmap

That’s how you move from “build a thing” to “own the automation layer.”

AI agents don’t scale through intelligence alone. They scale through governance.

If you want automation that leadership trusts, you need an operating model: ownership, policy enforcement, controlled rollout, auditing, and measurable performance.

That’s governance. That’s how agents become infrastructure.

Neuronex Intel

System Admin