AI Agent Testing 2026: How to Build an Evaluation Harness That Prevents Silent Failures

Why agents fail silently

Normal software fails loudly. An endpoint throws an error. A test fails. A service goes down.

Agents fail quietly. They still produce output, but:

- the tool call is subtly wrong

- the extraction misses fields

- the plan drifts

- the message tone degrades

- the agent loops more than before

- cost per run doubles

- outcomes drop without anyone noticing

If you don’t test agents continuously, you’re basically running production on vibes.

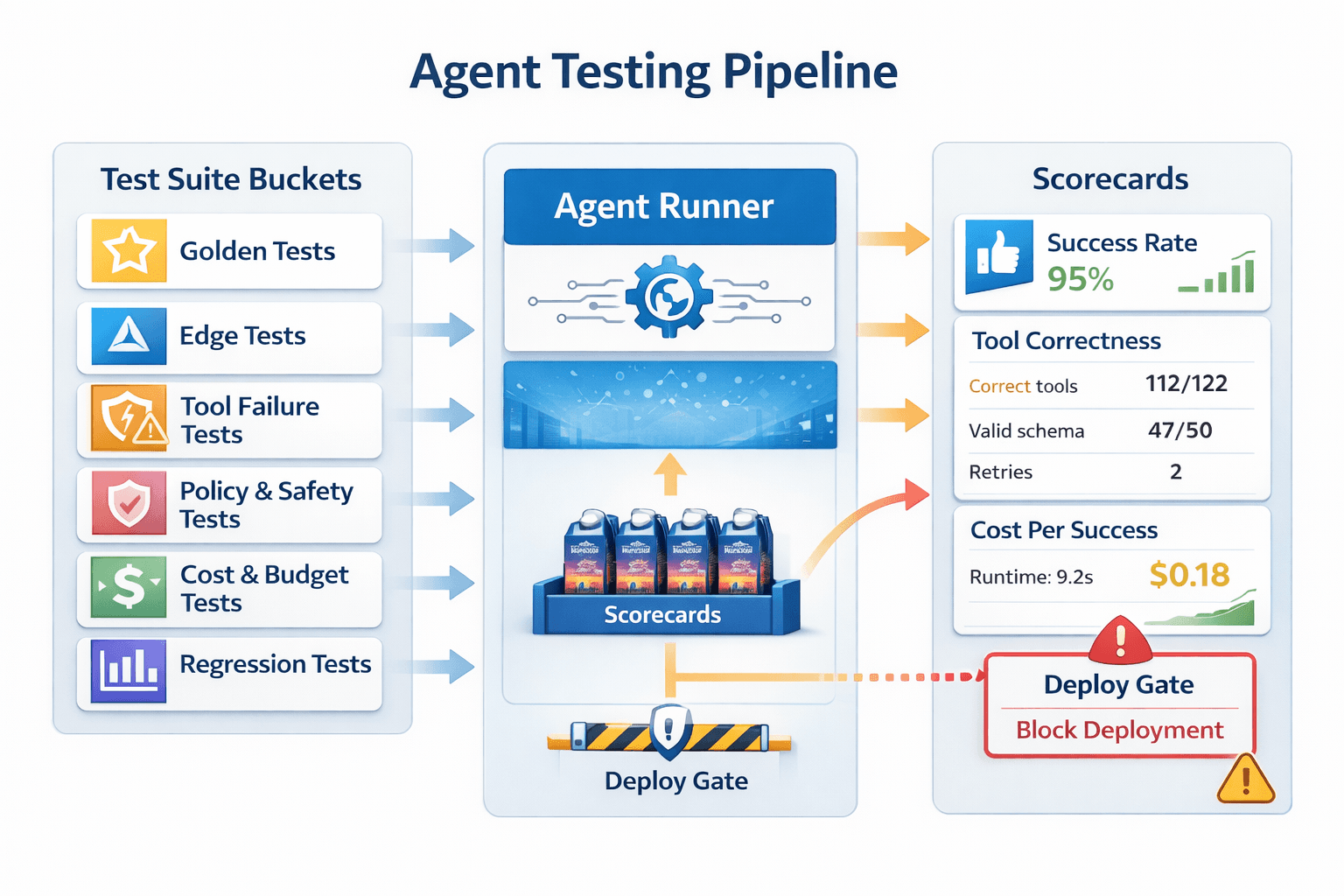

What an “agent evaluation harness” actually is

An evaluation harness is a repeatable test system that runs your agent against a fixed set of scenarios and scores the results the way a real QA process would.

It answers:

- did the agent complete the task correctly

- did it call the right tools in the right order

- did it stay within budgets

- did it follow policies

- did it produce valid structured outputs

- did it achieve the business outcome

This is not optional if you want agents that survive past week two.
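To make the idea concrete, here is a minimal Python sketch of the core loop: run each scenario, then answer the questions above with named pass/fail checks. Everything in it is hypothetical (`Scenario`, `RunResult`, `run_suite`, and the stand-in `fake_agent`); a real harness would call your actual agent and record far more detail per run.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class RunResult:
    """What the harness captures from one agent run (hypothetical shape)."""
    output: dict        # structured output produced by the agent
    tool_calls: list    # tool names, in the order they were called
    cost_usd: float     # total spend for the run


@dataclass
class Scenario:
    name: str
    input: str
    # Each check answers one harness question and returns True on pass.
    checks: dict = field(default_factory=dict)


def run_suite(agent: Callable[[str], RunResult], scenarios: list) -> dict:
    """Run every scenario once and record pass/fail for each named check."""
    report = {}
    for sc in scenarios:
        result = agent(sc.input)
        report[sc.name] = {name: bool(check(result)) for name, check in sc.checks.items()}
    return report


def fake_agent(text: str) -> RunResult:
    # Stand-in for a real agent so the sketch runs on its own.
    return RunResult(output={"category": "billing"},
                     tool_calls=["lookup_customer", "create_ticket"],
                     cost_usd=0.02)


scenarios = [
    Scenario(
        name="duplicate_charge",
        input="I was charged twice, please refund me.",
        checks={
            "task_correct": lambda r: r.output.get("category") == "billing",
            "right_tools_in_order": lambda r: r.tool_calls == ["lookup_customer", "create_ticket"],
            "within_budget": lambda r: r.cost_usd <= 0.05,
        },
    ),
]
report = run_suite(fake_agent, scenarios)
print(report)
```

Keeping checks as named functions means a failure report tells you which question failed, not just that "the agent got worse".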

The 6 test types every production agent needs

Golden path tests

The standard expected cases your agent should nail every time.

Edge case tests

Messy inputs: missing fields, ambiguous intent, broken formatting, weird attachments.

Tool failure tests

Simulate rate limits, timeouts, invalid responses, partial data returns.
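Tool failures are easiest to test with a wrapper that injects faults on a fixed schedule, so the same failure happens on every run. A minimal sketch, assuming your agent calls tools as plain Python callables; `FlakyTool`, `RateLimitError`, `lookup_order`, and `fetch_with_retry` are hypothetical names, with `fetch_with_retry` standing in for the agent logic under test.

```python
class RateLimitError(Exception):
    """Hypothetical stand-in for a 429 response from a real tool."""


class FlakyTool:
    """Wrap a tool and inject failures on a deterministic schedule.

    Each schedule entry covers one call: None calls the real tool, an
    Exception instance is raised, and any other value is returned as a
    fake (e.g. partial or malformed) response. Calls past the end of the
    schedule go to the real tool.
    """

    def __init__(self, real_fn, schedule):
        self.real_fn = real_fn
        self.schedule = list(schedule)
        self.calls = 0

    def __call__(self, *args, **kwargs):
        step = self.schedule[self.calls] if self.calls < len(self.schedule) else None
        self.calls += 1
        if step is None:
            return self.real_fn(*args, **kwargs)
        if isinstance(step, Exception):
            raise step
        return step


def lookup_order(order_id):
    return {"id": order_id, "status": "shipped"}


def fetch_with_retry(tool, order_id, max_attempts=3):
    """Agent-side logic under test: retry transient errors, reject bad payloads."""
    for _ in range(max_attempts):
        try:
            data = tool(order_id)
        except (RateLimitError, TimeoutError):
            continue
        if not isinstance(data, dict) or "status" not in data:
            return {"error": "invalid_response"}
        return data
    return {"error": "gave_up"}


# Rate limit, then timeout, then success: the agent should recover on attempt 3.
tool = FlakyTool(lookup_order, [RateLimitError("429"), TimeoutError("slow")])
print(fetch_with_retry(tool, "A1"), tool.calls)

# Partial payload missing "status": the agent should flag it, not pass it on.
print(fetch_with_retry(FlakyTool(lookup_order, [{"id": "A1"}]), "A1"))
```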

Policy and safety tests

Anything sensitive: approvals, restricted actions, forbidden outputs, privacy constraints.

Cost and budget tests

Token caps, tool call caps, retry limits, maximum runtime.
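Budget tests work best when the caps are enforced inside the run rather than checked afterwards, so a looping agent gets stopped instead of just reported. A minimal sketch under that assumption; `Budget`, `BudgetExceeded`, and `looping_agent` are hypothetical, and the `clock` parameter exists only so tests can fake the passage of time.

```python
import time


class BudgetExceeded(Exception):
    """Raised as soon as a run crosses one of its caps."""


class Budget:
    """Hypothetical per-run caps on tokens, tool calls, retries and runtime."""

    def __init__(self, max_tokens, max_tool_calls, max_retries, max_seconds, clock=time.monotonic):
        self.limits = {"tokens": max_tokens, "tool_calls": max_tool_calls, "retries": max_retries}
        self.used = {"tokens": 0, "tool_calls": 0, "retries": 0}
        self.max_seconds = max_seconds
        self.clock = clock
        self.started = clock()

    def charge(self, kind, amount=1):
        """Record usage of one resource and fail fast if its cap is exceeded."""
        self.used[kind] += amount
        if self.used[kind] > self.limits[kind]:
            raise BudgetExceeded(f"{kind}: {self.used[kind]} > {self.limits[kind]}")
        self.check_time()

    def check_time(self):
        elapsed = self.clock() - self.started
        if elapsed > self.max_seconds:
            raise BudgetExceeded(f"runtime: {elapsed:.1f}s > {self.max_seconds}s")


def looping_agent(budget):
    # Simulates the "agent loops more than before" failure: it never stops on its own.
    while True:
        budget.charge("tokens", 500)
        budget.charge("tool_calls")


budget = Budget(max_tokens=20_000, max_tool_calls=10, max_retries=2, max_seconds=60)
try:
    looping_agent(budget)
except BudgetExceeded as exc:
    print("stopped:", exc)
```

In a budget test, the assertion is that the run stops with `BudgetExceeded` at the expected cap rather than running until someone notices the bill.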

Regression tests

Run the same cases after any change: prompts, tools, models, retrieval, routing logic.
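A regression run is most useful when you compare it case by case with the last known-good run, because an aggregate pass rate can stay flat while one fix hides a new break. A minimal sketch, assuming each run is stored as a mapping from scenario id to pass/fail; `regression_diff` and the scenario ids are hypothetical.

```python
def regression_diff(baseline, candidate):
    """Compare per-scenario pass/fail between a known-good run and a candidate run.

    Both arguments map scenario id -> True (passed) / False (failed).
    """
    shared = baseline.keys() & candidate.keys()
    return {
        "newly_failing": sorted(s for s in shared if baseline[s] and not candidate[s]),
        "newly_passing": sorted(s for s in shared if not baseline[s] and candidate[s]),
        # Scenarios that silently dropped out of the suite are a regression too.
        "missing": sorted(baseline.keys() - candidate.keys()),
    }


# Both runs pass 3 of 4 scenarios, yet "cancel" broke.
baseline = {"refund": True, "cancel": True, "upgrade": False, "address_change": True}
candidate = {"refund": True, "cancel": False, "upgrade": True, "address_change": True}
print(regression_diff(baseline, candidate))
```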

What you should measure

If you measure the wrong thing, you optimize the wrong thing.

Track these per test run:

Outcome success

- completed vs failed

- partial success

- escalation required

- rollback required

Tool correctness

- correct tools called

- arguments valid

- schema valid

- tool call order correct

- retries and failure recovery
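Tool correctness checks like these can be automated against a recorded trace. A minimal sketch, assuming each tool call is logged as a dict with `name` and `args`, and that the expected plan lists tool names in order together with the argument types each call must carry; `check_tool_calls`, `search_crm`, and `update_status` are hypothetical. The repeat-call count is only a rough proxy for retries.

```python
def check_tool_calls(calls, expected):
    """Score one recorded trace against the expected tool plan.

    calls:    [{"name": str, "args": dict}, ...] in the order the agent made them
    expected: [(tool_name, {arg_name: type, ...}), ...] in the required order
    """
    names = [c["name"] for c in calls]
    expected_names = [name for name, _ in expected]

    # Order: expected tools must appear as an in-order subsequence of the trace.
    remaining = iter(names)
    order_ok = all(name in remaining for name in expected_names)

    # Arguments: the first call to each expected tool must carry every
    # required argument with the expected type.
    args_ok = True
    for name, required in expected:
        call = next((c for c in calls if c["name"] == name), None)
        if call is None or not all(isinstance(call["args"].get(k), t) for k, t in required.items()):
            args_ok = False

    return {
        "correct_tools": set(expected_names) <= set(names),
        "order_correct": order_ok,
        "args_valid": args_ok,
        "unexpected_tools": sorted(set(names) - set(expected_names)),
        "repeat_calls": len(names) - len(set(names)),  # rough proxy for retries
    }


trace = [
    {"name": "search_crm", "args": {"email": "a@example.com"}},
    {"name": "search_crm", "args": {"email": "a@example.com"}},  # retried once
    {"name": "update_status", "args": {"contact_id": 42, "status": "qualified"}},
]
expected = [("search_crm", {"email": str}),
            ("update_status", {"contact_id": int, "status": str})]
print(check_tool_calls(trace, expected))
```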

Output validity

- structured output parses

- required fields present

- formatting correct

- policy compliance
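Output validity checks are cheap enough to run on every output. A minimal sketch using only the standard library, assuming the agent is asked to return a JSON object; the required fields, the ISO date rule, and the banned phrases are hypothetical placeholders for your own schema and policies. A real setup might use a JSON Schema validator instead of hand-written type checks.

```python
import json
import re

# Hypothetical schema and policy rules for an invoice-extraction agent.
REQUIRED = {"customer_name": str, "invoice_total": (int, float), "due_date": str}
ISO_DATE = re.compile(r"\d{4}-\d{2}-\d{2}")
BANNED_PHRASES = ("guaranteed refund", "legal advice")


def check_output(raw: str) -> dict:
    """Return pass/fail for parsing, required fields, formatting and policy."""
    checks = {"parses": False, "required_fields": False, "formatting": False, "policy": False}
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return checks  # not JSON at all: everything fails
    if not isinstance(data, dict):
        return checks
    checks["parses"] = True
    checks["required_fields"] = all(isinstance(data.get(k), t) for k, t in REQUIRED.items())
    due = data.get("due_date")
    checks["formatting"] = isinstance(due, str) and ISO_DATE.fullmatch(due) is not None
    checks["policy"] = not any(phrase in raw.lower() for phrase in BANNED_PHRASES)
    return checks


print(check_output('{"customer_name": "Acme", "invoice_total": 1200.5, "due_date": "2026-03-01"}'))
print(check_output('{"customer_name": "Acme", "due_date": "March 1"}'))
print(check_output("Sure! Here is the JSON you asked for."))
```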

Reliability and drift

- success rate changes over time

- increased retries

- increased escalations

- changed tool patterns

Cost per success

This is the killer metric:

- cost per run

- cost per successful outcome

- time per completion

- cache hit rates if you use caching

If cost per success worsens, your “upgrade” was actually a downgrade, even if each individual run got cheaper.
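The arithmetic behind cost per success is simple but worth writing down, because failed runs still cost money and get charged to the successes. A minimal sketch with illustrative numbers only: version B is cheaper per run than version A, yet more expensive per successful outcome. `Run` and `cost_metrics` are hypothetical names, and time per completion here is the mean runtime of successful runs.

```python
from dataclasses import dataclass


@dataclass
class Run:
    cost_usd: float
    success: bool
    seconds: float


def cost_metrics(runs):
    """Summarize one test run; failed runs count toward cost but not toward successes."""
    successes = sum(r.success for r in runs)
    total_cost = sum(r.cost_usd for r in runs)
    inf = float("inf")
    return {
        "success_rate": successes / len(runs),
        "cost_per_run": total_cost / len(runs),
        "cost_per_success": total_cost / successes if successes else inf,
        "seconds_per_completion": (
            sum(r.seconds for r in runs if r.success) / successes if successes else inf
        ),
    }


# Illustrative numbers: 10 runs each.
version_a = [Run(cost_usd=0.04, success=i < 9, seconds=20.0) for i in range(10)]  # 9/10 succeed
version_b = [Run(cost_usd=0.03, success=i < 6, seconds=15.0) for i in range(10)]  # 6/10 succeed

for label, runs in [("A", version_a), ("B", version_b)]:
    m = cost_metrics(runs)
    print(label, {k: round(v, 4) for k, v in m.items()})
```

Version B costs $0.03 per run against $0.04, but $0.05 per success against roughly $0.044, so on the metric that matters it is the downgrade.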

How to build the harness in practice

Step 1: Capture real scenarios

Pull 30 to 100 real tasks from production:

- typical requests

- borderline requests

- failures you already saw
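Once the tasks are pulled, it helps to store them in a simple file format and check the mix before running anything. A minimal sketch, assuming one JSON object per line with hypothetical `id`, `input`, and `kind` fields, where `kind` tags each case as typical, borderline, or a known failure. In practice `lines` would come from opening a file such as `scenarios.jsonl` (also a hypothetical name).

```python
import json
from collections import Counter

KINDS = {"typical", "borderline", "known_failure"}


def load_scenarios(lines):
    """Parse one scenario per line and validate the fields the harness relies on."""
    scenarios = []
    for lineno, line in enumerate(lines, start=1):
        if not line.strip():
            continue  # tolerate blank lines
        sc = json.loads(line)
        missing = {"id", "input", "kind"} - set(sc)
        if missing:
            raise ValueError(f"line {lineno}: missing fields {sorted(missing)}")
        if sc["kind"] not in KINDS:
            raise ValueError(f"line {lineno}: unknown kind {sc['kind']!r}")
        scenarios.append(sc)
    ids = [sc["id"] for sc in scenarios]
    if len(ids) != len(set(ids)):
        raise ValueError("duplicate scenario ids")
    return scenarios


sample = [
    '{"id": "t-001", "input": "Reset my password", "kind": "typical"}',
    '{"id": "b-001", "input": "Cancel, or maybe pause? Not sure", "kind": "borderline"}',
    '{"id": "f-001", "input": "Invoice attached as a photo of a screen", "kind": "known_failure"}',
]
scenarios = load_scenarios(sample)
print(Counter(sc["kind"] for sc in scenarios))
```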

Step 2: Define success criteria per scenario

Not “looks good.” Actual checks:

- required fields extracted

- correct status written to the CRM

- correct ticket category assigned

- message meets tone rules

- approval requested when required
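Criteria like these are easiest to maintain as small named checks over the end state of a run, so a failure report says exactly which rule broke. A minimal sketch, assuming the harness can read the final state (extracted fields, CRM status, ticket category, outgoing message, approval flag) into a dict; the field names, the 600-character tone limit, and the rule that refunds over 100 need approval are all hypothetical.

```python
def refund_criteria():
    """Hypothetical criteria for one refund scenario: name -> check on the final state."""
    return {
        "required_fields_extracted": lambda s: {"order_id", "amount"} <= set(s["extracted"]),
        "crm_status_written": lambda s: s["crm_status"] == "refund_pending",
        "ticket_category": lambda s: s["ticket_category"] == "billing",
        # Example tone rules: short, and no exclamation marks.
        "tone_rules": lambda s: len(s["message"]) <= 600 and "!" not in s["message"],
        # Refunds above 100 must trigger an approval request.
        "approval_when_required": lambda s: s["approval_requested"] or s["extracted"].get("amount", 0) <= 100,
    }


def score(state, criteria):
    """Evaluate every criterion; returns criterion name -> pass/fail."""
    return {name: bool(check(state)) for name, check in criteria.items()}


final_state = {
    "extracted": {"order_id": "A-17", "amount": 250},
    "crm_status": "refund_pending",
    "ticket_category": "billing",
    "message": "Thanks, we have logged your refund request and will update you within 2 business days.",
    "approval_requested": False,
}
results = score(final_state, refund_criteria())
print([name for name, ok in results.items() if not ok])
```

Here everything looks fine except that a 250 refund went out without approval, which is exactly the kind of failure "looks good" review misses.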

Step 3: Run the agent in a controlled mode

- fixed prompt version

- fixed tool schemas

- fixed retrieval settings

- deterministic tool mocks where possible
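One way to pin those settings down is to keep them in a single frozen config whose hash is stored with every result, and to replace live tools with replay mocks that return recorded responses. A minimal sketch; `RunConfig`, `ReplayTool`, `call_key`, the field names, and `model-x` are hypothetical, and the `model` and `temperature` fields are assumptions beyond the list above. A temperature of 0 reduces variation but does not guarantee identical model outputs on every provider.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class RunConfig:
    prompt_version: str
    tool_schema_version: str
    retrieval_top_k: int
    model: str
    temperature: float = 0.0

    def fingerprint(self) -> str:
        """Short, stable hash tying every stored result to this exact setup."""
        blob = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(blob).hexdigest()[:12]


def call_key(kwargs) -> str:
    # Canonical key for a tool call: same arguments, same key.
    return json.dumps(kwargs, sort_keys=True)


class ReplayTool:
    """Deterministic tool mock that returns recorded responses by arguments."""

    def __init__(self, recordings):
        self.recordings = recordings

    def __call__(self, **kwargs):
        key = call_key(kwargs)
        if key not in self.recordings:
            # Fail loudly rather than silently reaching a live system.
            raise KeyError(f"no recording for {key}")
        return self.recordings[key]


cfg = RunConfig(prompt_version="support-v14", tool_schema_version="2026-01",
                retrieval_top_k=5, model="model-x")
print(cfg.fingerprint())

crm_lookup = ReplayTool({call_key({"email": "a@example.com"}): {"contact_id": 42}})
print(crm_lookup(email="a@example.com"))
```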

Step 4: Score and store results

Store:

- run traces

- outputs

- tool calls

- costs

- pass/fail per criterion
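An append-only JSON Lines file is one simple way to store these, one record per scenario run, so results stay diffable and easy to reload. A minimal sketch; `store_result`, `load_results`, and the record fields are hypothetical, and a team with more volume might use a database or a tracing tool instead.

```python
import json
import tempfile
import time
from pathlib import Path


def store_result(path, *, scenario_id, config_id, trace, output, tool_calls, cost_usd, criteria):
    """Append one run as a JSON line. criteria maps criterion name -> bool."""
    record = {
        "ts": time.time(),
        "scenario_id": scenario_id,
        "config_id": config_id,  # e.g. a hash of prompt/tool/retrieval versions
        "trace": trace,
        "output": output,
        "tool_calls": tool_calls,
        "cost_usd": cost_usd,
        "criteria": criteria,
        # A run with no criteria should not count as a pass.
        "passed": bool(criteria) and all(criteria.values()),
    }
    with Path(path).open("a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
    return record


def load_results(path):
    with Path(path).open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


with tempfile.TemporaryDirectory() as tmp:
    results_file = Path(tmp) / "results.jsonl"
    store_result(results_file, scenario_id="refund-001", config_id="a1b2c3",
                 trace=["plan", "search_crm", "update_status"],
                 output={"status": "refund_pending"},
                 tool_calls=[{"name": "search_crm", "args": {"email": "a@example.com"}}],
                 cost_usd=0.031,
                 criteria={"crm_status_written": True, "tone_rules": False})
    for row in load_results(results_file):
        print(row["scenario_id"], row["passed"], row["criteria"])
```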

Step 5: Add a gate to deployment

If regression tests fail, you don’t ship changes. Simple.
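In CI, that gate can be a short script that reads the latest results and blocks the deploy when the suite does not clear its thresholds. A minimal sketch, assuming result records with hypothetical `scenario_id`, `passed`, and `cost_usd` fields; `gate` and both thresholds are hypothetical, and a pass-rate floor of 100% matches the "if tests fail, don't ship" rule.

```python
def gate(results, min_pass_rate=1.0, max_cost_per_success=None):
    """Return a list of reasons to block the deploy; an empty list means ship."""
    if not results:
        return ["no results: the suite did not run"]
    reasons = []
    passed = [r for r in results if r["passed"]]
    rate = len(passed) / len(results)
    if rate < min_pass_rate:
        failing = sorted(r["scenario_id"] for r in results if not r["passed"])
        reasons.append(f"pass rate {rate:.0%} < {min_pass_rate:.0%}; failing: {failing}")
    if max_cost_per_success is not None:
        total = sum(r["cost_usd"] for r in results)
        cps = total / len(passed) if passed else float("inf")
        if cps > max_cost_per_success:
            reasons.append(f"cost per success ${cps:.3f} > ${max_cost_per_success:.3f}")
    return reasons


results = [
    {"scenario_id": "refund", "passed": True, "cost_usd": 0.04},
    {"scenario_id": "cancel", "passed": False, "cost_usd": 0.09},
    {"scenario_id": "upgrade", "passed": True, "cost_usd": 0.05},
]
for reason in gate(results, min_pass_rate=1.0, max_cost_per_success=0.08):
    print("BLOCKED:", reason)
```

The CI step then only needs to exit non-zero when any reason is returned (for example `sys.exit(1 if reasons else 0)`), which is what actually stops the change from shipping.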

Why this is a goldmine for agencies

This becomes a premium service fast.

Most clients don’t just need an agent. They need:

- confidence it won’t break

- confidence cost won’t explode

- confidence compliance rules won’t be violated

- confidence performance improves over time

So you sell:

- “Agent QA + Regression Suite Setup” (one-time build)

- “Monthly Monitoring + Optimization” (retainer)

This is the difference between being a builder and being the operator they keep.

If you don’t test agents, you don’t have automation. You have a risk generator.

An evaluation harness is how you catch drift, prevent silent failures, control cost, and scale agent systems like real software.

Neuronex Intel

System Admin