DeepSeek V3.2: Open Sparse Attention That Punches At GPT-5 Levels

DeepSeek V3.2 is the open model everyone keeps pretending not to notice, mostly because it makes a lot of closed systems look overpriced.

The V3.2 and V3.2-Speciale releases build on DeepSeek’s earlier V3 line with a new sparse attention mechanism, long-context support and strong reasoning performance. Independent coverage has framed V3.2 as “rivaling GPT 5” in coding and math, while running at lower cost thanks to more efficient attention and infrastructure support.

DeepSeek Sparse Attention and long context

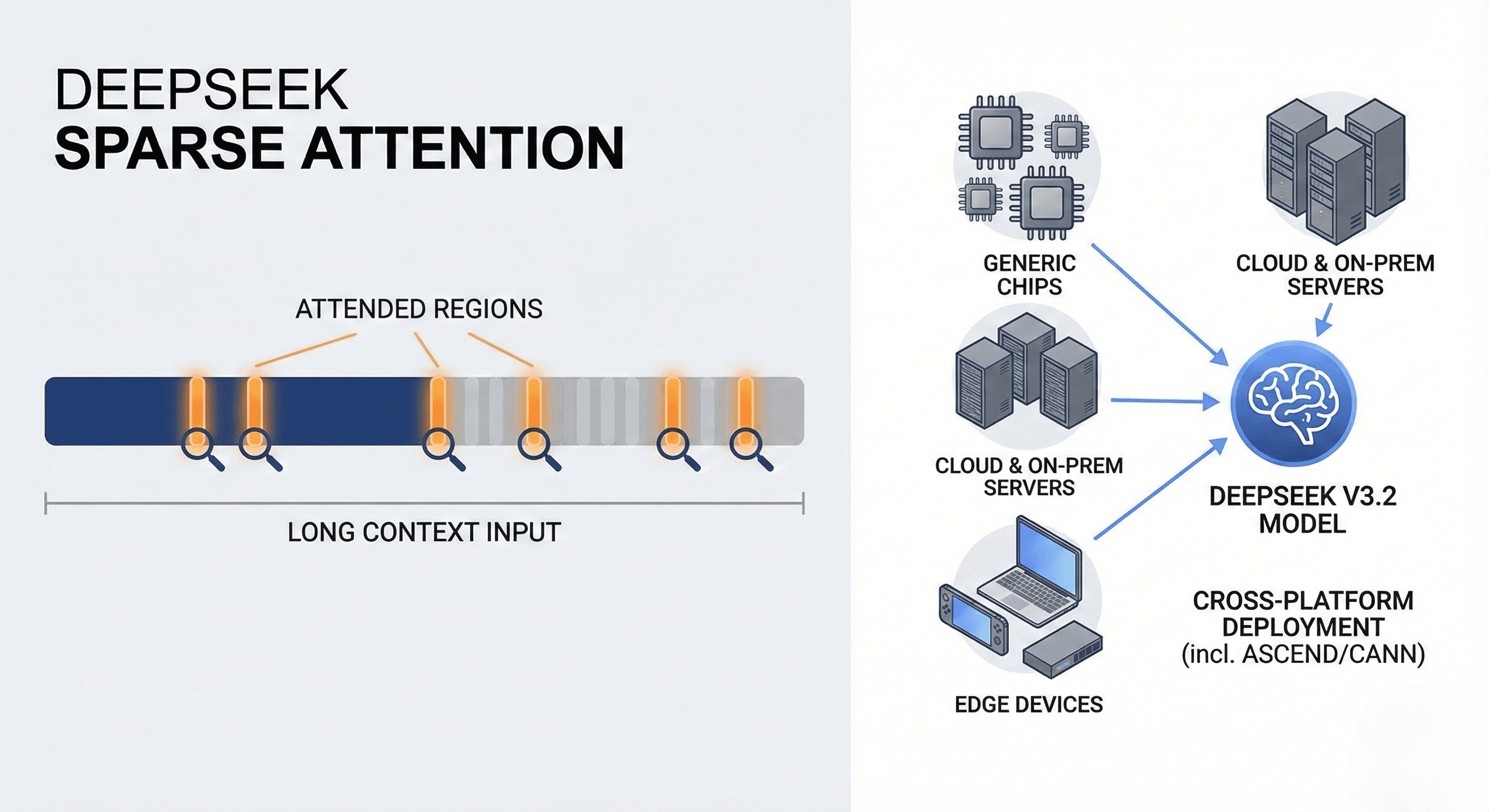

The key technical move in V3.2 is DeepSeek Sparse Attention (DSA). Instead of attending to every token, the model learns to focus on the most relevant parts of long inputs, cutting compute and memory use while preserving accuracy.

This matters because:

It enables long-context inference without needing absurd amounts of hardware.

It keeps latency and cost manageable for workloads like large-document analysis and multi-step tool use.

It opens the door to deployment on non-Nvidia hardware, including domestic chips in China via platforms like Huawei Ascend and CANN.
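To make the "attend only to the most relevant tokens" idea concrete, here is a minimal sketch of generic top-k sparse attention for a single query, in plain Python. It is not DeepSeek's implementation: the function names are hypothetical, and this toy version picks tokens using the attention scores themselves, whereas a production system would need a cheaper selection step to actually save compute.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    peak = max(xs)
    exps = [math.exp(x - peak) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def topk_sparse_attention(query, keys, values, k):
    """Attend only to the k keys that score highest for this query.

    Hypothetical helper for illustration. Returns the attention output
    and the (sorted) indices of the tokens that were kept.
    """
    scale = 1.0 / math.sqrt(len(query))
    scores = [dot(query, key) * scale for key in keys]

    # Clamp k so that at least one and at most all tokens are selected.
    k = max(1, min(k, len(keys)))
    selected = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:k]

    # Softmax runs over the selected tokens only; the rest get zero weight.
    weights = softmax([scores[i] for i in selected])
    dim = len(values[0])
    output = [
        sum(w * values[i][d] for w, i in zip(weights, selected))
        for d in range(dim)
    ]
    return output, sorted(selected)
```

With `k` equal to the sequence length this reduces to ordinary dense attention; shrinking `k` is what trades a little recall for lower compute and memory on long inputs.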

Tool use with integrated thinking

DeepSeek V3.2 is also positioned as an agent-friendly model. Official docs describe it as the first in its line to integrate “thinking in tool use”, letting the model reason explicitly while it calls APIs, tools and external services.

In practice, this means:

Better planning before tool calls.

Fewer broken tool invocations and mismatched schemas.

More coherent multi-step workflows for coding, research and automation.

For an AI agency, that is exactly the behavior you want when wiring a model into systems like n8n, custom backends or multi-agent frameworks.
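Even with a model that plans its tool calls well, it is worth checking every call before executing it. The sketch below shows one way to do that, assuming the common pattern where the model returns a tool name plus a JSON string of arguments. The `TOOL_SCHEMAS` registry, the `search_docs` tool and the `validate_tool_call` function are all hypothetical names for illustration, not part of any DeepSeek or n8n API.

```python
import json

# Hypothetical tool registry: tool name -> expected argument names and types.
TOOL_SCHEMAS = {
    "search_docs": {"query": str, "limit": int},
}

def validate_tool_call(name, raw_arguments):
    """Check a model-emitted tool call against the registry.

    Returns (args, errors). args is None whenever errors is non-empty,
    so the caller can feed the errors back to the model instead of
    executing a broken call.
    """
    if name not in TOOL_SCHEMAS:
        return None, [f"unknown tool: {name}"]

    try:
        args = json.loads(raw_arguments)
    except json.JSONDecodeError as exc:
        return None, [f"arguments are not valid JSON: {exc.msg}"]

    if not isinstance(args, dict):
        return None, ["arguments must be a JSON object"]

    schema = TOOL_SCHEMAS[name]
    errors = []
    for field, expected in schema.items():
        if field not in args:
            errors.append(f"missing field: {field}")
        elif type(args[field]) is not expected:
            # Exact type check, so a JSON boolean is not accepted as an int.
            errors.append(f"wrong type for field: {field}")
    for field in args:
        if field not in schema:
            errors.append(f"unexpected field: {field}")

    return (args if not errors else None), errors
```

In an agent loop, a non-empty `errors` list would typically be returned to the model as the tool result so it can correct the call on the next step.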

Open, powerful and politically controversial

DeepSeek ships V3.2 variants under permissive open-source licenses, making them usable for commercial products, on-premises deployments and custom fine-tuning.

That openness has triggered political fallout. Some US government agencies have already banned DeepSeek tools on official devices, citing national security and data privacy concerns. Several states followed with their own restrictions.

For businesses, the lesson is simple:

Technically, V3.2 is a serious contender for reasoning-heavy workloads.

Legally and politically, you must check your compliance constraints and jurisdictions before betting your stack on it.

Where DeepSeek V3.2 fits in your strategy

If your environment allows it, V3.2 is a strong option for:

Cost-sensitive reasoning workloads where GPT-4.5 or other proprietary frontier models are too expensive.

On-premises or regional deployments where you need to avoid specific cloud providers or hardware.

Research, coding and math-heavy tasks that benefit from strong step-by-step reasoning.

The smart move is to treat DeepSeek as one contender in a multi-model portfolio. Benchmark it honestly against GPT, Gemini, Claude and Mistral on your tasks, then assign it where it wins.
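The "benchmark, then assign" step can be as simple as averaging your own evaluation scores per task and routing each task to the winner. The sketch below assumes you already have scores from your own test set; the `assign_models` function and the data layout are hypothetical, and the numbers in the usage example are placeholders, not real benchmark results.

```python
from statistics import mean

def assign_models(results):
    """Pick the best model per task by mean score.

    results: {task_name: {model_name: [score, ...]}}
    Returns {task_name: model_name}. Models with no scores are skipped.
    """
    assignment = {}
    for task, per_model in results.items():
        averages = {
            model: mean(scores)
            for model, scores in per_model.items()
            if scores
        }
        if averages:
            assignment[task] = max(averages, key=averages.get)
    return assignment

# Placeholder scores for illustration only; replace with your own measurements.
example_results = {
    "coding": {"model_a": [0.8, 0.9], "model_b": [0.7, 0.95]},
    "summaries": {"model_a": [0.6], "model_b": [0.9]},
}
routing = assign_models(example_results)
```

A real portfolio would usually weigh cost and latency alongside quality, but the principle is the same: route per task based on measured results rather than on a single overall ranking.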

Neuronex Intel

System Admin