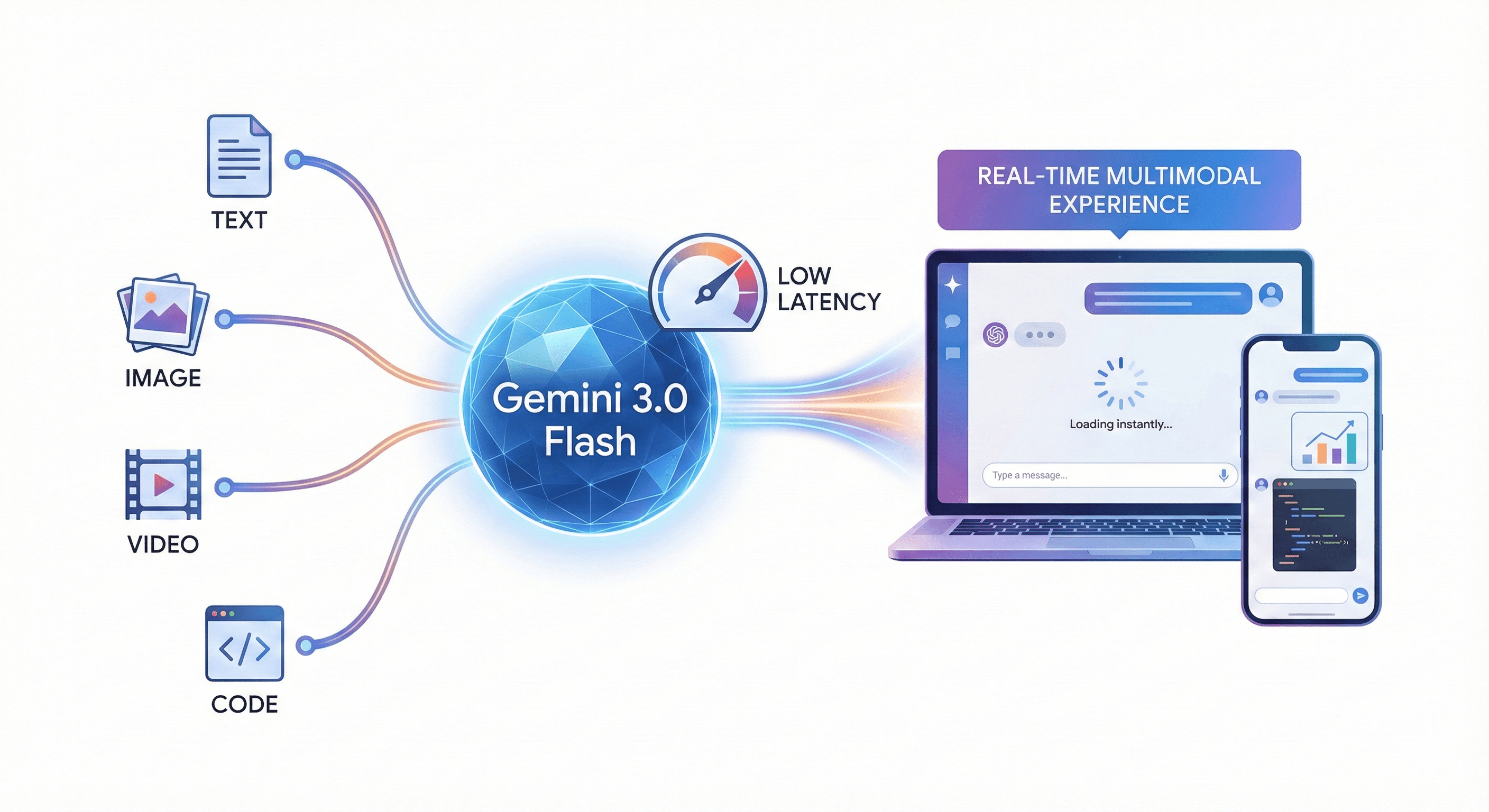

Gemini 3.0 Flash: Real-Time Multimodal Intelligence for Agentic Apps


Gemini 3.0 Flash is what happens when a model is designed for speed and reasoning at the same time. It sits in the Gemini 3 family as the high-speed, real-time sibling to the heavier Pro variants, built to power interactive tools, agents and creative workflows that cannot afford lag.

Under the hood, Gemini 3 introduces Google’s strongest reasoning and multimodal stack so far, including support for long contexts and advanced tool use across text, images, video and code. Flash takes those capabilities and optimizes them for low latency on TPU v5p infrastructure, so responses stay sub-second for typical workloads.

What makes Gemini 3.0 Flash different

Most “fast” models give up depth to gain speed. Gemini 3.0 Flash is explicitly designed not to. Compared with earlier Gemini 2.5 Flash variants, it adds:

- Improved multi-step reasoning, with planning loops built in by default

- Stronger self-correction, so the model can revise intermediate steps before answering

- Expanded multimodal input coverage, including richer image and video understanding and support for emerging formats like 3D and geospatial data in the broader Gemini 3 family

- Tight integration with tool calling and multi-agent setups

In practice, this means you can treat Flash as the “front line” of your application, handling live interaction and delegation, while heavier models or offline workers handle large background jobs if needed.

Built for real-time, multimodal experiences

Gemini 3.0 Flash is tuned for scenarios where users expect instant feedback:

- Conversational editing: updating documents, slides or code based on natural language instructions while the user types

- Creative workflows: generating, refining and remixing images, copy and layouts in design tools and editors

- Agentic coding: powering IDE copilots, code explorers and dev tools that need quick, context-aware suggestions

The broader Gemini 3 stack supports context windows of up to 1 million tokens at the Pro level, so Flash can participate in ecosystems where large shared context and long-running projects matter, even though Flash itself is optimized for speed.

For developers, that opens the door to applications where:

- A fast Flash instance handles chat, UI and tool orchestration

- A deeper Pro instance handles heavy analysis, large document reasoning or long-running plans

- Both share state through external stores and tools
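The split above can be sketched as a small router. This is a minimal illustration, assuming a simple length-and-keyword heuristic decides when to escalate; `call_flash`, `call_pro` and `SharedStore` are hypothetical stand-ins, not Gemini API calls. In a real system they would wrap your model client and an external store such as a database or cache.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical stand-ins for model clients; replace with real API wrappers.
def call_flash(prompt: str) -> str:
    return f"[flash] {prompt[:40]}"

def call_pro(prompt: str) -> str:
    return f"[pro] {prompt[:40]}"

@dataclass
class SharedStore:
    """In-memory stand-in for an external store (database, cache, vector DB)."""
    notes: dict = field(default_factory=dict)

    def append(self, session_id: str, entry: str) -> None:
        self.notes.setdefault(session_id, []).append(entry)

    def history(self, session_id: str) -> list:
        return self.notes.get(session_id, [])

# Assumed escalation heuristic: long inputs or explicit analysis requests go to Pro.
HEAVY_KEYWORDS = ("analyze", "audit", "summarize the report", "long-term plan")
MAX_FAST_CHARS = 2000

def choose_model(prompt: str) -> Callable[[str], str]:
    lowered = prompt.lower()
    if len(prompt) > MAX_FAST_CHARS or any(k in lowered for k in HEAVY_KEYWORDS):
        return call_pro
    return call_flash

def handle(store: SharedStore, session_id: str, prompt: str) -> str:
    model = choose_model(prompt)
    # Both tiers read the same shared history, so state survives a hand-off.
    context = "\n".join(store.history(session_id))
    reply = model(f"{context}\n{prompt}" if context else prompt)
    store.append(session_id, f"user: {prompt}")
    store.append(session_id, f"assistant: {reply}")
    return reply
```

A quick UI edit such as `handle(store, "s1", "Rename the slide title")` stays on Flash, while `handle(store, "s1", "Analyze churn across Q3 tickets")` escalates to Pro against the same session history. The heuristic is deliberately crude; many teams let the fast model itself decide when to delegate.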

Agentic behavior out of the box

Gemini 3.0 is not only about answering questions. It is designed to act as the engine behind agents that can plan and execute tasks across tools and services. Flash leans into that with:

- Native support for planning loops so the model can break down tasks into steps

- Built-in verification passes that let it re-evaluate intermediate reasoning and call tools again when needed

- Improved tool calling behavior that reduces failed calls and mismatched schemas

- Compatibility with agentic environments and IDEs like Antigravity, where multi-agent coding workflows rely on fast, reliable model calls
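The plan, execute and verify pattern described above can be sketched as a generic agent loop. This illustrates the pattern only, not Gemini's internal mechanism: `plan`, `execute_step` and `verify` are hypothetical functions that, in practice, would be model calls or tool invocations. Each step is retried a bounded number of times when verification fails.

```python
from dataclasses import dataclass

@dataclass
class StepResult:
    step: str
    output: str
    attempts: int

# Hypothetical planner: a real agent would ask the model to decompose the task.
def plan(task: str) -> list:
    return [part.strip() for part in task.split(" then ") if part.strip()]

# Hypothetical executor: a real agent would call a tool or the model here.
def execute_step(step: str, attempt: int) -> str:
    # Simulate a flaky first attempt on any step that mentions "fetch".
    if "fetch" in step and attempt == 1:
        return ""
    return f"done: {step}"

# Hypothetical verifier: a real agent might re-prompt the model to check the output.
def verify(step: str, output: str) -> bool:
    return output == f"done: {step}"

def run_agent(task: str, max_attempts: int = 3) -> list:
    results = []
    for step in plan(task):
        for attempt in range(1, max_attempts + 1):
            output = execute_step(step, attempt)
            if verify(step, output):
                results.append(StepResult(step, output, attempt))
                break
        else:
            # No attempt passed verification: stop instead of building on a bad step.
            raise RuntimeError(f"step failed verification after {max_attempts} attempts: {step}")
    return results
```

Running `run_agent("fetch open tickets then draft summary then post to Slack")` yields three verified steps, with the first one succeeding on its second attempt. Failing loudly after `max_attempts` keeps a bad intermediate result from silently propagating into later steps.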

For AI agencies and product teams, this is ideal for:

- Orchestrating multi-tool workflows in backends and automation platforms

- Running decision-making layers that decide which tool, document store or API to call next

- Building assistants that can actually complete tasks, not just suggest steps
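A decision layer usually needs a registry of available tools and a check that the model's proposed arguments match each tool's schema before anything runs. The sketch below assumes the model returns a tool call as a JSON object with `name` and `args` fields; that shape, the tool names and the type-only schema format are hypothetical. Real function-calling APIs define their own formats, and production code would typically use a full JSON Schema validator.

```python
import json
from typing import Any, Callable, Dict, Tuple

# Hypothetical tools; in production these would hit a CRM, ticketing system, etc.
def lookup_customer(email: str) -> dict:
    return {"email": email, "tier": "gold"}

def create_ticket(title: str, priority: int) -> dict:
    return {"id": 101, "title": title, "priority": priority}

# Minimal schema: argument name -> required Python type.
TOOLS: Dict[str, Tuple[Callable[..., Any], Dict[str, type]]] = {
    "lookup_customer": (lookup_customer, {"email": str}),
    "create_ticket": (create_ticket, {"title": str, "priority": int}),
}

def validate(args: dict, schema: Dict[str, type]) -> list:
    errors = [f"missing argument: {k}" for k in schema if k not in args]
    errors += [f"unexpected argument: {k}" for k in args if k not in schema]
    errors += [
        f"{k} should be {t.__name__}"
        for k, t in schema.items()
        if k in args and not isinstance(args[k], t)
    ]
    return errors

def dispatch(raw_call: str) -> dict:
    """Parse a model-proposed tool call, validate it, then run it or report errors."""
    call = json.loads(raw_call)
    name = call.get("name")
    if name not in TOOLS:
        return {"ok": False, "errors": [f"unknown tool: {name}"]}
    fn, schema = TOOLS[name]
    args = call.get("args", {})
    errors = validate(args, schema)
    if errors:
        # Returning errors lets the model correct its call instead of crashing the workflow.
        return {"ok": False, "errors": errors}
    return {"ok": True, "result": fn(**args)}
```

Feeding the error list back to the model as the tool result gives it a chance to retry with corrected arguments, which is one practical way to cut down on failed calls and mismatched schemas.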

Where Gemini 3.0 Flash fits in your stack

Think of Flash as the responsive layer for:

- Customer-facing chat and support interfaces that need fast answers grounded in your data

- Internal copilots for operations, sales, marketing or product teams

- Creative tooling where every click expects a near-instant generative update

- Agent coordinators that sit in the middle of many tools, webhooks and workflows

You can pair Flash with:

- File Search or custom retrieval systems for grounded answers over documents

- Background workers using larger models for offline analysis

- Application-specific tools for CRM, ERP, ticketing, analytics and more
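Grounding works by retrieving relevant passages first and placing them in the prompt with identifiers the model can cite. The sketch below uses naive term-overlap scoring purely for illustration; it is not how File Search works internally, and a real system would use embeddings or a managed retrieval service. `retrieve`, `build_grounded_prompt` and the document format are hypothetical.

```python
import re

def tokenize(text: str) -> set:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, docs: dict, k: int = 2) -> list:
    """Rank documents by shared query terms; keep the top k with any overlap."""
    q = tokenize(query)
    scored = [(len(q & tokenize(body)), doc_id, body) for doc_id, body in docs.items()]
    scored = [s for s in scored if s[0] > 0]
    # Highest overlap first; ties broken by document id for deterministic output.
    scored.sort(key=lambda s: (-s[0], s[1]))
    return [(doc_id, body) for _, doc_id, body in scored[:k]]

def build_grounded_prompt(query: str, docs: dict) -> str:
    passages = retrieve(query, docs)
    if passages:
        sources = "\n".join(f"[{doc_id}] {body}" for doc_id, body in passages)
    else:
        sources = "(no matching documents)"
    return (
        "Answer using only the sources below and cite them by id. "
        "If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {query}"
    )
```

The resulting prompt would then go to the fast model. Keeping retrieval outside the model call means you can swap in a better retriever without changing how Flash is prompted.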

This separation of roles means you avoid paying for heavy models on every interaction while still delivering state-of-the-art behavior where it matters.

Why Gemini 3.0 Flash matters now

The next wave of AI products will not be static chatbots. They will be responsive, multimodal interfaces that feel closer to real time collaboration with an intelligent system. That requires:

- Sub-second responses

- Reliable multi-step reasoning

- Strong integration with tools and data sources

- Multimodal understanding that does not slow everything down

Gemini 3.0 Flash is designed for exactly that intersection. If you are building interactive apps, agentic workflows or creative tools, it gives you a fast model that still thinks clearly, rather than a shallow “fast mode” that collapses on complex tasks.

Neuronex Intel

System Admin