GLM-4.6: China’s Flagship Reasoning Model For Agents, Coding And Multimodal AI

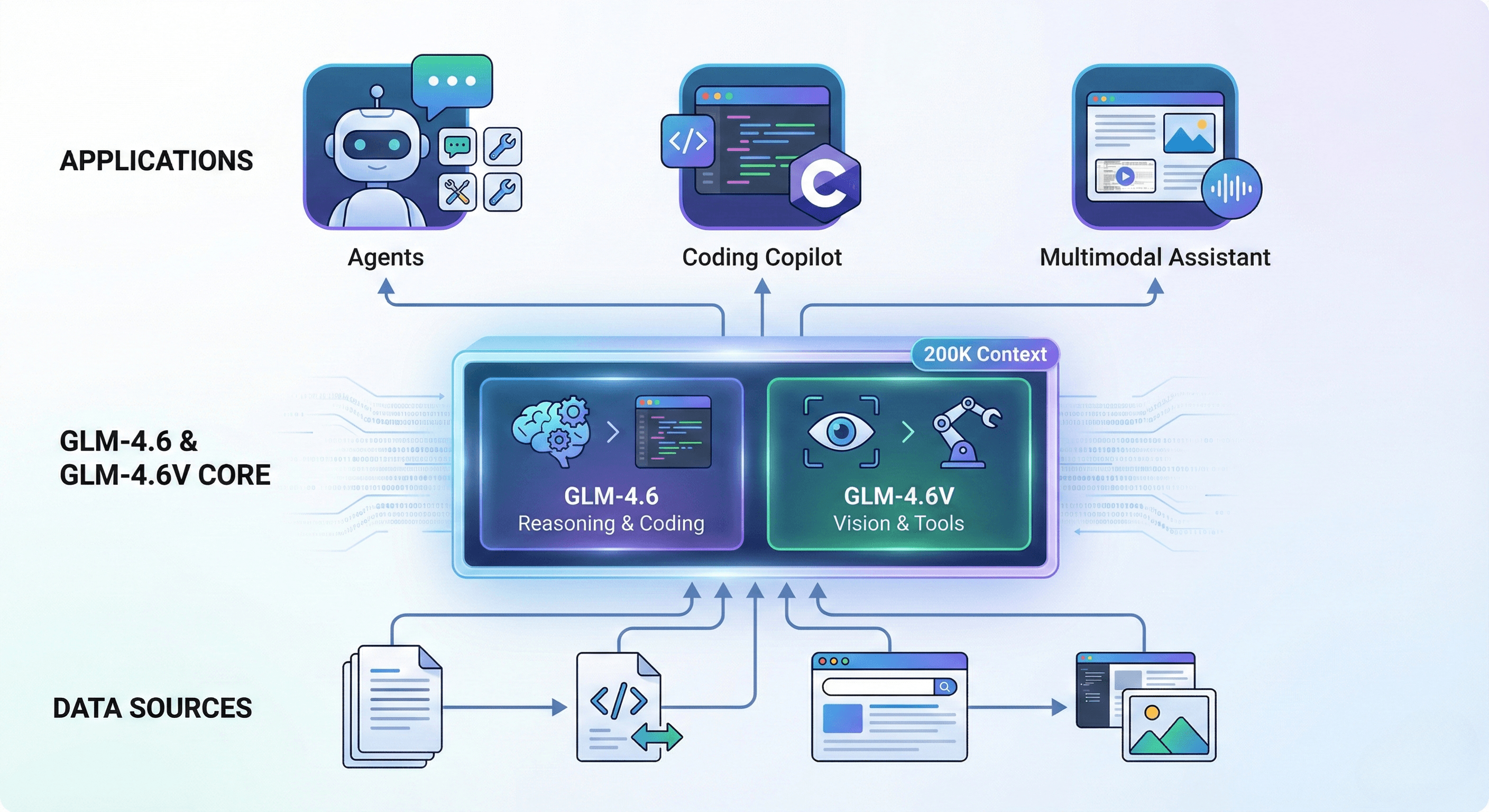

GLM-4.6 is Zhipu AI’s new flagship model, built as a deep-reasoning engine for agents, coding tasks and long-context problem solving. It extends the GLM line with stronger logical reasoning, better multilingual performance and a larger context window that can hold large projects, multi-document workflows and complete agent traces.

GLM-4.6 is designed to compete with the newest mid-frontier models from Western labs, delivering strong reasoning with lower token usage and more efficient planning. It is positioned as a model that can reason over longer horizons, act more reliably and integrate cleanly into coding tools, RAG systems and agent frameworks.

What GLM-4.6 brings to the table:

- 200K context window that supports deep multi-document reasoning

- Stronger coding capabilities across real project-level tasks

- Lower token usage than the previous generation

- Better step-by-step planning for agent workflows

- Optimized for bilingual environments in Chinese and English

- Built for tool use, retrieval and structured agent actions
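To make the 200K context figure concrete, here is a minimal sketch of how a caller might check which documents fit into one request. The 200K limit comes from the list above; the output reserve, the four-characters-per-token heuristic and the function names are assumptions for illustration only, and a real pipeline would use the model's own tokenizer (Chinese text in particular will not follow this heuristic).

```python
from typing import List, Tuple

CONTEXT_WINDOW = 200_000     # from the article: 200K-token context window
RESERVED_FOR_OUTPUT = 8_000  # assumption: head-room kept for the model's reply
CHARS_PER_TOKEN = 4          # assumption: crude heuristic, not the real tokenizer


def estimate_tokens(text: str) -> int:
    """Rough token estimate; swap in the model's actual tokenizer if available."""
    return max(1, len(text) // CHARS_PER_TOKEN)


def pack_documents(
    docs: List[str],
    budget: int = CONTEXT_WINDOW - RESERVED_FOR_OUTPUT,
) -> Tuple[List[str], List[str]]:
    """Keep each document that still fits in the budget; skip the ones that do not."""
    kept: List[str] = []
    dropped: List[str] = []
    used = 0
    for doc in docs:
        cost = estimate_tokens(doc)
        if used + cost <= budget:
            kept.append(doc)
            used += cost
        else:
            dropped.append(doc)
    return kept, dropped
```

Documents that land in `dropped` are candidates for retrieval or summarization rather than direct inclusion in the prompt.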

GLM-4.6 also expands into multimodal AI through its companion models GLM-4.6V and GLM-4.6V-Flash. These handle images, documents and visual tasks while maintaining fast inference and strong accuracy. The multimodal series supports visual inputs, screenshot understanding, document parsing and tool use that mixes text and images fluidly.

What the multimodal side adds:

- Vision-language fusion for images, screenshots and documents

- Multimodal reasoning for web pages, charts and structured layouts

- Function calling from visual inputs

- Lightweight Flash versions for on-device or edge deployments

Developers, agencies and enterprises can use GLM-4.6 at several layers of their stack. It works as a reasoning brain for agents, a coding engine for dev tools, or a multimodal processor for apps that need to see and understand visual content. It can be accessed through Zhipu’s API or partner hubs, or run locally in optimized lightweight variants.
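As a rough illustration of the "reasoning brain for agents" role, the sketch below builds a chat request that exposes one tool to the model. It assumes an OpenAI-style chat-completions schema with a `tools` array; the model identifier `glm-4.6` and the `search_docs` tool are hypothetical placeholders, and the exact request format should be checked against the provider's API reference before use. The code only constructs and prints the payload and does not call any endpoint.

```python
import json
from typing import Any, Dict, List

# Assumption: placeholder model identifier, not confirmed by the article.
MODEL_ID = "glm-4.6"

# Hypothetical tool definition in JSON-Schema style.
SEARCH_TOOL: Dict[str, Any] = {
    "type": "function",
    "function": {
        "name": "search_docs",
        "description": "Search internal documents and return matching passages.",
        "parameters": {
            "type": "object",
            "properties": {"query": {"type": "string"}},
            "required": ["query"],
        },
    },
}


def build_chat_request(question: str, tools: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Assemble a request body with a system prompt, one user turn and the tool list."""
    return {
        "model": MODEL_ID,
        "messages": [
            {"role": "system", "content": "You are a planning agent. Call tools when needed."},
            {"role": "user", "content": question},
        ],
        "tools": tools,
    }


request = build_chat_request("Summarize our refund policy.", [SEARCH_TOOL])
print(json.dumps(request, indent=2))
```

An agent framework would send this body to the chosen endpoint, execute any tool call the model returns, and append the tool result as a new message before asking again.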

GLM-4.6 fits best in workflows that need:

- Strong reasoning for long chains of thought

- Reliable agent decisions and tool planning

- High-volume coding assistance

- Multilingual or cross-market deployments

- Visual + text pipelines inside one model family

- Scalable, multi-model routing systems for cost efficiency

Zhipu AI’s strategy is simple: deep reasoning, big context, lower cost, and full multimodal capability in a single ecosystem. GLM-4.6 shows the Chinese AI stack aiming directly at real enterprise workloads, not just benchmarks. It’s a model you test in a multi-model lineup, and where it wins, you route real traffic.
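The "test in a lineup, route where it wins" idea can be sketched as a small routing table built from your own evaluation results. Everything here is an assumption for illustration: the `EvalResult` fields, the selection rule (cheapest model that clears a quality threshold, otherwise the best scorer) and the generic model names. No real benchmark numbers are implied.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class EvalResult:
    model: str       # model name from your own lineup
    task_type: str   # e.g. "coding", "rag", "vision"
    score: float     # your internal quality metric, higher is better
    cost: float      # cost per request in arbitrary units


def build_routing_table(results: List[EvalResult], min_score: float) -> Dict[str, str]:
    """Per task type, pick the cheapest model that meets min_score.

    If no model meets the threshold, fall back to the highest-scoring one.
    """
    by_task: Dict[str, List[EvalResult]] = {}
    for result in results:
        by_task.setdefault(result.task_type, []).append(result)

    table: Dict[str, str] = {}
    for task_type, task_results in by_task.items():
        good_enough = [r for r in task_results if r.score >= min_score]
        if good_enough:
            chosen = min(good_enough, key=lambda r: r.cost)
        else:
            chosen = max(task_results, key=lambda r: r.score)
        table[task_type] = chosen.model
    return table
```

Feeding the function the results of an internal evaluation run yields a mapping such as `{"coding": "model-a", "rag": "model-b"}`, which a gateway can then use to direct traffic by task type.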

Neuronex Intel

System Admin