Human-in-the-Loop Agents 2026: The Approval System That Makes AI Autonomy Safe

Why “full autonomy” is the wrong goal

Full autonomy sounds sexy until your agent:

- emails the wrong client

- updates the wrong record

- refunds the wrong invoice

- publishes the wrong content

- escalates the wrong ticket

- books calls with unqualified leads

The real goal is safe autonomy: agents do 80 to 95% of the work, and humans approve the 5 to 20% that carries real risk.

That’s human-in-the-loop. Not because AI is useless, but because business actions have consequences.

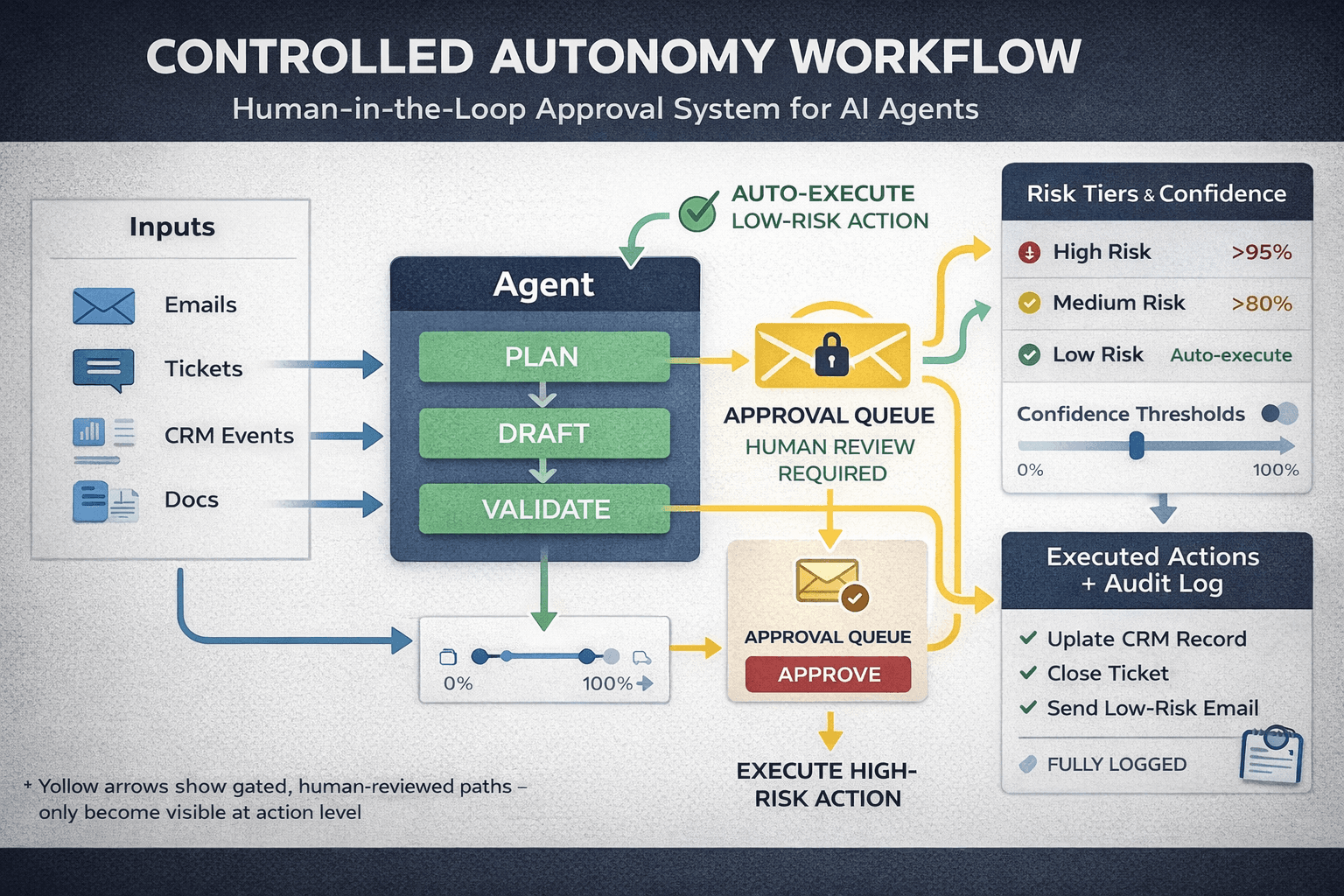

What Human-in-the-Loop actually means

Human-in-the-loop (HITL) is a control layer that decides:

- when an agent can act automatically

- when it must request approval

- when it should stop and ask for missing info

- when it should escalate to a human operator

HITL is not “the agent asks a human every time.”

HITL is “the agent only asks when it matters.”
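A minimal sketch of that control layer as one routing function, in Python. It assumes the agent already knows which inputs are missing, whether the situation is an exception, and whether the action is risky. `Decision` and `decide` are hypothetical names, and how each flag gets computed is left open.

```python
from enum import Enum


class Decision(Enum):
    """The four outcomes of the HITL control layer."""
    AUTO_EXECUTE = "auto_execute"
    REQUEST_APPROVAL = "request_approval"
    ASK_FOR_INFO = "ask_for_info"
    ESCALATE = "escalate"


def decide(missing_fields: list, is_exception: bool, is_risky: bool) -> Decision:
    """Route one proposed action. Checks run from most to least blocking."""
    if missing_fields:
        # The agent cannot act safely without this data: stop and ask.
        return Decision.ASK_FOR_INFO
    if is_exception:
        # Outside what the agent was built to handle: hand off to an operator.
        return Decision.ESCALATE
    if is_risky:
        # Safe to draft, not safe to run unreviewed.
        return Decision.REQUEST_APPROVAL
    return Decision.AUTO_EXECUTE
```

The ordering is the design choice: a risky action with missing data should trigger a question, not an approval request for an incomplete draft.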

The 3 levels of agent autonomy you should deploy

Assisted mode

- agent drafts and recommends

- human executes

- Best for: early deployments, regulated workflows, sensitive comms.

Guarded autonomy

- agent executes low-risk actions

- approval required for risky steps

- Best for: sales ops, support, marketing, internal ops.

Full autonomy with constraints

- agent runs end-to-end inside strict budgets and permissions

- only escalates on exceptions

- Best for: repetitive back-office workflows with low downside.

Most businesses should live in guarded autonomy. It’s the sweet spot.
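One way to encode the three levels, as a sketch: the mode is set per workflow and decides whether the agent may run an action itself. `Mode`, `Risk`, and `agent_may_execute` are hypothetical names, and `within_limits` stands in for whatever budget and permission checks you actually run.

```python
from enum import Enum


class Mode(Enum):
    ASSISTED = "assisted"                  # agent drafts, human executes
    GUARDED = "guarded"                    # agent runs low-risk actions only
    FULL_CONSTRAINED = "full_constrained"  # agent runs end to end inside limits


class Risk(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def agent_may_execute(mode: Mode, risk: Risk, within_limits: bool) -> bool:
    """True if the agent may run this action itself, with no human step."""
    if mode is Mode.ASSISTED:
        return False                       # output is always a draft
    if mode is Mode.GUARDED:
        # Medium/high risk waits for approval; low risk still respects limits.
        return risk is Risk.LOW and within_limits
    # FULL_CONSTRAINED: the risk level was accepted up front for this workflow;
    # only breaking a budget or permission limit counts as an exception.
    return within_limits
```

Assisted mode returns `False` for everything by design: the agent's output is a recommendation, and a human performs the action.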

What actions should always require approval

If it’s irreversible or reputational, gate it.

Examples:

- sending external emails or DMs

- publishing public content

- issuing refunds or altering billing

- deleting records

- changing permissions or roles

- pushing code to production

- contacting high-value accounts

- legal, HR, medical, or compliance messaging

Let the agent draft everything. Let humans approve the dangerous parts.
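The rule above fits in a few lines. This sketch assumes each tool call carries an action type plus two flags, `reversible` and `external_facing`. The action identifiers are hypothetical stand-ins for the examples listed, not a standard taxonomy.

```python
# Hypothetical action identifiers mirroring the list above.
ALWAYS_GATED = frozenset({
    "send_external_message",
    "publish_content",
    "issue_refund",
    "change_billing",
    "delete_record",
    "change_permissions",
    "deploy_to_production",
    "contact_high_value_account",
    "send_regulated_message",  # legal, HR, medical, or compliance
})


def needs_human_approval(action_type: str, reversible: bool, external_facing: bool) -> bool:
    """Gate the named actions, plus anything irreversible or reputational."""
    if action_type in ALWAYS_GATED:
        return True
    # General rule: irreversible, or visible outside the company -> gate it.
    return (not reversible) or external_facing
```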

The Approval System blueprint

This is the part people mess up. They either gate everything (kills ROI) or gate nothing (creates disasters).

A production HITL system includes:

Confidence thresholds

- the agent attaches a confidence score (for example, 0 to 1) to each proposed action

- a score below the threshold triggers approval or escalation
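A sketch of threshold routing, assuming the agent already produces a score between 0 and 1 (how it does so is left open). The two cutoffs, 0.85 for approval and 0.5 for escalation, are illustrative defaults, not recommended values.

```python
def route_by_confidence(confidence: float,
                        approve_below: float = 0.85,
                        escalate_below: float = 0.5) -> str:
    """Map a confidence score in [0, 1] to "auto", "approve", or "escalate".

    Thresholds are hypothetical defaults; tune them per workflow.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError(f"confidence must be in [0, 1], got {confidence}")
    if confidence < escalate_below:
        return "escalate"   # too unsure to even draft for approval
    if confidence < approve_below:
        return "approve"    # plausible, but a human should check it
    return "auto"
```

Using two cutoffs instead of one keeps reviewers from wading through drafts the agent barely believes in; those go straight to a human operator.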

Action categories

- classify actions as low, medium, high risk

- map each category to approval rules
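Action categories can be a plain lookup table. In this sketch the action names, the roles, and the number of sign-offs per category are all hypothetical. The one design choice worth copying is that unclassified actions default to high risk, so a newly added tool fails closed rather than open.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Risk(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


@dataclass(frozen=True)
class ApprovalRule:
    approvals_required: int          # distinct human sign-offs needed
    approver_role: Optional[str]     # who may sign off; None if no approval


# Hypothetical classification of action types.
ACTION_RISK = {
    "tag_ticket": Risk.LOW,
    "update_crm_field": Risk.MEDIUM,
    "issue_refund": Risk.HIGH,
}

# One approval rule per risk category (illustrative values).
APPROVAL_RULES = {
    Risk.LOW: ApprovalRule(approvals_required=0, approver_role=None),
    Risk.MEDIUM: ApprovalRule(approvals_required=1, approver_role="team_lead"),
    Risk.HIGH: ApprovalRule(approvals_required=2, approver_role="manager"),
}


def rule_for(action_type: str) -> ApprovalRule:
    """Look up the approval rule; unknown actions are treated as high risk."""
    return APPROVAL_RULES[ACTION_RISK.get(action_type, Risk.HIGH)]
```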

Two-step execution

- step 1: draft action (message, update, change set)

- step 2: approve and execute
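Two-step execution is mostly a small state machine: the agent can only create drafts, and the function that touches real systems refuses anything not yet approved. A minimal sketch, where `DraftAction`, `approve`, `execute`, and the status strings are hypothetical, and `run` is a placeholder for your actual tool call.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class DraftAction:
    """Step 1 output: a proposed action (message, update, change set) that has not run."""
    action_type: str
    payload: dict
    status: str = "draft"               # draft -> approved -> executed
    approved_by: Optional[str] = None


def approve(draft: DraftAction, approver: str, edits: Optional[dict] = None) -> DraftAction:
    """Step 2a: a human reviews the draft, optionally edits it, and approves it."""
    if draft.status != "draft":
        raise ValueError(f"cannot approve an action in status {draft.status!r}")
    if edits:
        # Human edits override the agent's proposed values.
        draft.payload = {**draft.payload, **edits}
    draft.status = "approved"
    draft.approved_by = approver
    return draft


def execute(draft: DraftAction, run: Callable[[str, dict], None]) -> None:
    """Step 2b: only an approved draft ever reaches the real tool call."""
    if draft.status != "approved":
        raise PermissionError(f"action {draft.action_type!r} is not approved")
    run(draft.action_type, draft.payload)
    draft.status = "executed"


# Usage: the lambda stands in for whatever actually sends the email.
outbox = []
d = DraftAction("send_email", {"to": "client@example.com", "body": "Hi"})
approve(d, approver="alice", edits={"body": "Hi Sam, invoice attached."})
execute(d, lambda kind, payload: outbox.append((kind, payload)))
```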

Policy checks

Before execution, validate:

- required fields present

- values within allowed ranges

- permissions allowed for that tenant

- budget not exceeded

- tool call schema correct
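The five checks map onto a single validation function that returns every violation at once, so a reviewer sees the full picture instead of fixing one error per round trip. Everything here is an assumption for illustration: the `Policy` fields, treating the schema as field-to-type pairs, and modeling tenant permissions as a set of allowed tools.

```python
from dataclasses import dataclass


@dataclass
class Policy:
    """Hypothetical per-tenant policy; field names are illustrative."""
    required_fields: dict[str, type]          # field -> expected type (schema)
    ranges: dict[str, tuple[float, float]]    # field -> (min, max), inclusive
    allowed_tools: set[str]                   # tools this tenant may call
    budget_remaining: float                   # spend left for this run


def check_policy(tool: str, args: dict, cost: float, policy: Policy) -> list[str]:
    """Return all violations; an empty list means the action may proceed."""
    problems = []
    # Required fields present, with the expected types.
    for name, expected in policy.required_fields.items():
        if name not in args:
            problems.append(f"missing field: {name}")
        elif not isinstance(args[name], expected):
            problems.append(f"wrong type for {name}: expected {expected.__name__}")
    # Values within allowed ranges.
    for name, (lo, hi) in policy.ranges.items():
        value = args.get(name)
        if isinstance(value, (int, float)) and not lo <= value <= hi:
            problems.append(f"{name}={value} outside [{lo}, {hi}]")
    # Permissions allowed for this tenant.
    if tool not in policy.allowed_tools:
        problems.append(f"tool not permitted for tenant: {tool}")
    # Budget not exceeded.
    if cost > policy.budget_remaining:
        problems.append("budget exceeded")
    return problems
```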

Audit logs

Store:

- what the agent wanted to do

- what the human changed

- who approved

- when it executed

This is how you debug and defend decisions later.
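A sketch of one audit record covering the four items above, serialized as a JSON line. It assumes an append-only log store (a file, table, or log service); `AuditRecord` and `to_log_line` are hypothetical names. Storing an explicit diff is what makes "what the human changed" queryable later.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


def now_utc() -> str:
    return datetime.now(timezone.utc).isoformat()


@dataclass
class AuditRecord:
    action_type: str
    proposed: dict                 # what the agent wanted to do
    final: dict                    # what actually ran, after any human edits
    approved_by: Optional[str]     # who approved; None if auto-executed
    executed_at: str = field(default_factory=now_utc)  # when it executed (UTC)

    def human_changes(self) -> dict:
        """What the human changed: {field: (proposed_value, final_value)}."""
        keys = set(self.proposed) | set(self.final)
        return {k: (self.proposed.get(k), self.final.get(k))
                for k in sorted(keys)
                if self.proposed.get(k) != self.final.get(k)}


def to_log_line(record: AuditRecord) -> str:
    """Serialize one record as a JSON line for an append-only audit log."""
    entry = asdict(record)
    entry["human_changes"] = record.human_changes()
    return json.dumps(entry, sort_keys=True)
```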

How HITL improves ROI instead of slowing you down

People assume approvals slow everything down. Wrong.

HITL reduces:

- costly mistakes

- rollback work

- reputation damage

- compliance risk

- internal distrust

And it increases:

- adoption

- confidence

- scalability

- speed over time, as approvals drop while performance improves

The goal is to start with more approvals, then reduce them as you prove reliability.
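Reducing approvals can be made mechanical rather than a gut call. This sketch assumes you record, per reviewed action, whether the human approved it unchanged, and that actions scoring at or above a confidence threshold run without approval. `adjust_threshold` and its sample size, target rate, step, and bounds are hypothetical defaults.

```python
def adjust_threshold(history: list, current: float,
                     min_samples: int = 200, target_rate: float = 0.98,
                     step: float = 0.05, floor: float = 0.6,
                     ceiling: float = 0.99) -> float:
    """Suggest a new confidence threshold for auto-execution.

    history: one bool per human-reviewed action, True if approved unchanged.
    Lowering the threshold means fewer approvals; raising it means more.
    """
    recent = history[-min_samples:]
    if len(recent) < min_samples:
        return current                      # not enough evidence yet
    clean_rate = sum(recent) / len(recent)  # share approved without edits
    if clean_rate >= target_rate:
        return max(floor, round(current - step, 2))    # reliability proven: relax
    if clean_rate < target_rate - 0.10:
        return min(ceiling, round(current + step, 2))  # quality slipped: tighten
    return current
```

The tighten branch matters as much as the relax branch: if human edits start climbing, approvals come back automatically.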

How to sell this as an AI agency

This is a premium offer because it’s what executives actually want.

You sell:

- “We deploy safe agents with approval controls.”

- “We reduce human oversight over time.”

- “We log every action and prove performance.”

- “We build governance so you can scale without fear.”

Then you retain on:

- monitoring

- optimization

- reducing escalations

- improving confidence and automation rates

Real autonomy isn’t “no humans.”

Real autonomy is humans only when it matters.

If you want agents that survive in production, build a human-in-the-loop approval system from day one. It’s the difference between scaling automation and scaling disasters.

Neuronex Intel

System Admin