Kling O1: The Unified Multimodal Video Engine For Serious Creators


Most AI video tools still feel like a patchwork of half-connected modes: one model for text-to-video, another for editing, another for style transfer. Kling O1 is trying to get rid of that mess.

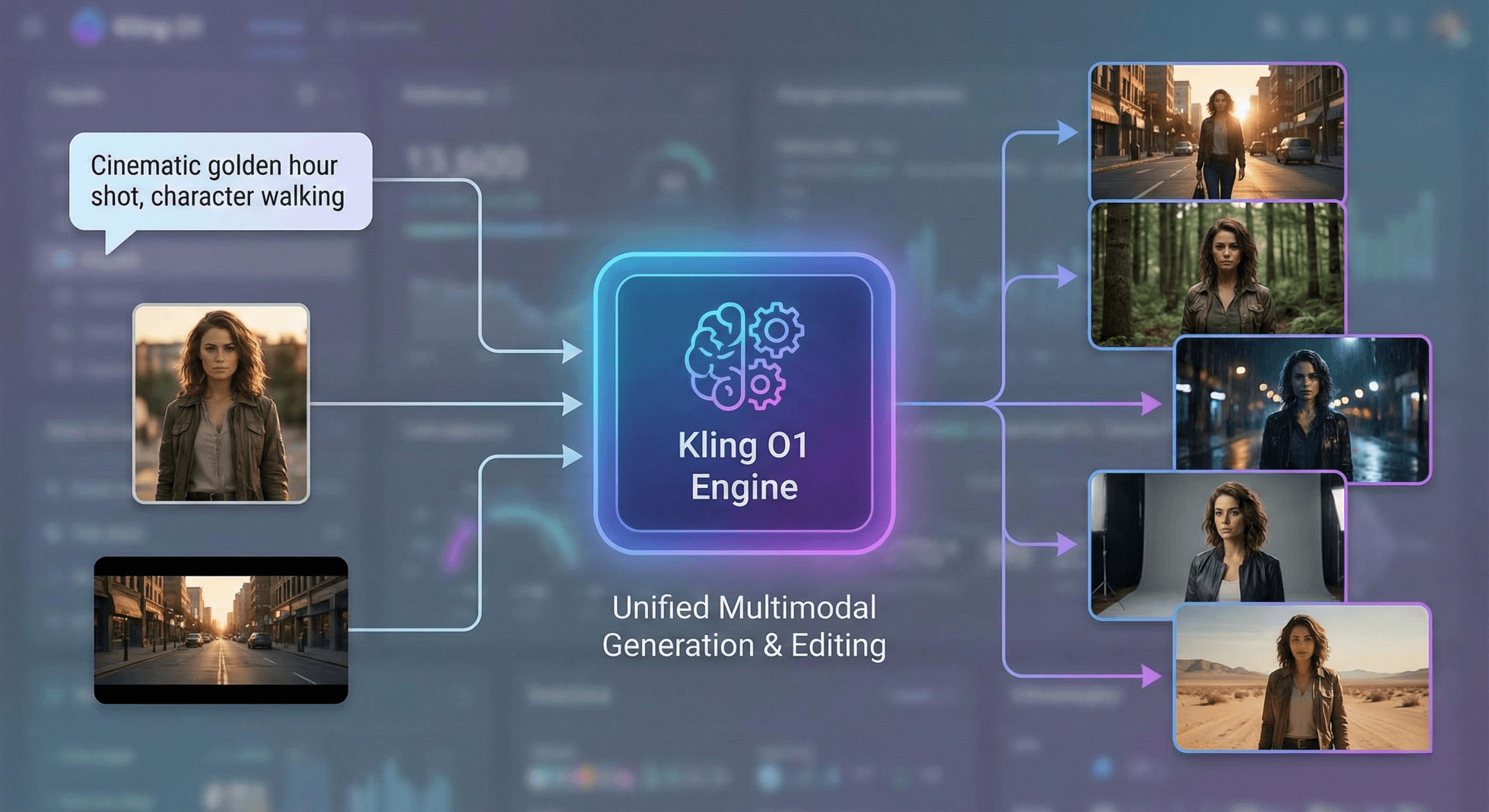

Positioned as a unified multimodal video engine, Kling O1 handles generation and editing in one model. You feed it text, images, reference clips, subject references and instructions, and it responds with cinematic 1080p video, in clips of 3 to 10 seconds or longer, while keeping characters and style consistent.

What Kling O1 actually is

Kling O1, often referred to as Video O1 or Omni One, is a next-generation video foundation model built on an MVL (Multimodal Visual Language) architecture. Instead of treating text, images and video as separate channels, it processes them in a shared semantic space. Language describes intent, visuals act as rich references, and the model reasons over both before drawing a single frame.

Concretely, Kling O1 supports all of the following in a single workflow:

- Text-to-video generation from scratch

- Image-to-video and subject-based generation using one or more reference images

- Video-to-video restyling and transformation

- Object-level editing, such as adding or removing subjects, changing outfits or replacing backgrounds

- Environmental changes like weather, lighting and time of day

- Next-shot generation and shot extension to continue motion and story beats

Instead of switching tools for each of these steps, you stay in one engine and talk to it in natural language.
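To make the "one engine, many tasks" idea concrete, here is a minimal sketch of how an application might model all of the tasks listed above as a single request type. Everything in it is an assumption made for illustration: the `Task` names, the `VideoRequest` fields and the validation rules are hypothetical and do not come from any published Kling O1 API.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class Task(Enum):
    # Hypothetical task names mirroring the capability list above.
    TEXT_TO_VIDEO = "text_to_video"
    IMAGE_TO_VIDEO = "image_to_video"
    VIDEO_RESTYLE = "video_restyle"
    OBJECT_EDIT = "object_edit"
    ENVIRONMENT_EDIT = "environment_edit"
    SHOT_EXTENSION = "shot_extension"


# Assumption for this sketch: these tasks operate on an existing clip.
NEEDS_VIDEO = {
    Task.VIDEO_RESTYLE,
    Task.OBJECT_EDIT,
    Task.ENVIRONMENT_EDIT,
    Task.SHOT_EXTENSION,
}


@dataclass
class VideoRequest:
    """One request shape for every task: an instruction plus optional references."""
    task: Task
    instruction: str
    reference_images: List[str] = field(default_factory=list)
    source_video: str = ""

    def validate(self) -> None:
        if not self.instruction.strip():
            raise ValueError("instruction must not be empty")
        if self.task in NEEDS_VIDEO and not self.source_video:
            raise ValueError(f"{self.task.value} needs a source video")
        if self.task is Task.IMAGE_TO_VIDEO and not self.reference_images:
            raise ValueError("image_to_video needs at least one reference image")

    def to_payload(self) -> dict:
        # Validate first so malformed requests never leave the application.
        self.validate()
        return {
            "task": self.task.value,
            "instruction": self.instruction,
            "reference_images": list(self.reference_images),
            "source_video": self.source_video or None,
        }


if __name__ == "__main__":
    request = VideoRequest(
        task=Task.SHOT_EXTENSION,
        instruction="Extend this shot down the street, same character, golden hour lighting",
        source_video="street_clip.mp4",
    )
    print(request.to_payload())
```

The point of the single dataclass is that generation and editing differ only in which references are attached, which mirrors the article's claim that they are variations of the same problem.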

One model, many tasks

The key design idea behind Kling O1 is that creation and editing are variations of the same problem. That is why it is built as a unified multimodal model rather than a bundle of separate systems.

From a creator’s perspective, this means you can:

- Start with pure text to sketch a first shot.

- Upload an image to lock in character, outfit or product identity.

- Feed in a short clip and say “extend this shot down the street, same character, golden hour lighting”.

- Remove background crowds, swap weather, or change the visual style in a single prompt.

Because the model tracks multiple characters, props and locations inside the same semantic space, it can maintain identity and continuity across shots. That is crucial for narrative work, ads and branded content where viewers instantly notice when a face, logo or product suddenly changes.

Cinematic output with motion and consistency

Kling O1 targets cinematic quality and temporal coherence rather than just flashy frames. Official and third-party descriptions highlight:

- 1080p output by default, with support for multiple aspect ratios suited to feeds, stories and widescreen.

- Strong subject consistency so characters and products remain stable across complex camera moves.

- Realistic motion and physics for elements like fabric, water, vehicles and crowd movement.

- Camera-aware control to support pans, push-ins, tracking shots and more.

This combination makes it suitable for:

- Short ads and performance creatives

- Social content with recurring characters or mascots

- Teasers and concept scenes for larger stories

- High-quality product and lifestyle clips for ecommerce
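For teams planning deliverables around the 1080p and multiple-aspect-ratio point above, the following sketch computes frame dimensions for a few common ratios. It assumes that "1080p" means the shorter side is 1080 pixels and that dimensions are rounded to even numbers, as most video encoders expect. The specific ratios (1:1 and 4:5 for feeds, 9:16 for stories, 16:9 for widescreen) are common platform conventions, not a confirmed list of what Kling O1 offers.

```python
from fractions import Fraction
from typing import Tuple


def frame_size(aspect: str, short_side: int = 1080) -> Tuple[int, int]:
    """Return (width, height) for an aspect ratio string like '16:9'.

    Assumption: the shorter side is fixed at `short_side` pixels.
    """
    w, h = (int(part) for part in aspect.split(":"))
    ratio = Fraction(w, h)
    if ratio >= 1:
        # Landscape or square: height is the short side.
        width, height = short_side * ratio, Fraction(short_side)
    else:
        # Portrait: width is the short side.
        width, height = Fraction(short_side), short_side / ratio

    def to_even(value: Fraction) -> int:
        # Round to the nearest even integer.
        return int(round(value / 2)) * 2

    return to_even(width), to_even(height)


if __name__ == "__main__":
    for name, aspect in [("widescreen", "16:9"), ("stories", "9:16"),
                         ("square feed", "1:1"), ("portrait feed", "4:5")]:
        print(name, aspect, frame_size(aspect))
```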

A conversational post-production workflow

Traditional editing workflows rely on masking, tracking, keyframing and plug-in chains. Kling O1 moves a lot of that into a conversational interface.

You can say things like:

- “Remove the people in the background and keep the car untouched.”

- “Turn this daytime street into night with neon signs and light rain.”

- “Keep the main character’s outfit but change the environment to a snowy mountain town.”

- “Extend this shot by three seconds, same motion and camera direction.”

The engine interprets text and references together, then reconstructs pixels accordingly. You do not have to draw masks or animate every change by hand, which is a huge time saver for solo creators and small teams.

Where Kling O1 is available

Kling O1 is currently offered through several hosted platforms and APIs, including Kling’s own interface and partner services that provide image-to-video, text-to-video and advanced edit modes inside their tools.

For AI agencies and product teams, this makes it practical to:

- Embed Kling O1 into custom editors and pipelines via API.

- Offer “director-in-a-box” features to clients without reinventing the video model.

- Build specialized workflows around ecommerce, UGC, performance marketing or narrative content.

As the ecosystem matures, expect more integrations inside no-code tools, timelines and collaborative creative platforms.
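Hosted video generation is usually asynchronous, so an integration typically submits a job and polls until the clip is ready. The sketch below shows that pattern in a provider-neutral way. It is an assumption about how such an integration could look, not a description of any real Kling O1 endpoint: the `submit` and `get_status` callables, the `state` and `video_url` fields and the `FakeBackend` class are all hypothetical stand-ins that you would replace with the calls documented by whichever platform you use.

```python
import time
from typing import Callable, Dict


def run_job(
    submit: Callable[[Dict], str],
    get_status: Callable[[str], Dict],
    payload: Dict,
    poll_seconds: float = 2.0,
    timeout_seconds: float = 300.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Submit a generation job and poll until it succeeds, fails or times out."""
    job_id = submit(payload)
    deadline = time.monotonic() + timeout_seconds
    while time.monotonic() < deadline:
        status = get_status(job_id)
        if status["state"] == "succeeded":
            return status["video_url"]
        if status["state"] == "failed":
            raise RuntimeError(status.get("error", "generation failed"))
        sleep(poll_seconds)
    raise TimeoutError(f"job {job_id} did not finish within {timeout_seconds}s")


class FakeBackend:
    """In-memory stand-in for a hosted service so the sketch runs offline."""

    def __init__(self, polls_until_done: int = 2) -> None:
        self.remaining = polls_until_done

    def submit(self, payload: Dict) -> str:
        return "job-1"

    def get_status(self, job_id: str) -> Dict:
        if self.remaining > 0:
            self.remaining -= 1
            return {"state": "running"}
        return {"state": "succeeded",
                "video_url": f"https://example.invalid/{job_id}.mp4"}


if __name__ == "__main__":
    backend = FakeBackend()
    url = run_job(backend.submit, backend.get_status,
                  {"instruction": "Turn this daytime street into night"},
                  poll_seconds=0.1)
    print(url)
```

Passing the transport functions in as arguments keeps the polling logic independent of any one provider, which matters when the same model is reachable through several partner platforms.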

Kling O1 represents a shift from “model zoo plus plugins” to a single, reasoning-based video engine that understands both language and visual references at once. The benefits are clear:

- Less tool switching and format juggling.

- Faster iteration from storyboard to final cut.

- Stronger identity and style consistency across all outputs.

- Lower barrier for individual creators to produce studio-quality clips.

If you are designing AI-powered creative workflows, Kling O1 is the kind of engine that lets you promise end-to-end video capability instead of a collection of disconnected tricks. It turns prompts, images and rough clips into a coherent video conversation, where your instructions guide the story and the model handles the pixels.

Neuronex Intel

System Admin