Mistral 3: Open Models From Laptop To Edge To Frontier

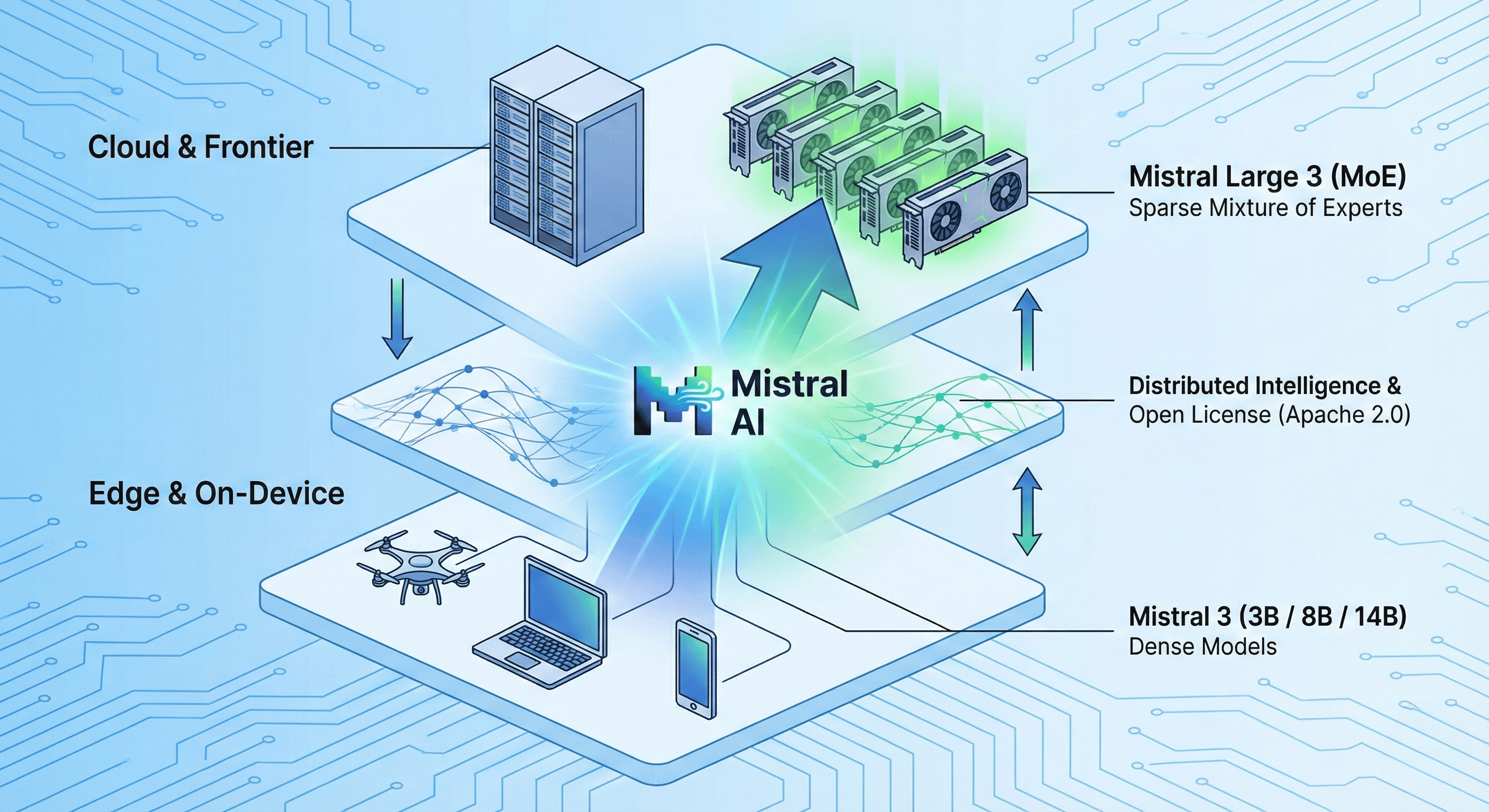

Mistral 3 is a full-stack bet on open, distributed intelligence.

The new release is not a single model but a family: three dense models at roughly 3B, 8B and 14B parameters, plus Mistral Large 3, a sparse Mixture of Experts (MoE) model with 41B active parameters out of a 675B-parameter pool. All of them ship under Apache 2.0, a permissive license that allows commercial use, modification and redistribution.

The goal is clear: make it possible to run serious language models everywhere, from laptops and drones to edge devices and data centers, using one model family and shared tooling.

The Mistral 3 lineup in plain terms

- Ministral 3 3B / 8B / 14B

  - Compact, dense models designed for low latency and small footprints. Well suited to on-device inference, embedded systems, local tools and applications where you do not want to call an external API for every token.

- Mistral Large 3

  - Sparse MoE architecture with 41B parameters active per token out of a 675B-parameter expert pool. This is the frontier-level member of the family, built for complex reasoning, enterprise copilots and heavier workloads, while still being optimized for efficiency.

All of these models come with compressed formats and tooling designed for practical deployment.
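To make the size differences concrete, here is a minimal back-of-the-envelope sketch of weight storage at a few bit widths, plus the share of Large 3's parameters that is active per token. The parameter counts are the approximate figures quoted above. The bit widths (16, 8, 4) are illustrative assumptions rather than a list of officially shipped formats, and the estimate covers weights only, not KV cache or activations.

```python
# Rough weight-only memory estimate: parameters * bits per weight / 8 bytes.
# Parameter counts are the approximate figures from the article; the bit
# widths are illustrative assumptions, not officially confirmed formats.

PARAMS_BILLIONS = {
    "3B dense": 3,
    "8B dense": 8,
    "14B dense": 14,
    "Large 3 (full expert pool)": 675,
}


def weight_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate weight storage in gigabytes (1 GB = 10^9 bytes)."""
    return params_billions * bits_per_weight / 8


def active_fraction(active_billions: float, total_billions: float) -> float:
    """Share of a sparse model's parameters used for each token."""
    return active_billions / total_billions


if __name__ == "__main__":
    for name, size in PARAMS_BILLIONS.items():
        row = ", ".join(
            f"{bits}-bit: {weight_gb(size, bits):.1f} GB" for bits in (16, 8, 4)
        )
        print(f"{name}: {row}")
    print(f"Large 3 active share per token: {active_fraction(41, 675):.1%}")
```

Under these assumptions the 3B model needs about 1.5 GB for weights at 4 bits, which is laptop territory, while Large 3 touches only about 6% of its parameters per token. Note that in a sparse MoE the whole expert pool generally still has to be stored somewhere, so the 41B active figure mainly describes compute per token, not storage.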

Optimized for NVIDIA and beyond

Mistral has worked with NVIDIA to optimize the Mistral 3 family for NVIDIA GPUs across cloud and edge, which fits the broader vision of distributed intelligence.

That means:

- Higher throughput on common NVIDIA GPU setups.

- Easier integration into existing CUDA-based infrastructure.

- A cleaner path from experimentation to production for teams already running GPU clusters.

At the same time, the open weights and Apache 2.0 licensing mean you can target other accelerator ecosystems and CPU-heavy environments where needed.

Why Mistral 3 is important for builders

Three big advantages stand out:

- Freedom

  - Apache 2.0 licensing lets you embed, fine-tune and redistribute the models as part of your own products, provided you keep the required license and attribution notices.

- Coverage

  - With one family you can cover offline, local, edge and cloud workloads, instead of juggling unrelated models.

- Cost control

  - You can deploy the right size of model for each use case, with small models on edge devices and Large 3 for central reasoning services, and avoid overpaying for frontier capability where you do not need it.
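The cost-control idea can be sketched as a small routing rule that picks the smallest tier that satisfies a workload. This is only an illustration: the tier labels, the `Workload` fields and the footprint numbers (assumed 4-bit weight sizes) are hypothetical, not official model identifiers or sizing guidance.

```python
from dataclasses import dataclass


# Hypothetical workload description; the fields are illustrative assumptions.
@dataclass(frozen=True)
class Workload:
    runs_offline: bool          # must work without network access
    memory_budget_gb: float     # memory available for model weights
    needs_deep_reasoning: bool  # complex, multi-step tasks


# (hypothetical tier label, assumed 4-bit weight footprint in GB), smallest first
DENSE_TIERS = [("dense-3b", 1.5), ("dense-8b", 4.0), ("dense-14b", 7.0)]
CENTRAL_TIER = "large-3-service"  # hypothetical name for a hosted Large 3 endpoint


def pick_tier(w: Workload) -> str:
    """Send heavy online work to the central service, else use the largest dense tier that fits."""
    if w.needs_deep_reasoning and not w.runs_offline:
        return CENTRAL_TIER
    fitting = [name for name, gb in DENSE_TIERS if gb <= w.memory_budget_gb]
    if not fitting:
        raise ValueError("no dense tier fits the memory budget")
    return fitting[-1]


if __name__ == "__main__":
    print(pick_tier(Workload(runs_offline=True, memory_budget_gb=2.0, needs_deep_reasoning=False)))
    print(pick_tier(Workload(runs_offline=False, memory_budget_gb=64.0, needs_deep_reasoning=True)))
```

An offline device with a 2 GB budget lands on the smallest dense tier, while an online task that needs deep reasoning is sent to the central service. A real deployment would likely also weigh latency targets and per-token cost.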

For an AI agency, Mistral 3 is a strong fit for projects where clients demand open, inspectable models and control over their infrastructure, especially in Europe and in regulated industries.

Neuronex Intel

System Admin