Mistral Devstral 2: Repo-Scale Open Coding Engine For Autonomous Agents


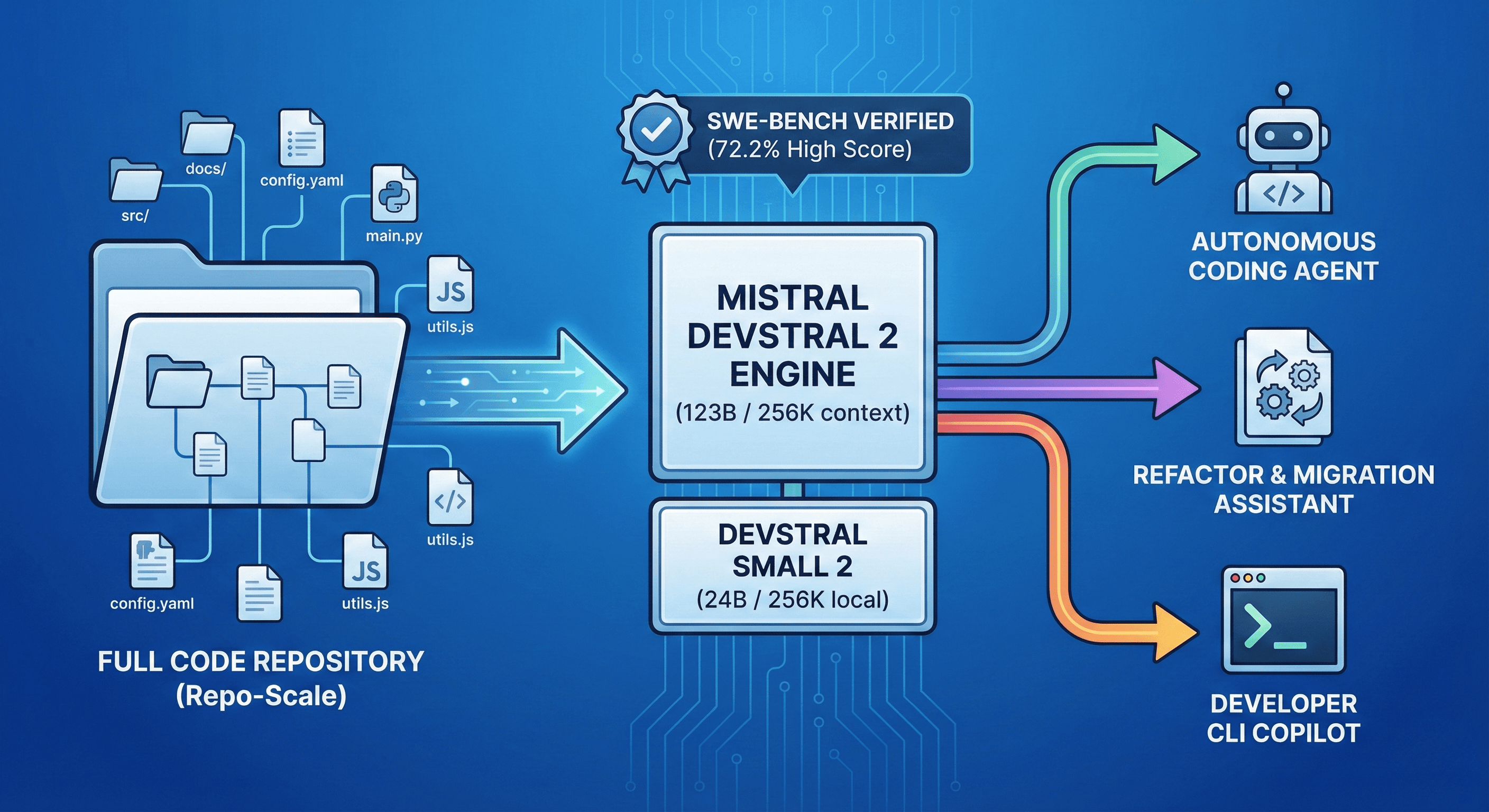

Most “AI coders” are autocomplete with good PR. Mistral’s Devstral 2 is built for something else: agents that can actually work across full repositories, fix real issues and keep going for hours without running into context walls.

Devstral 2 is a 123B-parameter dense transformer with a 256K-token context window, released as an open-weight coding model tuned specifically for long-context software engineering tasks. On SWE-Bench Verified, a human-validated set of 500 real GitHub issues drawn from Python repositories, it resolves 72.2% of tasks. That puts it at the top of the open-weight coding stack and close to several proprietary giants.

Alongside it, Mistral ships Devstral Small 2, a 24B-parameter variant that keeps the same 256K context but is light enough to run on a single high-end GPU or a strong workstation. It scores roughly 68% on SWE-Bench Verified.

Together, they form a two-tier coding engine for everything from cloud-scale agents to local dev workflows.

What Devstral 2 actually is

Devstral 2 is not a generic chat model with some code training sprinkled on top. It is:

- A 123B dense coding model trained for repository-scale understanding

- Able to ingest and reason over up to 256K tokens in one context

- Targeted at autonomous or semi-autonomous coding agents, not just IDE completion

- Released as open weights under a modified MIT license, with Devstral Small 2 under Apache 2.0

That combination means you can hand it huge slices of a codebase, let it plan changes across files and keep the entire reasoning trace in-context without constantly truncating.
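To get a feel for what 256K tokens buys, here is a minimal Python sketch that greedily packs source files into one prompt under a token budget. It assumes roughly 4 characters per token, a common rule of thumb and not Devstral's actual tokenizer. The 16K reserve for the model's reply and the names `pack_files` and `estimate_tokens` are hypothetical choices for illustration.

```python
from dataclasses import dataclass, field

CONTEXT_TOKENS = 256_000  # Devstral 2 / Devstral Small 2 context window
CHARS_PER_TOKEN = 4       # assumption: rough heuristic, not Devstral's tokenizer


def estimate_tokens(text: str) -> int:
    """Approximate the token count from character length, rounding up."""
    return -(-len(text) // CHARS_PER_TOKEN)


@dataclass
class PackResult:
    included: list[str] = field(default_factory=list)
    skipped: list[str] = field(default_factory=list)
    used_tokens: int = 0


def pack_files(files: dict[str, str], reserve_for_output: int = 16_000) -> PackResult:
    """Greedily add files, smallest first, until the prompt budget is spent.

    `files` maps a path to its contents. `reserve_for_output` keeps room for the
    model's plan and edits; the 16K default is a hypothetical choice.
    """
    budget = CONTEXT_TOKENS - reserve_for_output
    result = PackResult()
    for path, text in sorted(files.items(), key=lambda kv: len(kv[1])):
        cost = estimate_tokens(text)
        if result.used_tokens + cost <= budget:
            result.included.append(path)
            result.used_tokens += cost
        else:
            result.skipped.append(path)
    return result


if __name__ == "__main__":
    repo = {"a.py": "x" * 400_000, "b.py": "y" * 800_000, "README.md": "z" * 40}
    r = pack_files(repo)
    print(r.included, r.skipped, r.used_tokens)  # ['README.md', 'a.py'] ['b.py'] 100010
```

Smallest-first maximizes the number of files that fit; a real agent would more likely rank files by relevance to the task (for example, files named in the issue) before applying the same budget check.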

Why the 256K context actually matters

A long context window is not a flex; it is a capability. At 256K tokens, Devstral 2 and Small 2 can:

- Ingest large chunks of a monorepo, docs and config in a single session

- Track cross-file dependencies during refactors instead of guessing

- Maintain long-running agent loops that read code, run tools, update files and iterate without forgetting earlier steps

- Handle multi-issue debugging sessions where logs, traces and source all stay in view

For agentic workflows, this is the difference between a "nice demo" and a practical stand-in for an engineer on certain classes of tasks.

SWE-Bench performance and why it matters

SWE-Bench Verified is brutal by design. It asks the model to:

- Read a real GitHub issue

- Inspect the repo

- Apply code changes across the relevant files

- Pass the repository's tests: the tests tied to the issue must now pass, and the existing tests must not break

Devstral 2's 72.2% there means it resolves roughly seven in ten of these real issues in large, messy codebases, which is a very different skill from writing clean toy functions in isolation. Devstral Small 2's roughly 68% at 24B parameters is equally significant: it keeps pace with models up to five times its size.
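The scoring rule behind those percentages is simple to state in code. This Python sketch mirrors how the SWE-Bench harness judges an instance: the issue's target tests (the dataset's `FAIL_TO_PASS` list) must pass after the patch, and the previously passing tests (`PASS_TO_PASS`) must not regress. The data classes and helper names are illustrative, not the harness's own types. The last helper converts the reported scores into issue counts over Verified's 500 instances.

```python
from dataclasses import dataclass

VERIFIED_SIZE = 500  # number of instances in SWE-Bench Verified


@dataclass
class InstanceResult:
    fail_to_pass: dict[str, bool]  # target tests: do they pass after the patch?
    pass_to_pass: dict[str, bool]  # existing tests: do they still pass?


def is_resolved(r: InstanceResult) -> bool:
    """Resolved only if every target test passes and nothing regressed."""
    return all(r.fail_to_pass.values()) and all(r.pass_to_pass.values())


def resolve_rate(results: list[InstanceResult]) -> float:
    """Percentage of instances resolved."""
    return 100.0 * sum(is_resolved(r) for r in results) / len(results)


def issues_resolved(rate_pct: float, total: int = VERIFIED_SIZE) -> int:
    """Convert a reported percentage back into an approximate issue count."""
    return round(total * rate_pct / 100)


if __name__ == "__main__":
    print(issues_resolved(72.2))  # Devstral 2 -> 361
    print(issues_resolved(68.0))  # Devstral Small 2 -> 340
```

In other words, 72.2% corresponds to about 361 of 500 issues and 68% to about 340, a gap of roughly 21 issues between the two tiers.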

In practice, this makes Devstral 2 one of the strongest open-weight coding models available, and Devstral Small 2 the most capable “local-scale” open model for repo-level work.

Two-tier deployment: cloud muscle and local control

Devstral’s design is clearly split into two deployment tiers:

- Devstral 2 (123B) for cloud, API and datacenter-scale inference

- Devstral Small 2 (24B) for local, on-prem or VPC environments

Because both share the same 256K context and similar behavior, you can design one agent architecture and deploy it at different power levels. For example:

- Run Devstral 2 in your main coding agent backend for the hardest, highest-value tasks

- Use Devstral Small 2 on developer laptops or internal servers for interactive work, offline coding assistants and privacy-sensitive codebases
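Because both tiers share the same context size, the split can be a single routing function in front of an otherwise identical agent. The Python sketch below is one hypothetical policy: the model labels are placeholders for whatever IDs your deployment uses, and the difficulty scale and thresholds are illustrative assumptions, not Mistral guidance.

```python
from dataclasses import dataclass

# Hypothetical tier labels; substitute the model IDs of your own deployment.
LARGE = "devstral-2"        # 123B: cloud, API, datacenter
SMALL = "devstral-small-2"  # 24B: laptops, on-prem servers, VPC


@dataclass
class Task:
    description: str
    private_code: bool = False  # code must not leave your infrastructure
    interactive: bool = False   # a developer is waiting on the answer
    difficulty: int = 1         # 1 (trivial) .. 5 (hard), your own estimate


def pick_tier(task: Task, large_is_self_hosted: bool = False) -> str:
    """Route a task to one of the two Devstral tiers (illustrative policy)."""
    if task.private_code and not large_is_self_hosted:
        return SMALL  # keep sensitive code on local hardware
    if task.interactive and task.difficulty <= 3:
        return SMALL  # latency matters more than peak quality here
    return LARGE if task.difficulty >= 3 else SMALL
```

Everything downstream (prompts, tools, the 256K context budget) stays the same; only the model ID changes, which is what makes the "one architecture, two power levels" design cheap to maintain.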

Open-weight access means you are not locked into a single vendor. You can self-host, deploy in your own VPC, or mix API access with local inference.
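Mixing hosted and local inference is easiest when both speak the same request format. This Python sketch builds, but does not send, an OpenAI-style chat completion request for either Mistral's hosted API or a self-hosted server. It assumes the self-hosted side is something like vLLM's OpenAI-compatible server on its default port 8000; the model IDs passed in and the temperature value are placeholders, not confirmed settings.

```python
import json
import urllib.request

# Assumption: both targets accept OpenAI-style chat completion requests.
ENDPOINTS = {
    "hosted": "https://api.mistral.ai/v1/chat/completions",
    "self_hosted": "http://localhost:8000/v1/chat/completions",  # e.g. a local vLLM server
}


def build_request(target: str, model: str, prompt: str, api_key: str = "") -> urllib.request.Request:
    """Build (but do not send) a chat completion request for either target."""
    body = {
        "model": model,  # placeholder ID; use the name your server or API exposes
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.2,  # illustrative setting
    }
    headers = {"Content-Type": "application/json"}
    if api_key:  # the hosted API needs a key; a local server may not
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        ENDPOINTS[target],
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )
```

Once a server is reachable, `urllib.request.urlopen(req)` sends the request. Switching vendors or moving in-house then comes down to changing `target` and the model ID rather than rewriting the agent.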

What this unlocks

Devstral 2 is built for people who are not satisfied with “AI suggests a function and you paste it in.” It unlocks fully automated or human-in-the-loop pipelines that look more like a junior engineer with infinite patience.

With Devstral 2 and Small 2 you can realistically build systems that:

- Spin up agents which clone a repo, analyze architecture and propose structured change plans

- Apply multi-file edits and refactors while keeping project-wide context in memory

- Run tests, interpret failures and iterate automatically until green

- Use tools like ripgrep, linters, formatters and static analyzers as part of their loop

- Maintain long-lived sessions over a single codebase, with the entire conversation and change history in context
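The core of such a system is a propose, apply, test loop. The Python sketch below shows that shape with the model, the file writer and the test runner injected as callables; `propose_patch`, `apply_patch` and `run_tests` are hypothetical hooks you would back with a Devstral call, a patch tool and your test command. It keeps the full history in the conversation rather than summarizing it, which is the pattern a 256K window makes affordable.

```python
from typing import Callable

Message = dict[str, str]


def agent_loop(
    issue: str,
    propose_patch: Callable[[list[Message]], str],  # hypothetical: wraps a Devstral call
    apply_patch: Callable[[str], None],             # hypothetical: writes edits to disk
    run_tests: Callable[[], tuple[bool, str]],      # returns (all_passed, log_text)
    max_iterations: int = 5,
) -> tuple[bool, list[Message]]:
    """Propose, apply, test, and feed failures back until the suite is green.

    The whole history (issue, every patch, every failing log) is resent on
    each turn instead of being summarized away.
    """
    history: list[Message] = [{"role": "user", "content": issue}]
    for _ in range(max_iterations):
        patch = propose_patch(history)
        history.append({"role": "assistant", "content": patch})
        apply_patch(patch)
        passed, log = run_tests()
        if passed:
            return True, history
        history.append({"role": "user", "content": f"Tests failed:\n{log}"})
    return False, history


if __name__ == "__main__":
    applied: list[str] = []
    ok, hist = agent_loop(
        "Fix the crash in parse_config()",
        propose_patch=lambda h: f"patch v{len(applied) + 1}",  # stub model
        apply_patch=applied.append,
        run_tests=lambda: (len(applied) >= 2, "AssertionError in test_parse"),
    )
    print(ok, applied)  # True ['patch v1', 'patch v2']
```

Tools such as ripgrep or a linter would typically be exposed as further callables the model can choose between; this sketch hard-wires a fixed cycle to keep the control flow visible, and `max_iterations` caps cost if the suite never goes green.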

On cost, Mistral openly positions Devstral 2 as up to several times cheaper than premium proprietary coding models on real workloads, while remaining competitive on quality.

Where Devstral fits in a modern AI stack

If you are serious about code automation, Devstral fits best in roles like:

- The main coding brain inside an autonomous agent stack

- A repo-scale refactoring and migration assistant

- A backend engine for “vibe coding” CLIs and terminal-first agents

- A local-first copilot for teams who do not want their entire codebase leaving their infra

The smart move is to put Devstral 2 and Small 2 in your evaluation pool alongside DeepSeek, Gemini, Claude and GPT-based coders, then route tasks based on strength and cost for your specific repos.
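One way to make "route by strength and cost" concrete is to measure each candidate on a sample of your own tasks and compute cost per resolved task, since failed attempts still cost money. The Python sketch below picks the cheapest model by that measure among those that clear a success-rate bar. The class, the threshold and any numbers you feed it are your own measurements; nothing here reflects published prices or scores.

```python
from dataclasses import dataclass


@dataclass
class EvalResult:
    model: str
    resolved: int              # tasks solved in YOUR eval set
    attempted: int
    avg_cost_per_task: float   # your measured spend per attempt, any currency

    @property
    def success_rate(self) -> float:
        return self.resolved / self.attempted

    @property
    def cost_per_resolved(self) -> float:
        # Spread total spend (including failed attempts) over the successes.
        if self.resolved == 0:
            return float("inf")
        return self.avg_cost_per_task * self.attempted / self.resolved


def choose_model(results: list[EvalResult], min_success: float) -> str:
    """Cheapest model per solved task among those meeting the quality bar."""
    eligible = [r for r in results if r.success_rate >= min_success]
    if not eligible:
        raise ValueError("no model meets the success threshold")
    return min(eligible, key=lambda r: r.cost_per_resolved).model
```

Running this per repository (or per task type) rather than once globally is the point: a model that is mediocre overall can still be the cheapest acceptable option for a particular codebase.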

The key point: Devstral is not a toy. It is infrastructure. If you care about building serious coding agents over real repositories, ignoring it at this point is just leaving performance and cost savings on the table.

Neuronex Intel

System Admin