OpenAI Circuit Sparsity: The Open-Source Toolkit for Finding Task-Specific “Circuits” Inside Models

What “circuit sparsity” is trying to do

Circuit sparsity is the idea that a model’s behavior on a specific task can often be explained and reproduced by a much smaller subnetwork. Not “the whole model is small,” but “the part of the model doing this job is small.”

That matters because it gives you two big wins at once:

- interpretability: you can inspect what parts of the model matter for a task

- controllability: you can measure what breaks when you remove specific components
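Here is a toy sketch of the idea. It uses hypothetical numbers and component names and is not code from the repo. The model's output on one task is treated as a sum of per-component contributions, a circuit is a binary mask over those components, and the masked output is compared against the full model. A faithful circuit leaves the output almost unchanged. Masking out the circuit itself is what breaks the behavior.

```python
# Toy illustration of circuit sparsity (hypothetical numbers, not the repo's code):
# the model's task output is a sum of per-component contributions, and a
# "circuit" is the subset of components a binary mask keeps.


def masked_output(contributions: dict[str, float], keep: set[str]) -> float:
    """Sum only the contributions of components kept by the mask."""
    return sum(v for name, v in contributions.items() if name in keep)


def faithfulness_gap(contributions: dict[str, float], keep: set[str]) -> float:
    """Absolute difference between the full model's output and the circuit's."""
    full = sum(contributions.values())
    return abs(full - masked_output(contributions, keep))


# Hypothetical per-component contributions to one task's logit.
contributions = {
    "attn0.h3": 2.0,
    "mlp1.n17": 1.5,
    "attn2.h0": 0.02,
    "mlp3.n5": -0.01,
    "attn4.h7": 0.01,
    "mlp5.n9": -0.02,
}

circuit = {"attn0.h3", "mlp1.n17"}          # 2 of 6 components
complement = set(contributions) - circuit    # everything outside the circuit

print(faithfulness_gap(contributions, circuit))     # near zero: circuit reproduces the behavior
print(faithfulness_gap(contributions, complement))  # large: dropping the circuit breaks it
```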

What the Circuit Sparsity repo actually gives you

This repo is a practical toolkit for working with sparse, task-specific circuits extracted through pruning.

It gives you:

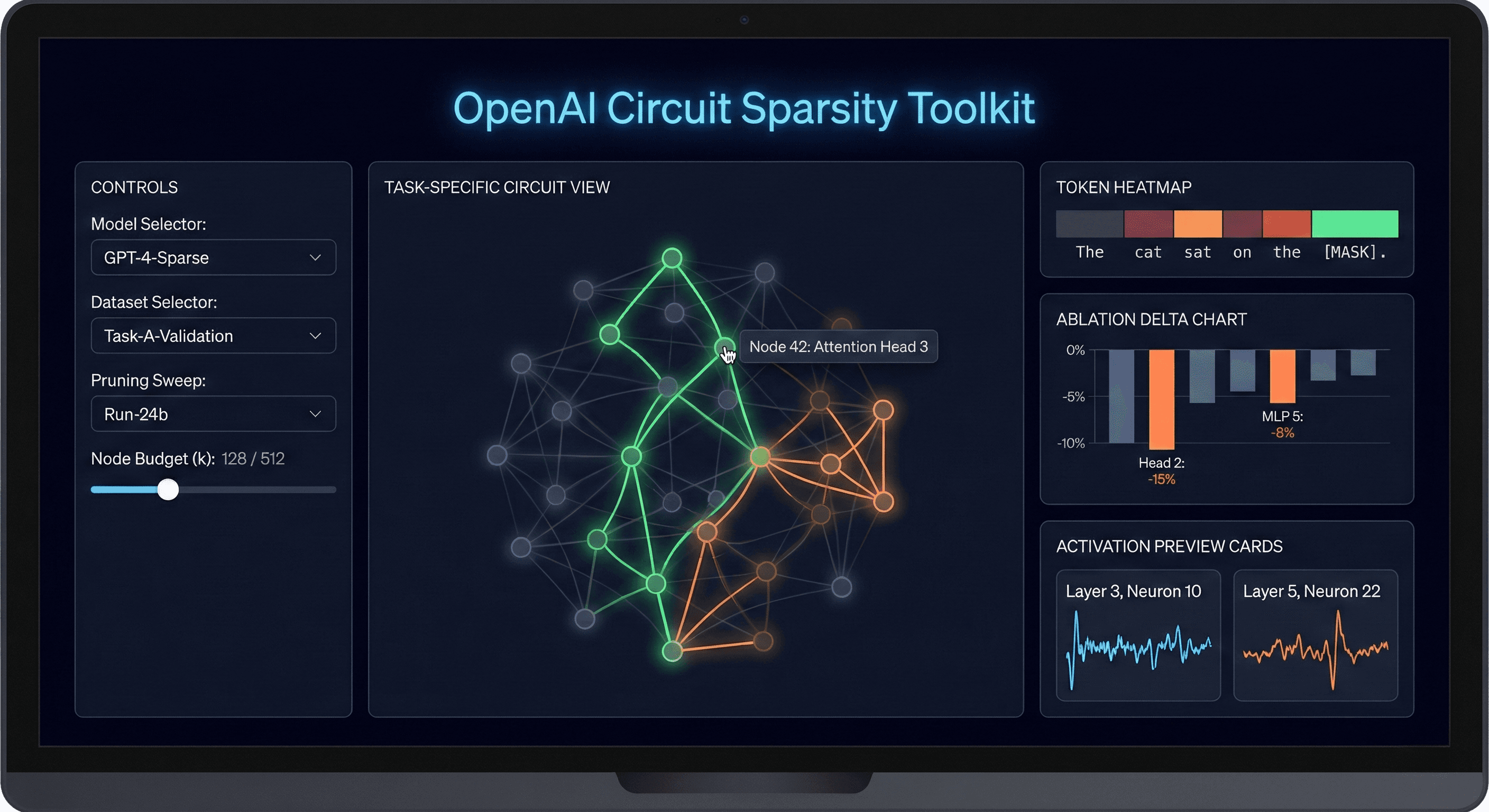

- a Streamlit visualizer that lets you explore circuits interactively

- code for running forward passes on the provided sparse models

- utilities for recording activations through hooks

- token-level visualization demos

- a structured data layout for models and visualization artifacts

- cache management utilities so you can refresh fetched artifacts cleanly

The Visualizer: what you can do inside the UI

The Streamlit app is the main “wow” factor because it makes circuit inspection usable without spending your life in notebooks.

Inside the visualizer you can typically:

- choose a model and task dataset

- pick a pruning sweep / experiment run

- set a node budget k (how big the circuit is allowed to be)

- inspect interactive plots (hover, click, drill down)

- view circuit masks, activation previews, and ablation deltas

- explore token-level behaviors where the model’s circuit “lights up”

This is exactly the kind of interface that makes mechanistic interpretability feel less like academic suffering and more like a real tool.
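The node budget k can be read as a cap on circuit size. This sketch assumes each node already has an importance score. The repo's actual scoring and pruning method is not covered here, so the scores and node names below are hypothetical. Under that assumption, turning a budget into a circuit mask means keeping the k highest-scoring nodes:

```python
import heapq


def circuit_mask(scores: dict[str, float], k: int) -> dict[str, bool]:
    """Return a node -> kept? mask that keeps the k highest-scoring nodes.

    Nodes are ranked by absolute score, so strong negative contributors count
    as important too (an assumption of this sketch, not necessarily the repo's rule).
    """
    if k < 0:
        raise ValueError("node budget k must be non-negative")
    top = set(heapq.nlargest(k, scores, key=lambda n: abs(scores[n])))
    return {node: node in top for node in scores}


# Hypothetical importance scores for a handful of nodes.
scores = {"attn0.h3": 0.9, "mlp1.n17": -0.7, "attn2.h0": 0.05, "mlp3.n5": 0.3}

mask = circuit_mask(scores, k=2)
print([n for n, kept in mask.items() if kept])
```

Ranking by absolute score is a design choice in this sketch. A node that strongly pushes the output the wrong way matters to the behavior as much as one that pushes it the right way.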

Running inference and capturing activations

The repo includes a lightweight GPT-style inference implementation and helpers for introspection.

Common patterns it supports:

- load a model from a directory of checkpoints/config

- run a forward pass to get logits and loss

- wrap execution in a hook recorder to capture internal activations

- analyze how activations change under pruning, masking, and ablation

If you care about “what changed” between two behaviors, this is the right kind of scaffolding.
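The repo's own recorder API is not reproduced here. The following is a framework-free sketch of the hook-recorder pattern: a context manager registers a callback, stores every named layer's output during a forward pass, and detaches the callback afterwards. `ToyModel` and `record_activations` are hypothetical names. In PyTorch, the same pattern is usually built on `nn.Module.register_forward_hook`.

```python
from contextlib import contextmanager
from typing import Callable, Iterator

Layer = Callable[[list[float]], list[float]]


class ToyModel:
    """Hypothetical stand-in for a GPT-style stack: named layers applied in order."""

    def __init__(self, layers: dict[str, Layer]):
        self.layers = layers
        self.hooks: list[Callable[[str, list[float]], None]] = []

    def forward(self, x: list[float]) -> list[float]:
        for name, layer in self.layers.items():
            x = layer(x)
            for hook in self.hooks:  # notify every registered hook after each layer
                hook(name, x)
        return x


@contextmanager
def record_activations(model: ToyModel) -> Iterator[dict[str, list[float]]]:
    """Capture each layer's output while the context is active."""
    store: dict[str, list[float]] = {}

    def hook(name: str, out: list[float]) -> None:
        store[name] = list(out)  # copy so later mutation can't alter the record

    model.hooks.append(hook)
    try:
        yield store
    finally:
        model.hooks.remove(hook)  # always detach, even if the forward pass raises


model = ToyModel({
    "embed": lambda x: [2 * v for v in x],
    "mlp": lambda x: [max(0.0, v - 1.0) for v in x],  # shift, then ReLU
    "unembed": lambda x: [sum(x)],
})

with record_activations(model) as acts:
    logits = model.forward([0.25, 1.0, 1.5])

print(acts["mlp"], logits)
```

Detaching in `finally` keeps the model clean between runs. A forgotten hook would otherwise keep recording and silently skew later comparisons.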

Why this matters for builders and automators

Most AI systems fail in boring ways: silent regressions, inconsistent outputs, weird edge-case behavior, and “it worked yesterday” disasters.

Circuit sparsity tooling helps with that because it enables:

- debugging models by isolating the minimal components driving behavior

- auditing behavior changes after fine-tuning or prompt shifts

- understanding failure modes through targeted ablation, not guessing

- building evaluation cases that track which internal components matter

- turning model behavior into something you can inspect and explain

For automation-heavy teams, this is not just “interpretability research.” It’s a path to more reliable production behavior.
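Targeted ablation reduces to a simple loop: remove one component, re-measure the task loss, and record the change. Below is a minimal zero-ablation sketch on hypothetical toy data. It is not how the repo computes its ablation deltas. Real tooling would intervene inside the network and might use mean ablation instead of zeroing.

```python
def task_loss(
    contributions: dict[str, float],
    target: float,
    ablated: frozenset[str] = frozenset(),
) -> float:
    """Squared error of the summed output with the `ablated` components zeroed out."""
    out = sum(v for name, v in contributions.items() if name not in ablated)
    return (out - target) ** 2


def ablation_deltas(contributions: dict[str, float], target: float) -> dict[str, float]:
    """Loss increase caused by zero-ablating each component on its own."""
    base = task_loss(contributions, target)
    return {
        name: task_loss(contributions, target, frozenset({name})) - base
        for name in contributions
    }


# Hypothetical contributions of three components to one task's output.
contributions = {"attn0.h3": 2.0, "mlp1.n17": 1.0, "attn2.h0": 0.0}
deltas = ablation_deltas(contributions, target=3.0)

# Rank components by how much the loss rises when each one is removed.
for name, delta in sorted(deltas.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: +{delta:.2f} loss when ablated")
```

Sorting components by their delta gives a ranked list of what the behavior depends on. That ranking is evidence you can act on when diagnosing a failure, rather than a guess.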

Real-world use cases that are not academic cosplay

Here’s where this gets practical fast:

- safety reviews: identify what internal pathways drive risky outputs on a task

- compliance narratives: produce clearer explanations of why a system behaved a certain way

- model compression research: explore whether sparse circuits can preserve task performance

- product QA: detect drift by monitoring circuit-level changes over time

- debugging agent workflows: when tools fail, isolate whether reasoning or retrieval changed
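One simple option for drift monitoring is to extract the circuit for the same task from two model versions and compare the node sets. This is an assumption, not a feature the repo is described as having. The Jaccard similarity and the 0.8 alert threshold below are illustrative choices, and the node names are hypothetical:

```python
def jaccard(a: set[str], b: set[str]) -> float:
    """Overlap of two node sets: |A ∩ B| / |A ∪ B| (1.0 if both are empty)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def circuit_drift_report(old: set[str], new: set[str], threshold: float = 0.8) -> dict:
    """Compare the circuits extracted for one task from two model versions."""
    similarity = jaccard(old, new)
    return {
        "similarity": similarity,
        "dropped": sorted(old - new),  # nodes only in the old circuit
        "added": sorted(new - old),    # nodes only in the new circuit
        "drifted": similarity < threshold,
    }


# Hypothetical circuits for the same task before and after a fine-tune.
old_circuit = {"attn0.h3", "mlp1.n17", "attn2.h0"}
new_circuit = {"attn0.h3", "mlp1.n17", "mlp3.n5"}

report = circuit_drift_report(old_circuit, new_circuit)
print(report)
```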

Quickstart: get it running locally

If you want a fast spin-up workflow, it’s basically:

- install the package in editable mode

- run the Streamlit visualizer

- run the tests to verify that the inference code works

    pip install -e .
    streamlit run circuit_sparsity/viz.py
    pytest tests/test_gpt.py

If you are working iteratively and want fresh artifacts, the cache clear utility is the kind of small detail that saves hours of confusion.
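The repo's actual cache-clearing utility and cache location are not described here. This is only a generic sketch of what refreshing fetched artifacts amounts to: delete the local cache directory so the next run downloads fresh copies. The directory name is hypothetical. The demo uses a throwaway temp directory, so it is safe to run.

```python
import shutil
import tempfile
from pathlib import Path


def clear_cache(cache_dir: Path) -> bool:
    """Delete a local artifact cache so the next run re-fetches fresh copies.

    Returns True if a cache directory was removed, False if none existed.
    """
    if not cache_dir.exists():
        return False
    shutil.rmtree(cache_dir)
    return True


# Demo against a throwaway directory standing in for the real cache location.
cache = Path(tempfile.mkdtemp()) / "circuit_sparsity_cache"  # hypothetical name
(cache / "models").mkdir(parents=True)
(cache / "models" / "config.json").write_text("{}")

print(clear_cache(cache), cache.exists())
print(clear_cache(cache))
```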

The bigger takeaway

Open-sourcing this toolkit is a signal: interpretability is shifting from “paper-only” to “developer-grade tooling.” If you can inspect a task-specific circuit, you can start treating model behavior like software behavior: observable, testable, and debuggable.

That’s the difference between “AI magic” and “AI engineering.”

Neuronex Intel

System Admin