Trainium 3: The AWS AI Chip Behind Cheaper Frontier Models

Everyone obsesses over models. Hardware quietly decides who can afford to train them.

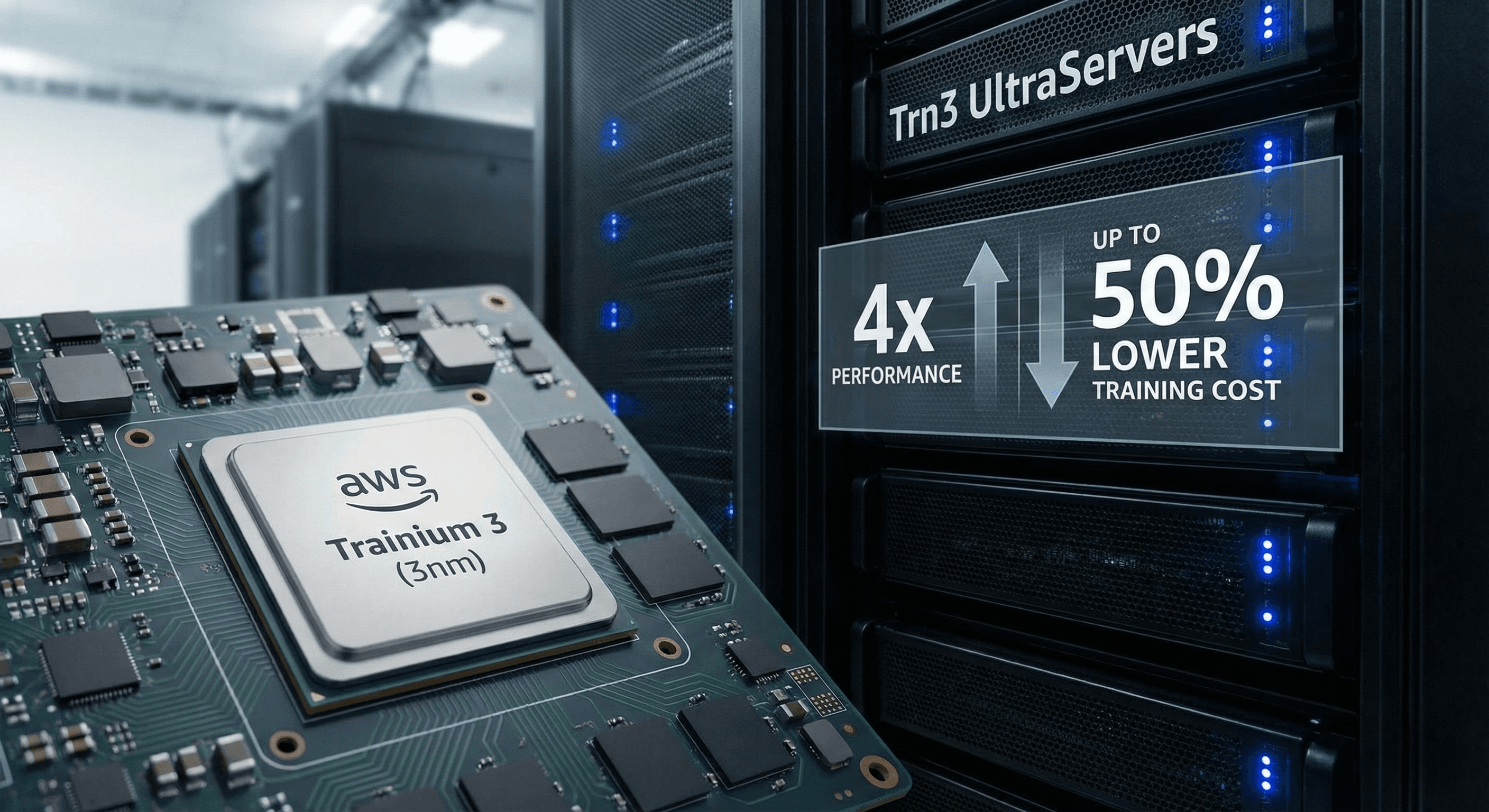

AWS’s new Trainium 3 chip is built to make large-scale training and inference cheaper and faster for cloud customers. It is a 3-nanometer AI accelerator that powers the new EC2 Trn3 UltraServers, and it is already used in production by companies such as Anthropic.

AWS claims Trainium 3 delivers up to four times the performance of the previous generation and can cut training costs by around 50 percent for some workloads.

What Trainium 3 actually offers

According to AWS announcements and press coverage, Trainium 3 brings:

- Higher performance per chip: more compute, better memory bandwidth, and improvements tailored to transformer-style workloads.

- Lower cost per trained token: by boosting throughput and energy efficiency, it reduces the per-step cost of training and large-batch inference.

- Tight integration with Bedrock and EC2: Trn3 UltraServers can be used via managed services or more directly on EC2, making it easier to plug Trainium into existing pipelines.

This positions Trainium 3 as an alternative or complement to GPU-heavy setups, especially for companies already heavily invested in AWS.
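To see how throughput and price combine into a cost per token, here is a minimal Python sketch. The hourly prices and throughput figures are made up for illustration; they are not AWS pricing or measured Trainium 3 numbers, and `cost_per_million_tokens` is a hypothetical helper, not part of any AWS SDK.

```python
def cost_per_million_tokens(hourly_price_usd: float, tokens_per_second: float) -> float:
    """Return the USD cost of processing one million tokens on one instance."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    tokens_per_hour = tokens_per_second * 3600
    return hourly_price_usd / tokens_per_hour * 1_000_000


# Hypothetical inputs: an older instance versus one with four times the
# throughput that costs twice as much per hour.
baseline = cost_per_million_tokens(hourly_price_usd=40.0, tokens_per_second=100_000)
faster = cost_per_million_tokens(hourly_price_usd=80.0, tokens_per_second=400_000)

# Fraction of per-token cost saved by moving to the faster instance.
savings = 1 - faster / baseline

print(f"baseline: ${baseline:.4f} per million tokens")
print(f"faster:   ${faster:.4f} per million tokens")
print(f"savings:  {savings:.0%}")
```

Under these assumed inputs, a fourfold throughput gain at double the hourly price halves the cost per token. The real saving depends on actual instance pricing and on how much of the peak throughput your workload achieves.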

Who is using Trainium 3

Anthropic is one of the headline customers, reportedly using Trainium 3 to train and serve parts of its model lineup on Amazon Bedrock. Other enterprises are looking at Trainium to support heavy workloads in areas like:

- Healthcare imaging and genomics

- Biomarker discovery and molecular simulations

- Large-scale clinical and scientific data processing

The point is not that GPUs disappear, but that the economics of frontier training change as new chips arrive.

Why Trainium 3 matters for AI builders

Even if you never touch bare metal, Trainium 3 affects you:

- Model prices: if training and serving costs drop, API pricing and SaaS margins can shift. That opens the door to cheaper access to large models.

- Deployment options: with more competition in accelerators, you can design architectures that target different chips for different workloads, balancing cost, latency and availability.

- Risk diversification: depending on a single vendor’s GPUs is a single point of failure. Trainium 3 gives AWS and its customers more leverage and redundancy.
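The idea of targeting different chips for different workloads can be sketched as a simple selection rule: filter by availability and a latency budget, then take the cheapest option. Everything below is hypothetical, including the `AcceleratorOption` type, the backend names and all the numbers; it illustrates the trade-off rather than any real scheduler or AWS API.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class AcceleratorOption:
    """One candidate backend for a workload (all fields are illustrative)."""
    name: str
    cost_per_million_tokens: float  # USD, assumed figure
    p95_latency_ms: float           # assumed figure
    available: bool                 # e.g. capacity in the target region


def pick_accelerator(
    options: Sequence[AcceleratorOption], max_latency_ms: float
) -> Optional[AcceleratorOption]:
    """Return the cheapest available option within the latency budget, or None."""
    candidates = [
        o for o in options if o.available and o.p95_latency_ms <= max_latency_ms
    ]
    if not candidates:
        return None
    return min(candidates, key=lambda o: o.cost_per_million_tokens)


# Hypothetical fleet: names and numbers are invented for this example.
fleet = [
    AcceleratorOption("gpu-pool", 0.20, 80.0, True),
    AcceleratorOption("trainium-pool", 0.10, 150.0, True),
    AcceleratorOption("other-accelerator", 0.05, 120.0, False),
]

batch_choice = pick_accelerator(fleet, max_latency_ms=500.0)       # latency-tolerant job
interactive_choice = pick_accelerator(fleet, max_latency_ms=100.0) # latency-sensitive job

print(batch_choice.name if batch_choice else "no option")
print(interactive_choice.name if interactive_choice else "no option")
```

With these invented inputs, the latency-tolerant batch job lands on the cheaper pool and the interactive job on the faster one, while the unavailable option is skipped entirely. Having more than one viable backend is what makes this kind of choice possible.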

For AI agencies, the key is staying hardware-aware. You do not have to be a chip engineer, but you should know which providers run on Trainium, GPUs or other accelerators under the hood, and how that might affect performance, pricing and regional availability.

Neuronex Intel

System Admin